I am using a command to fetch a PDF and format it asynchronously. This is the command:

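    import asyncio
    import aiohttp
    import interactions

    # Method on the bot's command class; url is not shown here.
    async def ext_command(self, ctx: interactions.CommandContext, page: int = None):
        await ctx.defer(ephemeral=False)
        loop = asyncio.get_running_loop()
        # Download the whole PDF into memory.
        async with aiohttp.ClientSession() as session:
            async with session.get(url) as response:
                r = await response.content.read()
        # Run the blocking pdfplumber work in the default thread pool.
        chunk = await loop.run_in_executor(None, self.pdf_process, r, page)
        for part in chunk:
            await ctx.send(part)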
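
To reproduce the hand-off without Discord or network access, here is a minimal sketch of the same pattern as the command above: a blocking function passed to loop.run_in_executor from a coroutine. The names blocking_work and main are hypothetical stand-ins and not part of the bot; the sample bytes are made up.

```python
import asyncio


def blocking_work(data: bytes, page: int) -> list[str]:
    # Hypothetical stand-in for pdf_process: blocking work on raw bytes,
    # returning the result split into fixed-size pieces.
    text = data.decode("utf-8") * page
    return [text[i:i + 10] for i in range(0, len(text), 10)]


async def main() -> list[str]:
    loop = asyncio.get_running_loop()
    # Passing None selects the loop's default ThreadPoolExecutor,
    # as in the command above.
    return await loop.run_in_executor(None, blocking_work, b"hello world ", 2)


result = asyncio.run(main())
print(result)
```

Because the default executor is a thread pool inside the same process, anything the worker function keeps allocated stays in the bot's process memory.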

And this is the PDF processing function:

    import datetime
    import gc
    from io import BytesIO
    import pdfplumber

    def pdf_process(self, r, page):
        with pdfplumber.open(BytesIO(r)) as pdf:
            if page is not None:
                extracted_page = pdf.pages[page-1].extract_text()
            else:
                # Derive today's page number from the date.
                current_day = datetime.datetime.now().timetuple().tm_yday
                current_month = datetime.date.today().month
                page = current_day + current_month + 7
                year = datetime.date.today().year
                if (year % 4 == 0 and year % 100 != 0) or year % 400 == 0:
                    page -= 1
                req_page = pdf.pages[page-1]
                extracted_page = req_page.extract_text()
                # Attempted fixes for the memory growth.
                del req_page._objects
                del req_page._layout
                req_page.flush_cache()
                req_page.get_text_layout.cache_clear()
        gc.collect()
        chunklength = 1900
        chunk = ''
        label = 0
        indexes = [i for i, letter in enumerate(extracted_page) if letter == '\n']
        # Use only line breaks that follow a space preceded by sentence-ending punctuation.
        for idx in indexes:
            if extracted_page[idx-1] == ' ':
                if extracted_page[idx-2] in ('.', '"', '!', ' ', '?'):
                    if label == 0:
                        label = idx
                        chunk = extracted_page[:idx] + '\n'
                    else:
                        chunk += extracted_page[label:idx] + '\n'
                        label = idx
        chunk += extracted_page[label:len(extracted_page)-1]
        # Split into pieces of at most 1900 characters.
        chunks = [chunk[i:i+chunklength] for i in range(0, len(chunk), chunklength)]
        return chunks

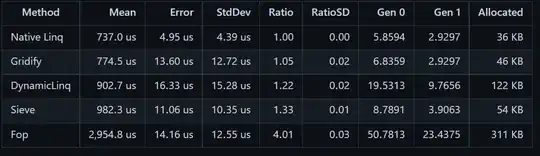

I checked out the memory leak issue at the github page and made changes (added req_page.flush_cache() and req_page.get_text_layout.cache_clear() and it shows no change whatsoever. I used memory_profiler at pdf_process() and this is what it shows:

As it shows at line 27 and 28, the taken up memory is never released and with each subsequent run of this particular command, the memory keeps on growing.

As it shows at line 27 and 28, the taken up memory is never released and with each subsequent run of this particular command, the memory keeps on growing.
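
For anyone who wants to check the per-run growth outside the bot, here is a minimal stdlib-only sketch. It assumes tracemalloc as a stand-in for memory_profiler, and leaky_call and _cache are hypothetical names that only simulate a function retaining memory between calls; they are not my actual code. Note that tracemalloc only tracks allocations made through Python's allocator, so it may not see memory held by native code.

```python
import gc
import tracemalloc

_cache = []  # hypothetical: simulates objects kept alive between calls


def leaky_call() -> int:
    # Hypothetical stand-in for pdf_process; retains about 1 MB per call.
    _cache.append(bytearray(1_000_000))
    return len(_cache)


def traced_growth(func, runs: int = 5) -> list[int]:
    """Return the traced bytes still allocated after each call to func."""
    tracemalloc.start()
    sizes = []
    for _ in range(runs):
        func()
        gc.collect()  # rule out garbage that is merely awaiting collection
        current, _peak = tracemalloc.get_traced_memory()
        sizes.append(current)
    tracemalloc.stop()
    return sizes


growth = traced_growth(leaky_call)
print(growth)
```

If the numbers keep rising even after gc.collect(), something is still holding references to the allocated objects.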

TL;DR: I'm using pdfplumber in a Discord bot and memory consumption goes up with each command run. I tried the fix from its GitHub page, no luck.

Please help