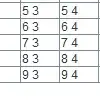

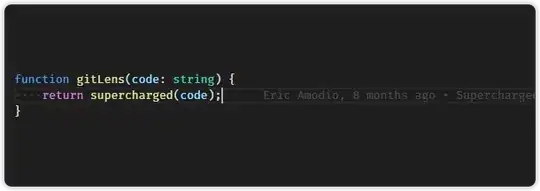

In Snowpark (Python API, version 0.11.0), I am trying to order a DataFrame by the attribute COUNT_OBJ and then show the top 5 EVENTDATES. I noticed that a subsequent `select` destroys the ordering of the DataFrame. Is that to be expected?

As a long-time Spark developer, I find this behavior unexpected.
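In text form, the pattern is roughly the following. This is only a sketch of what the screenshots show, not a copy of them: the column name `EVENTDATE`, the descending sort direction, and the two helper function names are assumptions made for illustration. `df` is assumed to be a Snowpark DataFrame; the helpers only call `sort`, `select`, and `limit` on it.

```python
def sort_then_select(df, n=5):
    """Order by COUNT_OBJ first, then project EVENTDATE.

    This is the order of operations where I see the sorting get lost.
    """
    return (
        df.sort("COUNT_OBJ", ascending=False)  # assumed: descending for "top 5"
        .select("EVENTDATE")                   # assumed column name
        .limit(n)
    )


def select_then_sort(df, n=5):
    """Project first and sort as the last transformation, for comparison.

    COUNT_OBJ has to stay in the projection so it can still be sorted on.
    """
    return (
        df.select("EVENTDATE", "COUNT_OBJ")
        .sort("COUNT_OBJ", ascending=False)
        .limit(n)
    )
```

Calling `.show()` on the result of each helper would let the two orders of operations be compared side by side. I am not claiming the second variant is guaranteed to preserve the order; it is only the variant I would have expected to be equivalent.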

EDIT: More output as requested in comments: