I am trying to fine-tune a RoBERTa model for sentiment analysis. I downloaded the model locally from Hugging Face.

Below is my code for fine-tuning. The dataset is Amazon reviews, and the rating (`overall`) goes from 1 to 5:

```python
import numpy as np
import torch
import torch.nn as nn
from torch.nn.utils import clip_grad_norm_
from torch.optim import Adam
from tqdm import tqdm
from transformers import AutoTokenizer, AutoModelForSequenceClassification

electronics_reivews = electronics_reivews[['overall', 'reviewText']]

model_name = 'twitter-roberta-base-sentiment'
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

tokenizer = AutoTokenizer.from_pretrained(model_name, num_labels=3)


class Dataset(torch.utils.data.Dataset):

    def __init__(self, data):
        self.labels = [int(x) for x in data['overall']]
        # text_preprocessing is my own cleaning helper (not shown)
        self.texts = [tokenizer(text_preprocessing(text), padding='max_length', max_length=512,
                                truncation=True, return_token_type_ids=True, return_tensors="pt")
                      for text in data['reviewText']]

    def classes(self):
        return self.labels

    def __len__(self):
        return len(self.labels)

    def get_batch_labels(self, idx):
        return np.array(int(self.labels[idx]))

    def get_batch_texts(self, idx):
        return self.texts[idx]

    def __getitem__(self, idx):
        text = self.get_batch_texts(idx)
        target = self.get_batch_labels(idx)
        return text, target


class RobertaClassifier(nn.Module):

    def __init__(self):
        super(RobertaClassifier, self).__init__()
        self.roberta = AutoModelForSequenceClassification.from_pretrained(model_name)
        self.l2 = torch.nn.Linear(768, 3)

    def forward(self, input_id, mask):
        out = self.roberta(input_ids=input_id, attention_mask=mask, return_dict=False)
        return self.l2(out)


def train(model, train_data, learning_rate, epochs):
    train = Dataset(train_data)
    train_dataloader = torch.utils.data.DataLoader(train, batch_size=6, shuffle=True)
    use_cuda = torch.cuda.is_available()
    loss_function = nn.CrossEntropyLoss()
    optimizer = Adam(model.parameters(), lr=learning_rate)

    if use_cuda:
        model = model.cuda()
        loss_function = loss_function.cuda()

    for epoch_num in range(epochs):
        total_acc_train = 0
        total_loss_train = 0

        for data, label in tqdm(train_dataloader):
            optimizer.zero_grad()
            targets = label.to(device, dtype=torch.long)
            mask = data['attention_mask'].to(device, dtype=torch.long)
            input_id = data['input_ids'].squeeze(1).to(device, dtype=torch.long)
            model.zero_grad()

            predictions = model(input_id, mask)
            batch_loss = loss_function(predictions, targets)
            total_loss_train += batch_loss.mean().item()
            _, pred_classes = torch.max(predictions, dim=1)

            batch_loss.mean().backward()
            clip_grad_norm_(model.parameters(), 1.0)
            optimizer.step()
            total_acc_train += torch.sum(pred_classes == targets)
        else:
            # for-else: runs once the batch loop has finished for this epoch
            torch.save(model.state_dict(), f'models/eng_sent{epoch_num}_.pt')
            print('Epochs: ', epoch_num + 1, ' Train Loss: ', total_loss_train / len(train_data),
                  ' | Train Accuracy: ', total_acc_train / len(train_data))


EPOCHS = 6
model = RobertaClassifier()
model = nn.parallel.DataParallel(model, device_ids=[0, 1, 2, 3, 4, 5, 6, 7, 8])
model.to(device)
```
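One detail in this setup that is easy to miss: `overall` holds star ratings from 1 to 5, but the classifier produces 3 classes, and `nn.CrossEntropyLoss` expects integer targets in the range 0 to 2 when there are 3 classes. On CPU an out-of-range target raises an error right away; on GPU it typically surfaces as a device-side assert. A minimal sketch of one possible mapping, assuming 1 to 2 stars are negative, 3 is neutral and 4 to 5 are positive. That grouping and the helper name `rating_to_label` are hypothetical, and the label order should be checked against the checkpoint's `config.id2label`.

```python
import torch
import torch.nn as nn


def rating_to_label(rating):
    """Hypothetical mapping: 1-2 stars -> 0 (negative), 3 -> 1 (neutral), 4-5 -> 2 (positive)."""
    r = int(rating)
    if not 1 <= r <= 5:
        raise ValueError(f"rating out of range: {rating}")
    if r <= 2:
        return 0
    if r == 3:
        return 1
    return 2


ratings = [1.0, 2.0, 3.0, 4.0, 5.0]  # 'overall' values as stored in the dataframe
targets = torch.tensor([rating_to_label(r) for r in ratings])
logits = torch.randn(len(ratings), 3)  # stand-in for model output of shape (batch, 3)

loss_function = nn.CrossEntropyLoss()
loss = loss_function(logits, targets)  # every target is a valid class index in {0, 1, 2}

# Using the raw ratings as targets fails: 3, 4 and 5 are not valid indices for 3 classes
raw_targets = torch.tensor([int(r) for r in ratings])
try:
    loss_function(logits, raw_targets)
    raw_failed = False
except (IndexError, RuntimeError):
    raw_failed = True
```

In the `Dataset` above, this would mean building `self.labels` from `rating_to_label(x)` instead of `int(x)`.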

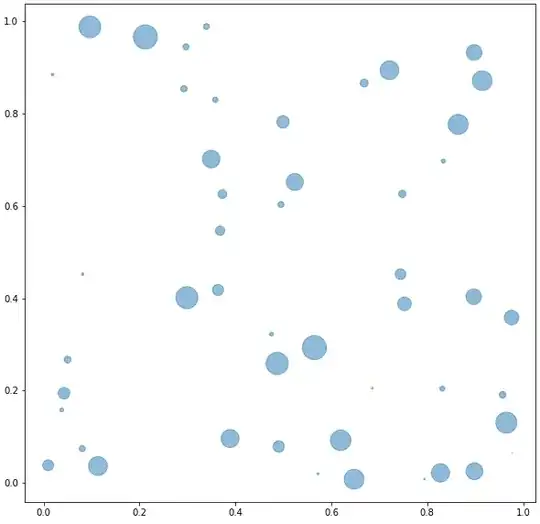

The error I am getting is shown in the image.


When I change the output of the roberta layer to `return self.l2(out)`, I get this error instead: `TypeError: linear(): argument 'input' (position 1) must be Tensor, not tuple`
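That `TypeError` comes from `nn.Linear` receiving a tuple: with `return_dict=False`, a Hugging Face model returns a tuple of tensors rather than a single tensor. A small pure-PyTorch sketch, assuming the first element of that tuple is a `(batch, 3)` logits tensor (random values and a batch of 4 stand in for the real output), reproduces the error and shows why unwrapping the tuple alone is not enough: a layer declared with `in_features=768` cannot consume a tensor with 3 features.

```python
import torch
import torch.nn as nn

l2 = nn.Linear(768, 3)

# Stand-in for self.roberta(..., return_dict=False) on a sequence-classification
# model: a 1-tuple whose only element is a (batch, 3) logits tensor.
out = (torch.randn(4, 3),)

try:
    l2(out)  # passing the tuple itself
    tuple_error = None
except TypeError as e:
    tuple_error = e  # linear(): argument 'input' (position 1) must be Tensor, not tuple

try:
    l2(out[0])  # now a tensor, but it has 3 features, not 768
    shape_error = None
except RuntimeError as e:
    shape_error = e  # matrix shape mismatch
```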

The output I am getting from the roberta layer in the forward function is:

```
(tensor([[-2.4036, 1.0603, 1.3976],
        [-1.6627, 1.4847, -0.0503],
        [-1.9332, 0.6074, 1.5003],
        [-1.8939, 0.3645, 1.6951]], device='cuda:0', grad_fn=<AddmmBackward>),)
```
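This printed value is a 1-tuple holding a `(4, 3)` tensor. `AutoModelForSequenceClassification` already ends in a classification head, so with `return_dict=False` its first output is the per-class logits rather than a 768-dimensional sentence representation. A sketch that reproduces those shapes offline, assuming a tiny randomly initialised `RobertaConfig` as a stand-in for the real checkpoint (the sizes are arbitrary), and feeding the logits straight into `nn.CrossEntropyLoss` with no extra `nn.Linear`:

```python
import torch
import torch.nn as nn
from transformers import RobertaConfig, RobertaForSequenceClassification

# Tiny random config standing in for twitter-roberta-base-sentiment (sizes are arbitrary).
config = RobertaConfig(vocab_size=100, hidden_size=32, num_hidden_layers=1,
                       num_attention_heads=2, intermediate_size=64,
                       max_position_embeddings=64, num_labels=3)
model = RobertaForSequenceClassification(config)
model.eval()

batch_size, seq_len = 4, 8
# Start ids at 2 so none equal the pad id (1), which RoBERTa uses when building position ids.
input_ids = torch.randint(2, config.vocab_size, (batch_size, seq_len))
attention_mask = torch.ones(batch_size, seq_len, dtype=torch.long)

with torch.no_grad():
    out = model(input_ids=input_ids, attention_mask=attention_mask, return_dict=False)

logits = out[0]  # (batch, num_labels): the head's output, already usable as predictions
targets = torch.tensor([0, 1, 2, 2])
loss = nn.CrossEntropyLoss()(logits, targets)
```

Under that reading, `forward` could simply return `out[0]` and drop `self.l2`.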

If I use `out[0]`, I get this:

```
tensor([[-0.6168, -0.2711, 0.0749, ..., -0.0882, -0.3910, 0.7054],
        [-0.6988, -0.2166, -0.7000, ..., -0.7296, -0.5313, 0.8282],
        [-0.7566, -0.3149, -0.3319, ..., -0.0722, -0.5641, 0.7540],
        [-0.8619, -0.3192, -0.2780, ..., -0.0820, -0.6005, 0.9199]],
       device='cuda:3', grad_fn=<TanhBackward>)
```
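The `TanhBackward` in this output suggests a pooler output: BERT-style pooler layers take the hidden state of the first token and pass it through a dense layer followed by `tanh`, giving one `hidden_size` vector per example, which is the shape `nn.Linear(768, 3)` expects. A minimal pure-PyTorch sketch of that computation, with random hidden states standing in for the encoder output; `TinyPooler` is a hypothetical name, not a library class.

```python
import torch
import torch.nn as nn


class TinyPooler(nn.Module):
    """Hypothetical re-creation of a BERT-style pooler: first-token hidden state -> Linear -> tanh."""

    def __init__(self, hidden_size):
        super().__init__()
        self.dense = nn.Linear(hidden_size, hidden_size)
        self.activation = nn.Tanh()

    def forward(self, hidden_states):
        first_token = hidden_states[:, 0]  # (batch, hidden_size)
        return self.activation(self.dense(first_token))


batch_size, seq_len, hidden_size = 4, 16, 768
hidden_states = torch.randn(batch_size, seq_len, hidden_size)  # stand-in encoder output

pooled = TinyPooler(hidden_size)(hidden_states)  # grad_fn is a TanhBackward node
logits = nn.Linear(hidden_size, 3)(pooled)       # 768 -> 3 fits here
```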

The exact same code works with BERT. However, I load BERT with:

```python
self.bert = BertModel.from_pretrained(model_name)

_, pooled_output = self.bert(input_ids=input_id, attention_mask=mask, return_dict=False)
```
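`BertModel` is the bare encoder with no classification head, so its second output is the pooled `(batch, hidden_size)` tensor, and `nn.Linear(768, 3)` fits on top of it. The RoBERTa counterpart of that setup would load the bare encoder (for example `AutoModel.from_pretrained(model_name)` or `RobertaModel.from_pretrained(model_name)`) rather than `AutoModelForSequenceClassification`. A sketch of that variant, assuming a tiny randomly initialised `RobertaConfig` in place of the downloaded checkpoint so it runs offline; `RobertaEncoderClassifier` is a hypothetical name. If the checkpoint was saved from a sequence-classification model, it may not contain pooler weights, in which case `transformers` initialises the pooler randomly and prints a warning.

```python
import torch
import torch.nn as nn
from transformers import RobertaConfig, RobertaModel

# Tiny random config so the sketch runs offline; in practice this would be
# RobertaModel.from_pretrained(model_name) (or AutoModel) instead.
config = RobertaConfig(vocab_size=100, hidden_size=32, num_hidden_layers=1,
                       num_attention_heads=2, intermediate_size=64,
                       max_position_embeddings=64)


class RobertaEncoderClassifier(nn.Module):
    """Hypothetical variant of RobertaClassifier built on the bare encoder."""

    def __init__(self, config, num_labels=3):
        super().__init__()
        self.roberta = RobertaModel(config)  # no classification head; includes a pooler by default
        self.l2 = nn.Linear(config.hidden_size, num_labels)  # hidden_size is 768 for roberta-base

    def forward(self, input_id, mask):
        outputs = self.roberta(input_ids=input_id, attention_mask=mask, return_dict=False)
        pooled_output = outputs[1]  # (batch, hidden_size), same role as BERT's pooled_output
        return self.l2(pooled_output)


model = RobertaEncoderClassifier(config)
input_id = torch.randint(2, config.vocab_size, (4, 8))  # ids >= 2 avoid the pad id 1
mask = torch.ones(4, 8, dtype=torch.long)
predictions = model(input_id, mask)
```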

I checked the pooled output tensor from BERT, and it looked different: there were far more than 4 lists inside the outer list.