I am trying to create an "instant cloud flow" on Power Automate.

Context:

Excel file "A" contains a table "X" which gets updated regularly with new rows at the bottom containing new information.

Excel file "B" contains a table "Y" with the same characteristics, number of columns, headers etc. Except for the number of rows since the table "X" is being updated regularly with new rows of data.

Both files are stored on OneDrive cloud and will possibly move into Sharepoint file storage, so they will be in the cloud, not stored locally on any device.

Challenge/need:

- I need table "Y", within file "B", to mirror the changes happening on table "X" from file "A". Specifically the new rows of data being added to table "X":

Internet/world > New rows of data at the bottom of Table "X" of file "A" > These same new rows get copied into also the bottom of Table "Y" of file "B". Basically both tables, "X" and "Y" need to stay exactly the same with a maximum interval of 3 minutes.

Solution tried:

- I tried a flow which gets triggered every minute. In this flow, I tried creating an array containing the new rows of data added to table "X". Then using the

Apply to eachcontrol with the values from this new array, I tried the actionsAdd a row into a table, followed byUpdate a rowfor each item inside this array. Keeping in this way table "Y" updated as per table "X". This part works, rows are added and updated on table "Y".

My problem:

- The

Conditionthat compares the data from the 2 tables, decides that all rows from table "X" are new data, even though some are already present in table "Y". This is a problem because too many rows are added to table "Y" and the tables become out of sync due to the difference in the number of rows/body length. In my understanding, this happens because an item/object is generated byList rows present in a tablecalledItemInternalId.

This ItemInternalId generates different id numbers for the same rows already updated previously, and because of this, the condition identifies all rows on table "X" as new data to be updated on table "Y".

Questions:

Could someone confirm that this

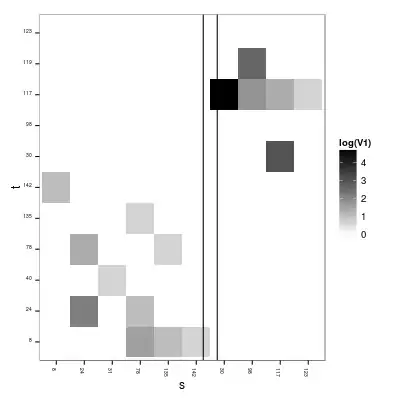

ItemInternalIdis the problem here? I am in doubt because I tried removing this by creating another array using theSelectaction and then proceeded using just the columns/headers I need, excluding this wayItemInternalId. Problem is that the "header" is excluded (which I need), containing only the value, and also the condition proceeds to identify all rows on "X" as new data again anyway...Maybe the problem is that I am doing it wrong and there is another simple, or better way to get an array with the new items from table "X"? Here is the condition that I use to try to feed feed a new Array with the new rows from table "X":

Thank you