I use persist() to cache a DataFrame at the MEMORY_AND_DISK storage level, and I have been observing a strange pattern.
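A minimal sketch of that caching step, assuming PySpark. The helper name `persist_and_materialize` and the use of `count()` as the materializing action are illustrative placeholders, not the actual job code.

```python
def persist_and_materialize(df, storage_level):
    """Persist `df` and run an action so its partitions are actually cached.

    Assumption: `df` is a pyspark.sql.DataFrame and `storage_level` is a
    pyspark.StorageLevel such as StorageLevel.MEMORY_AND_DISK.
    """
    # persist() is lazy: it only marks the DataFrame for caching.
    df.persist(storage_level)
    # count() is an action: it computes every partition, which populates the cache.
    row_count = df.count()
    # storageLevel reports the level the DataFrame is currently marked with.
    return row_count, df.storageLevel
```

On a cluster this would be called as `persist_and_materialize(df, StorageLevel.MEMORY_AND_DISK)` after `from pyspark import StorageLevel`. The "Fraction Cached" value in the Storage tab of the Spark UI can then be compared before and after the later jobs.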

The persisted DataFrame is 100% cached once the job that performs the necessary transformations (Job 6 in the screenshot below) completes. After Job 9 (a data quality check), however, the fraction cached dropped to 55%, which forced Spark to recompute the partially lost data (visible in Job 12). The metrics (Ganglia UI on Databricks) also show that at least 50 GB of memory was available at any given time.

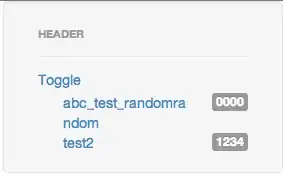

(The image is partially masked to avoid exposing sensitive data.)

Why would Spark discard or flush a 50 MB object persisted to memory and disk when there is enough memory for the other transformations and actions? Is there a way to avoid this, other than the workaround of explicitly writing the DataFrame to temporary storage?