While studying the literature on physics engines, I've noticed that almost every one of them uses semi-implicit Euler. Its basic implementation uses the following two equations:

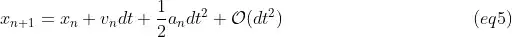

However, since we already have the second-order derivative of the position anyway, why don't we use a Taylor expansion? This would result in the following two equations:
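Written out in text form, with $x$, $v$ and $a$ for position, velocity and acceleration and $\Delta t$ for the time step (my notation, and assuming the acceleration is evaluated at the start of the step), the Taylor-based update would read roughly:

$$
\begin{aligned}
x_{t+\Delta t} &= x_t + v_t\,\Delta t + \tfrac{1}{2}\,a_t\,\Delta t^2 \\
v_{t+\Delta t} &= v_t + a_t\,\Delta t
\end{aligned}
$$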

If we compare these two sets of equations (substituting the first semi-implicit Euler equation into the second), you can see that the position updates differ in the second-order term:
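As a sketch of that substitution, assume semi-implicit Euler in its usual form, $v_{t+\Delta t} = v_t + a_t\,\Delta t$ followed by $x_{t+\Delta t} = x_t + v_{t+\Delta t}\,\Delta t$ (the symbols are my own labels). The first line below is the resulting semi-implicit position update, the second is the Taylor position update for comparison:

$$
\begin{aligned}
\text{semi-implicit Euler:}\quad x_{t+\Delta t} &= x_t + v_t\,\Delta t + a_t\,\Delta t^2 \\
\text{Taylor expansion:}\quad x_{t+\Delta t} &= x_t + v_t\,\Delta t + \tfrac{1}{2}\,a_t\,\Delta t^2
\end{aligned}
$$

Under these assumptions the two agree up to the first-order term and differ only in the coefficient of $a_t\,\Delta t^2$.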

To check whether an implementation like this would be feasible, I also skimmed through some of the integrator source code of MuJoCo, and I did not see an immediate drawback to implementing this method (only a rather small extra computation cost for adding that extra term separately).
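To make the comparison concrete, here is a minimal Python sketch of both update rules applied to a unit-mass, unit-stiffness harmonic oscillator. This is a toy setup of my own and not MuJoCo's code; the function names, the test system and the step size are all assumptions chosen for illustration.

```python
def semi_implicit_euler_step(x, v, accel, dt):
    """Update velocity first, then use the NEW velocity for the position."""
    v_new = v + accel(x) * dt
    x_new = x + v_new * dt
    return x_new, v_new


def taylor_step(x, v, accel, dt):
    """Second-order Taylor update for position, explicit Euler for velocity."""
    a = accel(x)
    x_new = x + v * dt + 0.5 * a * dt**2
    v_new = v + a * dt
    return x_new, v_new


def simulate(step, x0=1.0, v0=0.0, dt=0.01, n_steps=10_000):
    """Integrate x'' = -x (assumed test system) and return the final energy."""
    accel = lambda x: -x  # unit mass, unit spring constant
    x, v = x0, v0
    for _ in range(n_steps):
        x, v = step(x, v, accel, dt)
    return 0.5 * (x * x + v * v)  # kinetic + potential energy


energy_semi = simulate(semi_implicit_euler_step)
energy_taylor = simulate(taylor_step)
print(f"initial energy:       {0.5:.4f}")
print(f"semi-implicit Euler:  {energy_semi:.4f}")
print(f"Taylor position step: {energy_taylor:.4f}")
```

The exact solution conserves energy, so comparing the two printed values with the initial energy gives a rough feel for how each scheme behaves over many steps, beyond the single-step difference in the $\Delta t^2$ term.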

So my question remains: Why are physics engines not using a Taylor expansion for the position?