I am a hobbyist, so please excuse me if this question is too basic.

As a test, I am trying to recreate a camera that I created in 3D (in Maya), looking at a plane with 4 variable points. The camera has the following values:

- Tx: 53
- Ty: 28
- Tz: 69
- Rx: -5
- Ry: 42
- Rz: 0

It has a focal length of 100.
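One thing worth checking: the camera matrix built in `focalLength_to_camera_matrix` treats the focal length as a value in pixels, whereas Maya's focal length is given in millimetres. A minimal sketch of the usual conversion, assuming square pixels and that the image width corresponds to the camera's horizontal film aperture; the function name and the 36 mm / 1920 px numbers are placeholders, not values taken from this scene:

```python
def focal_mm_to_pixels(focal_mm, sensor_width_mm, image_width_px):
    """Hypothetical helper: focal length in mm -> focal length in pixels.

    Assumes square pixels and that image_width_px spans the full
    horizontal sensor (film aperture) width sensor_width_mm.
    """
    return focal_mm * image_width_px / sensor_width_mm


# Placeholder numbers: 100 mm lens, 36 mm wide film back, 1920 px wide render.
fx = focal_mm_to_pixels(100.0, 36.0, 1920)
print(round(fx, 2))  # 5333.33
```

Maya lists the camera aperture in inches as well as millimetres; if you read the inch value, multiply it by 25.4 before passing it in.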

- My first question: in Maya the up axis is Y. Is this the same when calculating with OpenCV, or is the up axis Z?
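As far as the conventions go: `cv2.solvePnP` does not assume any world up axis; its result is expressed relative to whatever frame `points_3D` is given in (here, Maya's world coordinates). What does differ is the camera's own frame: an OpenCV camera looks along its +Z axis with +Y pointing down in the image, while a Maya camera looks along its -Z axis with +Y up. A minimal sketch of that axis conversion, assuming you already have the OpenCV camera-to-world rotation (the transpose of the matrix `cv2.Rodrigues` returns for solvePnP's `rvec`); the helper name `cv_to_maya_camera_rotation` is hypothetical:

```python
import numpy as np

# OpenCV camera frame: +X right, +Y down, +Z forward (viewing direction).
# Maya camera frame:   +X right, +Y up,   -Z forward.
# Negating Y and Z (a 180 degree rotation about X) maps one frame onto the other.
CV_TO_MAYA_AXES = np.diag([1.0, -1.0, -1.0])


def cv_to_maya_camera_rotation(R_cam_to_world):
    """Hypothetical helper: OpenCV camera-to-world rotation -> Maya-style one.

    A direction v given in Maya camera axes equals CV_TO_MAYA_AXES @ v in
    OpenCV camera axes, so world = R_cam_to_world @ CV_TO_MAYA_AXES @ v.
    """
    return np.asarray(R_cam_to_world, dtype=float) @ CV_TO_MAYA_AXES


# Usage: an OpenCV camera whose axes coincide with the world axes.
R_maya = cv_to_maya_camera_rotation(np.eye(3))
forward_world = R_maya @ np.array([0.0, 0.0, -1.0])  # Maya "forward" in world coordinates
print(forward_world)
```

Because the flip is a proper rotation (determinant +1), the result is still a valid rotation matrix that can be decomposed into Euler angles.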

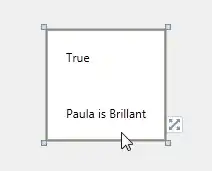

The look through the camera is the following:

The image I want to recreate the camera from is called `cameraView.jpg` and looks like this:

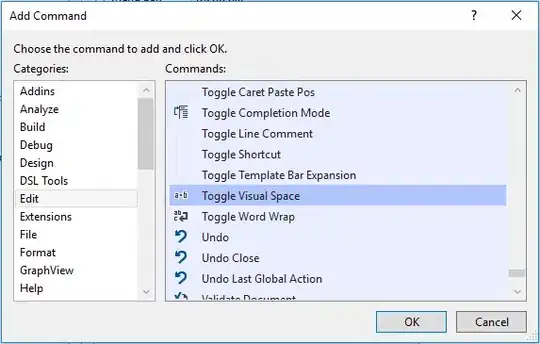

This is the code I use to recreate the camera:

```python
import math

import cv2
import numpy as np


def focalLength_to_camera_matrix(focalLength, image_size):
    # Pinhole camera matrix with the principal point at the image centre.
    # OpenCV interprets fx and fy (here both focalLength) in pixels.
    w, h = image_size[0], image_size[1]
    K = np.array([
        [focalLength, 0, w / 2],
        [0, focalLength, h / 2],
        [0, 0, 1],
    ], dtype="double")
    return K


def rot_params_rv(rvecs):
    # Rodrigues rotation vector -> 3x3 rotation matrix.
    # This R maps world coordinates into camera coordinates.
    R = cv2.Rodrigues(rvecs)[0]
    roll = math.degrees(math.atan2(-R[2][1], R[2][2]))
    pitch = math.degrees(math.asin(R[2][0]))
    yaw = math.degrees(math.atan2(-R[1][0], R[0][0]))
    return [roll, pitch, yaw]


# Read Image
im = cv2.imread("assets/cameraView.jpg")
if im is None:
    raise FileNotFoundError("Could not read assets/cameraView.jpg")
imageHeight, imageWidth = im.shape[:2]
imageSize = [imageWidth, imageHeight]

# Pixel coordinates (x, y) of the 4 points in the image
points_2D = np.array([
    (750.393882, 583.560379),
    (1409.44155, 593.845944),
    (788.196876, 1289.485585),
    (1136.729733, 1317.203244),
], dtype="double")

# World coordinates of the same 4 points, in the same order
points_3D = np.array([
    (-4.220791, 25.050909, 9.404016),
    (4.163141, 25.163363, 9.5773660),
    (-2.268313, 18.471558, 10.948839),
    (2.109119, 18.56548, 10.945459),
], dtype="double")

focal_length = 100
cameraMatrix = focalLength_to_camera_matrix(focal_length, imageSize)
distCoeffs = np.zeros((5, 1))  # no lens distortion

# cv2.SOLVEPNP_ITERATIVE is the same as flags=0
success, rvecs, tvecs = cv2.solvePnP(points_3D, points_2D, cameraMatrix,
                                     distCoeffs, flags=cv2.SOLVEPNP_ITERATIVE)
rot_vals = rot_params_rv(rvecs)

print("Translation vector")
print(tvecs)
print("")
print("Rotation vector")
print(rvecs)
print("")
print("Rotation values")
print(rot_vals)
print("")
```

I am still confused about how to get the correct rotation and translation values from the vectors returned by `cv2.solvePnP`. While searching for the problem I found the `rot_params_rv(rvecs)` function that somebody posted here, but it does not give me the correct camera position.
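For reference, the model that `cv2.solvePnP` fits is `x_cam = R @ X_world + t`, where `R = cv2.Rodrigues(rvec)[0]` and `t = tvec`. So `tvec` is not the camera's position: it is the world origin expressed in camera coordinates. A minimal sketch of inverting that transform with NumPy; the function name `camera_pose_from_pnp` is hypothetical:

```python
import numpy as np


def camera_pose_from_pnp(R, tvec):
    """Hypothetical helper: invert solvePnP's world->camera transform.

    R    : 3x3 rotation matrix, e.g. cv2.Rodrigues(rvec)[0]
    tvec : translation returned by cv2.solvePnP, shape (3,) or (3, 1)

    Since x_cam = R @ X + t, the camera centre C satisfies R @ C + t = 0,
    i.e. C = -R^T @ t. The columns of R^T are the camera's own x, y and z
    axes (OpenCV convention) expressed in world coordinates.
    """
    t = np.asarray(tvec, dtype=float).reshape(3)
    R_cam_to_world = np.asarray(R, dtype=float).T
    camera_position = -R_cam_to_world @ t
    return camera_position, R_cam_to_world


# Example with a made-up pose: camera at (1, 2, 3) with no rotation.
R = np.eye(3)
t = -R @ np.array([1.0, 2.0, 3.0])
position, orientation = camera_pose_from_pnp(R, t)
print(position)  # [1. 2. 3.]
```

With real data you would pass `cv2.Rodrigues(rvecs)[0]` and `tvecs` from the code above; the returned position is in the same units and frame as `points_3D`.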

- So my second question is: how do I get the correct rotation and translation values from the rotation and translation vectors?

Am I missing a step?
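A related pitfall is that Euler angles are only comparable when they describe the same matrix with the same rotate order. `rot_params_rv` decomposes the world-to-camera matrix, while Maya's Rx/Ry/Rz describe the camera's orientation in the world; the matrix to decompose would be the camera-to-world rotation (R transposed) after converting OpenCV's camera axes to Maya's. A minimal sketch of a decomposition for an X-then-Y-then-Z rotate order, assuming that matches Maya's default `xyz` rotate order; both helper names are hypothetical:

```python
import math

import numpy as np


def rotation_xyz(rx, ry, rz):
    """Hypothetical helper: rotation matrix from Euler angles in degrees.

    Rotate order X, then Y, then Z; acting on column vectors this is
    R = Rz @ Ry @ Rx.
    """
    a, b, c = (math.radians(v) for v in (rx, ry, rz))
    Rx = np.array([[1.0, 0.0, 0.0],
                   [0.0, math.cos(a), -math.sin(a)],
                   [0.0, math.sin(a), math.cos(a)]])
    Ry = np.array([[math.cos(b), 0.0, math.sin(b)],
                   [0.0, 1.0, 0.0],
                   [-math.sin(b), 0.0, math.cos(b)]])
    Rz = np.array([[math.cos(c), -math.sin(c), 0.0],
                   [math.sin(c), math.cos(c), 0.0],
                   [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx


def euler_xyz_from_matrix(R):
    """Hypothetical helper: inverse of rotation_xyz, in degrees.

    Valid away from ry = +/-90 degrees (gimbal lock).
    """
    ry = math.asin(max(-1.0, min(1.0, -R[2, 0])))  # R[2, 0] = -sin(ry)
    rx = math.atan2(R[2, 1], R[2, 2])
    rz = math.atan2(R[1, 0], R[0, 0])
    return tuple(math.degrees(v) for v in (rx, ry, rz))


# Round trip with the camera's own rotation values.
print(euler_xyz_from_matrix(rotation_xyz(-5.0, 42.0, 0.0)))  # approximately (-5.0, 42.0, 0.0)
```

Checking the round trip like this first makes it easier to tell whether a mismatch comes from the decomposition or from feeding it the wrong matrix.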

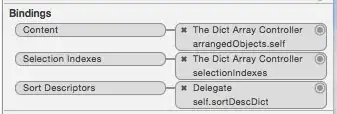

When putting in the values as a new camera in my 3D application it looks like this:

The green camera is the position I get from solvePnP (probably after the wrong vector conversion); the camera on the right is where it should be.

- My third question: where could I look into how the solvePnP function works (ideally in Python)? Right now it is a bit of a black box to me.
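For reference, `cv2.solvePnP` itself is implemented in C++ in OpenCV's calib3d module, and the documentation describes the default `SOLVEPNP_ITERATIVE` method as a Levenberg-Marquardt optimization that finds the pose minimizing the reprojection error. The projection model being fitted fits in a few lines of NumPy, which also gives a way to sanity-check a result: project `points_3D` with the returned pose and compare against `points_2D` (`cv2.projectPoints` does the same, including distortion). A minimal sketch without lens distortion; the function names are hypothetical:

```python
import numpy as np


def project_points(points_3d, R, t, K):
    """Hypothetical helper: pinhole projection without distortion, pixel ~ K @ (R @ X + t)."""
    X = np.asarray(points_3d, dtype=float)
    cam = X @ R.T + np.asarray(t, dtype=float).reshape(1, 3)  # world -> camera
    uvw = cam @ K.T                                           # camera -> homogeneous pixels
    return uvw[:, :2] / uvw[:, 2:3]                           # perspective divide by depth


def reprojection_rmse(points_3d, points_2d, R, t, K):
    """Root-mean-square pixel distance between projected and observed points."""
    diff = project_points(points_3d, R, t, K) - np.asarray(points_2d, dtype=float)
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))


# Made-up numbers: identity rotation, world origin 10 units in front of the camera.
K = np.array([[1000.0, 0.0, 500.0],
              [0.0, 1000.0, 400.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 10.0])
pts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
print(project_points(pts, R, t, K))
```

A pose from solvePnP that is correct for the given camera matrix should give a small RMSE on the four points; a large value usually points at a wrong camera matrix or mismatched point order.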

Thank you very much for helping me.