Hi, I'm training my PyTorch model on a remote server.

All jobs are managed by Slurm.

My problem is that training becomes extremely slow after the first epoch.

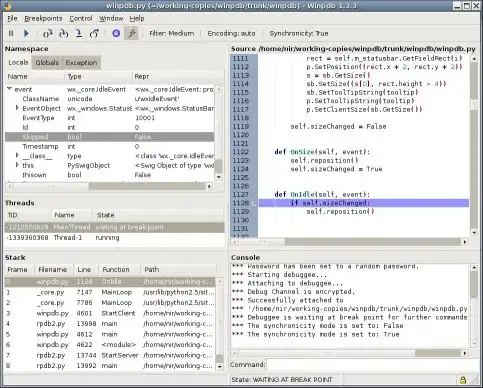

I checked GPU utilization.

During the first epoch, utilization looked like the image below: the GPU was clearly being used.

But from the second epoch on, the utilization percentage is almost zero.
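To get numbers instead of screenshots, GPU utilization can be logged from inside the Slurm job, for example once per epoch next to the loss. A minimal sketch, assuming `nvidia-smi` is on the compute node's `PATH`; the helper names `parse_utilization` and `sample_gpu_utilization` are hypothetical. Note that `utilization.gpu` is an instantaneous sample, so a single reading can be misleading; sampling every N batches gives a better picture.

```python
import subprocess


def parse_utilization(text):
    """Parse the output of
    `nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits`
    (one integer percentage per line, one line per GPU) into a list of ints."""
    return [int(line.strip()) for line in text.splitlines() if line.strip()]


def sample_gpu_utilization():
    """Query nvidia-smi once and return the current utilization (%) of every
    GPU that nvidia-smi can see on this node."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=utilization.gpu", "--format=csv,noheader,nounits"],
        capture_output=True,  # collect stdout instead of printing it
        text=True,            # decode bytes to str
        check=True,           # raise if nvidia-smi exits with an error
    ).stdout
    return parse_utilization(out)
```

Calling `print(epoch, sample_gpu_utilization())` inside the epoch loop would put the readings into the Slurm output file alongside the loss values.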

My dataset code looks like this:

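```python
import torch
from PIL import Image
from torch.utils.data import Dataset


class img2selfie_dataset(Dataset):
    def __init__(self, path, transform, csv_file, cap_vec):
        self.path = path
        self.transformer = transform
        # Full image paths built from the 'file_name' column of the CSV DataFrame
        self.images = [path + item for item in list(csv_file['file_name'])]
        # Pre-computed caption vectors, aligned index-by-index with self.images
        self.smiles_list = cap_vec

    def __getitem__(self, idx):
        # Load and preprocess the image from disk on every access
        img = Image.open(self.images[idx])
        img = self.transformer(img)
        label = self.smiles_list[idx]
        label = torch.Tensor(label)
        return img, label.type(torch.LongTensor)

    def __len__(self):
        return len(self.images)
```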

My DataLoaders are defined like this:

```python
train_data_set = img2selfie_dataset(train_path, preprocess, train_dataset, train_cap_vec)
train_loader = DataLoader(train_data_set, batch_size=256, num_workers=2, pin_memory=True)

val_data_set = img2selfie_dataset(train_path, preprocess, val_dataset, val_cap_vec)
val_loader = DataLoader(val_data_set, batch_size=256, num_workers=2, pin_memory=True)
```

My training loop is defined like this:

```python
train_loss = []
valid_loss = []
epochs = 20
best_loss = 1e5

for epoch in range(1, epochs + 1):
    print('Epoch {}/{}'.format(epoch, epochs))
    print('-' * 10)

    epoch_train_loss, epoch_valid_loss = train(encoder_model, transformer_decoder, train_loader, val_loader, criterion, optimizer)
    train_loss.append(epoch_train_loss)
    valid_loss.append(epoch_valid_loss)

    if len(valid_loss) > 1:
        if valid_loss[-1] < best_loss:
            print(f"valid loss on this {epoch} is better than previous one, saving model.....")
            torch.save(encoder_model.state_dict(), 'model/encoder_model.pickle')
            torch.save(transformer_decoder.state_dict(), 'model/decoder_model.pickle')
            best_loss = valid_loss[-1]
            print(best_loss)

    print(f'Epoch : [{epoch}] Train Loss : [{train_loss[-1]:.5f}], Valid Loss : [{valid_loss[-1]:.5f}]')
```

In my opinion, if this problem came from my code, it wouldn't have hit 100% utilization in the first epoch.
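One way to test that assumption is to measure, per epoch, how long the loop waits for the next batch versus how long it spends in the training step. A minimal sketch, assuming the body of `train()` can be wrapped as a per-batch function; `profile_epoch` and `step_fn` are hypothetical names. Because CUDA kernels run asynchronously, `step_fn` should end with `torch.cuda.synchronize()` when it runs on the GPU, otherwise GPU time leaks into the next "data wait" measurement.

```python
import time


def profile_epoch(loader, step_fn):
    """Iterate once over `loader`, calling `step_fn(batch)` on each batch.

    Returns (data_wait_s, compute_s): total seconds spent blocked waiting for
    batches (including iterator start-up) vs. total seconds inside step_fn.
    """
    t0 = time.perf_counter()
    # For a DataLoader with num_workers > 0, creating the iterator starts the workers.
    it = iter(loader)
    data_wait = time.perf_counter() - t0
    compute = 0.0
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)
        except StopIteration:
            break
        t1 = time.perf_counter()
        step_fn(batch)  # forward/backward/optimizer step for one batch
        t2 = time.perf_counter()
        data_wait += t1 - t0
        compute += t2 - t1
    return data_wait, compute
```

If `data_wait_s` jumps from the second epoch on while `compute_s` stays roughly flat, the slowdown is on the input side (dataset/DataLoader) rather than in the model step.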