As the title says, I want to use HomographyWarper from kornia so that it gives the same output as OpenCV warpPerspective.

import cv2

import numpy as np

import torch

from kornia.geometry.transform import HomographyWarper

from kornia.geometry.conversions import normalize_pixel_coordinates

image_cv = cv2.imread("./COCO_train2014_000000000009.jpg")

image_cv = image_cv[0:256, 0:256]

image = torch.tensor(image_cv).permute(2, 0, 1)

image = image.to('cuda:0')

image_reshaped = image.type(torch.float32).view(1, *image.shape)

height, width, _ = image_cv.shape

homography_0_1 = torch.tensor([[ 7.8783e-01, 3.6705e-02, 2.5139e+02],

[ 1.6186e-02, 1.0893e+00, -2.7614e+01],

[-4.3304e-04, 7.6681e-04, 1.0000e+00]], device='cuda:0',

dtype=torch.float64)

homography_0_2 = torch.tensor([[ 7.8938e-01, 3.5727e-02, 1.5221e+02],

[ 1.8347e-02, 1.0921e+00, -2.8547e+01],

[-4.3172e-04, 7.7596e-04, 1.0000e+00]], device='cuda:0',

dtype=torch.float64)

transform_h1_h2 = torch.linalg.inv(homography_0_1).matmul(

homography_0_2).type(torch.float32).view(1, 3, 3)

homography_warper_1_2 = HomographyWarper(height, width, padding_mode='zeros', normalized_coordinates=True)

warped_image_torch = homography_warper_1_2(image_reshaped, transform_h1_h2)

warped_image_1_2_cv = cv2.warpPerspective(

image_cv,

transform_h1_h2.cpu().numpy().squeeze(),

dsize=(width, height),

borderMode=cv2.BORDER_REFLECT101,

)

cv2.namedWindow("original image")

cv2.imshow("original image", image_cv)

cv2.imshow("OpenCV warp", warped_image_1_2_cv)

cv2.imshow("Korni warp", warped_image_torch.squeeze().permute(1, 2, 0).cpu().numpy())

cv2.waitKey(0)

cv2.destroyAllWindows()

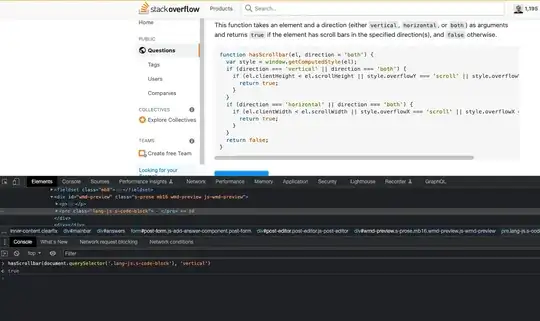

With the code above, I get the following output:

With normalized_coordinates=False, I get the following output:

Apparently the homography transformation is applied differently. I would love to know the difference.