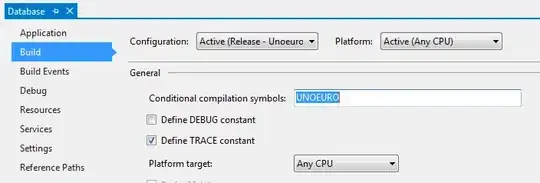

My service consumes from a topic with 10 consumers. Unmanaged memory starts growing as soon as a new batch of messages is added to the cluster topic (picture 1), and it keeps growing even after a long period of inactivity.

After sending 500k messages to the topic and starting the service, I saw the following:

By changing the following parameters, I determined that the growth is related to the consumer's local queue:

QueuedMinMessages - Minimum number of messages per topic+partition librdkafka tries to maintain in the local consumer queue. (Default: 100000; My value: 100)

QueuedMaxMessagesKbytes - Maximum number of kilobytes of queued pre-fetched messages in the local consumer queue. If using the high-level consumer this setting applies to the single consumer queue, regardless of the number of partitions. When using the legacy simple consumer or when separate partition queues are used this setting applies per partition. This value may be overshot by fetch.message.max.bytes. This property has higher priority than queued.min.messages. (Default: 65536; My value: 30000)
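As a rough sanity check of what these limits should allow, here is a minimal sketch of the worst-case pre-fetch footprint. It assumes that each of the 10 consumers keeps its own local queue and that QueuedMaxMessagesKbytes is the binding limit; the helper name is hypothetical and not part of Confluent.Kafka.

```csharp
using System;

public static class PrefetchEstimate
{
    // Hypothetical helper, not part of Confluent.Kafka.
    // Assumption: every consumer instance owns one local queue that is
    // capped at queuedMaxMessagesKbytes kilobytes.
    public static long MaxQueuedKbytes(int consumers, int queuedMaxMessagesKbytes)
        => (long)consumers * queuedMaxMessagesKbytes;

    public static void Main()
    {
        const int consumers = 10;

        // Default limit (65536 KB per consumer).
        Console.WriteLine(MaxQueuedKbytes(consumers, 65536)); // prints 655360

        // My value (30000 KB per consumer).
        Console.WriteLine(MaxQueuedKbytes(consumers, 30000)); // prints 300000
    }
}
```

Under these assumptions the queues alone should level off at roughly 640 MB with the default and roughly 300 MB with my value (plus a possible overshoot of up to fetch.message.max.bytes), so I would expect a plateau rather than unbounded growth.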

After changing these parameters and restarting the service (500k messages remained in the topic):

Reducing these values only increased the time it takes for memory to fill up; it did not fix the leak. For some reason, the local Kafka queue is not being cleared of processed messages.

Consumer code:

    private async Task StartConsumer(CancellationToken stoppingToken)
    {
        try
        {
            using (var consumer = new ConsumerBuilder<string, string>(_consumerConfig)
                .SetErrorHandler((_, e) => _logger.LogError($"Error: {e.Reason}"))
                .Build())
            {
                consumer.Subscribe(_topicName);

                while (!stoppingToken.IsCancellationRequested)
                {
                    ConsumeResult<string, string> result = null;
                    try
                    {
                        // Blocks until a message is available.
                        result = consumer.Consume();
                        if (result == null) continue;

                        var message = result.Message.Value;
                        Console.WriteLine($"Consumed message '{message}' at '{result.TopicPartitionOffset}'");

                        if (message != null)
                        {
                            T deserializedMessage = JsonConvert.DeserializeObject<T>(message);
                            if (deserializedMessage != null)
                            {
                                var handler = await _managerFactory.CreateHandler(_topicName);
                                await handler.HandleAsync(deserializedMessage, _topicName);
                            }
                        }
                        else
                        {
                            _logger.LogInformation("Processed empty message from Kafka");
                        }

                        _logger.LogInformation("Processed message from Kafka");

                        // Offsets are committed manually (EnableAutoCommit is false).
                        consumer.Commit(result);
                    }
                    catch (OracleException ex)
                    {
                        _logger.LogError(ex, "OracleException" + '\n' + ex.Message + '\n' + ex.InnerException);
                        ProcessFailureMessage(result.Message);
                    }
                    catch (ConsumeException ex)
                    {
                        _logger.LogError(ex, "ConsumerException" + '\n' + ex.Message + '\n' + ex.InnerException);
                    }
                    catch (Exception ex)
                    {
                        _logger.LogError(ex, "Exception" + '\n' + ex.Message + '\n' + ex.InnerException);
                    }
                }
            }
        }
        catch (Exception ex)
        {
            _logger.LogError(ex, "Kafka connection error");
        }
    }

Consumer config:

    "RequestTimeoutMs": 60000,
    "TransactionTimeoutMs": 300000,
    "SessionTimeoutMs": 300000,
    "EnableAutoCommit": false,
    "QueuedMinMessages": 100,
    "QueuedMaxMessagesKbytes": 30000,
    "AutoOffsetReset": "Earliest",
    "AllowAutoCreateTopics": true,
    "PartitionAssignmentStrategy": "RoundRobin"

confluent-kafka-dotnet version 1.9.3

UPD 1: StartConsumer() is started as a long-running task, once per consumer:

    protected override Task ExecuteAsync(CancellationToken stoppingToken)
    {
        for (int i = 0; i < _consumersCount; i++)
        {
            Task.Factory.StartNew(() => StartConsumer(stoppingToken),
                stoppingToken, TaskCreationOptions.LongRunning, TaskScheduler.Default);
        }

        return Task.CompletedTask;
    }