In the example code below, I am trying to create case class objects with default values using runtime Scala reflection (required for my use case)!

First Approach

- Define default values for case class fields

- Create objects at runtime

Second Approach

- Create a case class object in the companion object

- Fetch that object using reflection

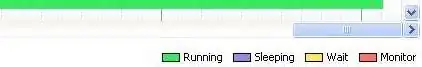

At first glance, the second approach seemed better because the object is created only once. After profiling both approaches, however, the second does not seem to add much value, even though sampling confirms that only one object is indeed created over the whole runtime of the application. It looks as if objects are still being created on every call when using reflection (correct me if I am wrong).
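To separate the cost of the case class instances from the cost of reflection itself, here is a minimal sketch of the two approaches without any reflection. The wrapper `IdentityDemo` and the public `default` are assumptions made for illustration only; in the actual code `default` is private and is reached through reflection.

```scala
object IdentityDemo {
  case class XYZ(str: String = "Shivam")
  // Assumption for this sketch: `default` is public so it can be read directly.
  object XYZ { val default: XYZ = XYZ() }

  // First approach: every call to apply allocates a fresh instance.
  val freshA = XYZ()
  val freshB = XYZ()

  // Second approach: the companion's val is initialized once and then reused.
  val sharedA = XYZ.default
  val sharedB = XYZ.default
}
```

Here `freshA` and `freshB` are equal but distinct instances, while `sharedA` and `sharedB` are the same reference. If the profile still shows many allocations with the second approach, they probably come from the reflective lookups rather than from `XYZ` itself, though that is a guess and not something measured here.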

    object TestDefault extends App {

      case class XYZ(str: String = "Shivam")
      object XYZ { private val default: XYZ = XYZ() }

      case class ABC(int: Int = 99)
      object ABC { private val default: ABC = ABC() }

      def newDefault[A](implicit t: reflect.ClassTag[A]): A = {
        import reflect.runtime.{universe => ru}
        import reflect.runtime.{currentMirror => cm}

        val clazz = cm.classSymbol(t.runtimeClass)
        val mod = clazz.companion.asModule
        val im = cm.reflect(cm.reflectModule(mod).instance)
        val ts = im.symbol.typeSignature
        val mApply = ts.member(ru.TermName("apply")).asMethod
        val syms = mApply.paramLists.flatten
        val args = syms.zipWithIndex.map {
          case (p, i) =>
            val mDef = ts.member(ru.TermName(s"apply$$default$$${i + 1}")).asMethod
            im.reflectMethod(mDef)()
        }
        im.reflectMethod(mApply)(args: _*).asInstanceOf[A]
      }

      for (i <- 0 to 1000000000)
        newDefault[XYZ]

      // println(s"newDefault XYZ = ${newDefault[XYZ]}")
      // println(s"newDefault ABC = ${newDefault[ABC]}")

      def newDefault2[A](implicit t: reflect.ClassTag[A]): A = {
        import reflect.runtime.{currentMirror => cm}

        val clazz = cm.classSymbol(t.runtimeClass)
        val mod = clazz.companion.asModule
        val im = cm.reflect(cm.reflectModule(mod).instance)
        val ts = im.symbol.typeSignature
        val defaultMember = ts.members.filter(_.isMethod).filter(d => d.name.toString == "default").head.asMethod
        val result = im.reflectMethod(defaultMember).apply()
        result.asInstanceOf[A]
      }

      for (i <- 0 to 1000000000)
        newDefault2[XYZ]
    }

Is there any way to reduce the memory footprint? Any other better approach to achieve the same?

P.S. If you are trying to run this app, comment out one of the following loops at a time:

    for (i <- 0 to 1000000000)
      newDefault[XYZ]

    for (i <- 0 to 1000000000)
      newDefault2[XYZ]

EDIT

As per @Levi Ramsey's suggestion, I did try memoization but it seems to only make a small difference!

    import java.util.concurrent.ConcurrentHashMap
    import scala.reflect.runtime.universe

    val cache = new ConcurrentHashMap[universe.Type, XYZ]()

    def newDefault2[A](implicit t: reflect.ClassTag[A]): A = {
      import reflect.runtime.{currentMirror => cm}

      val clazz = cm.classSymbol(t.runtimeClass)
      val mod = clazz.companion.asModule
      val im = cm.reflect(cm.reflectModule(mod).instance)
      val ts = im.symbol.typeSignature
      // containsKey, not contains: ConcurrentHashMap.contains is a legacy method that tests values
      if (!cache.containsKey(ts)) {
        val default = ts.members.filter(_.isMethod).filter(d => d.name.toString == "default").head.asMethod
        cache.put(ts, im.reflectMethod(default).apply().asInstanceOf[XYZ])
      }
      cache.get(ts).asInstanceOf[A]
    }

    for (i <- 0 to 1000000000)
      newDefault2[XYZ]
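A memoized variant can only pay off if the cache lookup happens before any reflective work; in `newDefault2` above, the mirror and symbol lookups still run on every call, so the cache saves only the final method invocation. A minimal sketch of the alternative, keyed by the runtime `Class` from the `ClassTag`, is shown here. `DefaultsCache`, `DefaultsCacheDemo`, and the `build` parameter are hypothetical names for illustration, and the builder in the demo is a stand-in for the reflective construction, not the reflective code itself.

```scala
import java.util.concurrent.ConcurrentHashMap
import java.util.concurrent.atomic.AtomicInteger
import scala.reflect.ClassTag

case class XYZ(str: String = "Shivam")

// Hypothetical helper: `build` is the expensive construction (for example a
// reflective one) and is only invoked when the class is not cached yet.
final class DefaultsCache(build: Class[_] => AnyRef) {
  private val cache = new ConcurrentHashMap[Class[_], AnyRef]()

  def get[A](implicit t: ClassTag[A]): A = {
    val key = t.runtimeClass
    // Fast path: a plain map lookup, no mirrors or symbols involved.
    val hit = cache.get(key)
    if (hit != null) hit.asInstanceOf[A]
    else {
      // Slow path: run the builder and keep whichever value was stored first.
      val built = build(key)
      val prev = cache.putIfAbsent(key, built)
      (if (prev != null) prev else built).asInstanceOf[A]
    }
  }
}

object DefaultsCacheDemo {
  // Counts how often the expensive builder actually runs.
  val builds = new AtomicInteger(0)

  // Stand-in builder (assumption): real code would do the reflective lookup here.
  val defaults = new DefaultsCache({ _ =>
    builds.incrementAndGet()
    XYZ()
  })
}
```

With this shape, repeated calls to `DefaultsCacheDemo.defaults.get[XYZ]` return the same instance and the builder runs once per class in the single-threaded case. Under concurrent first access the builder may run more than once, but only one result is kept.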