Everyday I try to delete and re-create the dynamoDB table, the code successfully deletes the table, but the other job fails to transfer data from Redshift to DynamoDB.

The first AWS Glue job, I try to delete the table, then re-create it:

import boto3

table_name = 'VENDOR_INFO_GLUE'

client = boto3.client('dynamodb')

response = client.delete_table(

TableName=table_name

)

waiter = client.get_waiter('table_not_exists')

waiter.wait(TableName= table_name)

print ("table deleted")

response = client.create_table(

AttributeDefinitions=[

{

'AttributeName': 'vendor_code',

'AttributeType': 'S'

},

{

'AttributeName': 'uuid',

'AttributeType': 'S'

},

{

'AttributeName': 'owning_buyer',

'AttributeType': 'S'

},

],

TableName= table_name,

KeySchema=[

{

'AttributeName': 'vendor_code',

'KeyType': 'HASH'

},

{

'AttributeName': 'uuid',

'KeyType': 'RANGE'

},

],

GlobalSecondaryIndexes=[

{

'IndexName': 'owning_buyer-index',

'KeySchema': [

{

'AttributeName': 'owning_buyer',

'KeyType': 'HASH'

},

],

'Projection': {

'ProjectionType': 'ALL'

}

},

],

BillingMode='PAY_PER_REQUEST'

)

waiter = client.get_waiter('table_exists')

waiter.wait(TableName= table_name)

print ("table created")

This job works fine, but then the other job works:

The second job actually doesn't receive errors, but there's no data in Dynamodb Table. I'm not sure if it's related to write.percent, or the time interval between two jobs. I tried to run the jobs where at least there is one hour time interval between two. But I'm still not sure why this is happening.

One another thing I noticed, when I re-create the second job manually again, the second job runs and there's data in dynamodb. But I'm not sure if it's related to my situation.

import sys

from awsglue.transforms import *

from awsglue.utils import getResolvedOptions

from pyspark.context import SparkContext

from awsglue.context import GlueContext

from awsglue.job import Job

from awsglue import DynamicFrame

def sparkSqlQuery(glueContext, query, mapping, transformation_ctx) -> DynamicFrame:

for alias, frame in mapping.items():

frame.toDF().createOrReplaceTempView(alias)

result = spark.sql(query)

return DynamicFrame.fromDF(result, glueContext, transformation_ctx)

args = getResolvedOptions(sys.argv, ["JOB_NAME"])

sc = SparkContext()

glueContext = GlueContext(sc)

spark = glueContext.spark_session

job = Job(glueContext)

job.init(args["JOB_NAME"], args)

# Script generated for node Redshift Cluster

RedshiftCluster_node1 = glueContext.create_dynamic_frame.from_catalog(

database="bba",

redshift_tmp_dir=args["TempDir"],

table_name="ddl_tr_vendors",

transformation_ctx="RedshiftCluster_node1",

)

# Script generated for node SQL

SqlQuery0 = """

select * from vendors

WHERE snapshot_day = (SELECT MAX(snapshot_day) FROM vendors)

"""

SQL_node1660806425146 = sparkSqlQuery(

glueContext,

query=SqlQuery0,

mapping={"tr_vendors": RedshiftCluster_node1},

transformation_ctx="SQL_node1660806425146",

)

# Script generated for node ApplyMapping

ApplyMapping_node2 = ApplyMapping.apply(

frame=SQL_node1660806425146,

mappings=[

("owning_buyer", "string", "owning_buyer", "string"),

("vendor_code", "string", "vendor_code", "string"),

("is_merchandising_vendor", "string", "is_merchandising_vendor", "string"),

("week", "int", "week", "int"),

("year", "int", "year", "int"),

(

"is_merchandise_ordering_active",

"string",

"is_merchandise_ordering_active",

"string",

),

],

transformation_ctx="ApplyMapping_node2",

)

# Script generated for node DynamoDB bucket

Datasink1 = glueContext.write_dynamic_frame_from_options(

frame=ApplyMapping_node2,

connection_type="dynamodb",

connection_options={

"dynamodb.output.tableName": "VENDOR_INFO_GLUE",

"dynamodb.throughput.write.percent": "1.0"

}

)

job.commit()

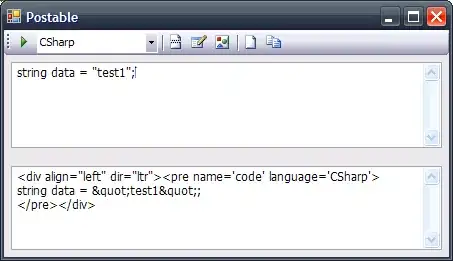

And this is read usage metric when the second job works: