SHORT VERSION: The performance of the memcpy that gets pulled in from the GNU Arm toolchain seems to vary wildly on an ARM Cortex-M7 for different copy sizes, even though the code that copies the data always stays the same. What could be the cause of this?

LONG VERSION:

I am part of a team developing on an STM32F765 microcontroller with the GNU Arm toolchain 11.2, linking the newlib-nano implementation of the C standard library into our code.

Recently, memcpy performance became a bottleneck in our project, and we discovered that the memcpy implementation pulled into our code from newlib-nano is a simple byte-wise copy. In hindsight this should not have been surprising, given that newlib-nano is optimized for code size (compiled with -Os).

Looking at the source code of the cygwin-newlib, I've managed to track down the exact memcpy implementation that gets compiled and packaged with the nano library for ARMv7m:

    void *
    __inhibit_loop_to_libcall
    memcpy (void *__restrict dst0,
            const void *__restrict src0,
            size_t len0)
    {
    #if defined(PREFER_SIZE_OVER_SPEED) || defined(__OPTIMIZE_SIZE__)
      char *dst = (char *) dst0;
      char *src = (char *) src0;

      void *save = dst0;

      while (len0--)
        {
          *dst++ = *src++;
        }

      return save;
    #else
      (...)
    #endif
    }

We have decided to replace the newlib-nano memcpy implementation in our code with our own memcpy implementation, while sticking to newlib-nano for other reasons. In the process, we decided to get some performance metrics to compare the new implementation with the old one.

However, making sense of the obtained metrics proved to be a challenge for me.

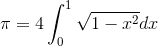

All the results in the table are cycle counts, obtained by reading DWT->CYCCNT (more details on the actual measurement setup are given below).

The table compares three memcpy implementations. The first is the default one that gets linked from the newlib-nano library, labelled memcpy_nano. The second and third are the most naive, dumbest data copy implementations in C: one copies the data byte by byte, and the other word by word:

    void *
    memcpy_naive_bytewise(void *restrict dest, void *restrict src, size_t size)
    {
        uint8_t *restrict u8_src = src,
                *restrict u8_dest = dest;

        /* Copy one byte per iteration. */
        for (size_t idx = 0; idx < size; idx++) {
            *u8_dest++ = *u8_src++;
        }

        return dest;
    }

    void *
    memcpy_naive_wordwise(void *restrict dest, void *restrict src, size_t size)
    {
        uintptr_t upt_dest = (uintptr_t)dest;

        uint8_t *restrict u8_dest = dest,
                *restrict u8_src = src;

        /* Prologue: meant to copy leading bytes until dest is word-aligned.
         * Note: '!' is a logical NOT, so with a nonzero ALIGN_MASK the
         * condition is always 0 and this loop is skipped. */
        while (upt_dest++ & !ALIGN_MASK) {
            *u8_dest++ = *u8_src++;
            size--;
        }

        word *restrict word_dest = (void *)u8_dest,
             *restrict word_src = (void *)u8_src;

        /* Bulk: copy one word per iteration. */
        while (size >= sizeof *word_dest) {
            *word_dest++ = *word_src++;
            size -= sizeof *word_dest;
        }

        u8_dest = (void *)word_dest;
        u8_src = (void *)word_src;

        /* Epilogue: copy the remaining bytes. */
        while (size--) {
            *u8_dest++ = *u8_src++;
        }

        return dest;
    }
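For reference, here is a minimal sketch of how the word-wise variant could look with an alignment prologue that actually runs. It is not the code that was measured. It assumes that ALIGN_MASK was meant to be sizeof(word) - 1 and that word is a 32-bit type; `word_t`, `WORD_ALIGN_MASK` and `memcpy_wordwise_sketch` are hypothetical names introduced only for this illustration, and the `may_alias` attribute is a GCC/Clang extension.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for the post's `word` and ALIGN_MASK, whose
 * definitions are not shown. may_alias is a GCC/Clang extension that lets
 * this type legally access memory declared with another type. */
typedef uint32_t __attribute__((__may_alias__)) word_t;
#define WORD_ALIGN_MASK ((uintptr_t)(sizeof(word_t) - 1))

void *memcpy_wordwise_sketch(void *restrict dest, const void *restrict src,
                             size_t size)
{
    uint8_t *d = dest;
    const uint8_t *s = src;

    /* Word accesses are used only when both pointers share the same
     * misalignment; otherwise the byte loop at the end copies everything. */
    if ((((uintptr_t)d ^ (uintptr_t)s) & WORD_ALIGN_MASK) == 0) {
        /* Prologue: byte copies until dest (and therefore src) is aligned. */
        while (size > 0 && ((uintptr_t)d & WORD_ALIGN_MASK) != 0) {
            *d++ = *s++;
            size--;
        }

        /* Bulk: one word per iteration. */
        word_t *wd = (word_t *)d;
        const word_t *ws = (const word_t *)s;
        while (size >= sizeof(word_t)) {
            *wd++ = *ws++;
            size -= sizeof(word_t);
        }
        d = (uint8_t *)wd;
        s = (const uint8_t *)ws;
    }

    /* Epilogue: remaining bytes. */
    while (size--) {
        *d++ = *s++;
    }
    return dest;
}
```

Since the buffers in my measurements are always aligned, the prologue should not matter for the numbers in the table; the sketch only shows what the alignment check was presumably meant to do.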

For the life of me, I cannot figure out why the performance of memcpy_nano resembles that of the naive word-wise copy at first (up to 256-byte copies), only to start resembling that of the naive byte-wise copy from 256-byte copies upwards.

I have triple-checked that the expected memcpy implementation is indeed linked with my code for every copy size that was measured. For example, this is the memcpy disassembly from the code measuring a 16-byte copy versus a 256-byte copy (where the discrepancy first arises):

- memcpy definition linked for the 16 byte-sized copy (newlib-nano memcpy):

        08007a74 <memcpy>:
         8007a74:  440a       add     r2, r1
         8007a76:  4291       cmp     r1, r2
         8007a78:  f100 33ff  add.w   r3, r0, #4294967295
         8007a7c:  d100       bne.n   8007a80 <memcpy+0xc>
         8007a7e:  4770       bx      lr
         8007a80:  b510       push    {r4, lr}
         8007a82:  f811 4b01  ldrb.w  r4, [r1], #1
         8007a86:  f803 4f01  strb.w  r4, [r3, #1]!
         8007a8a:  4291       cmp     r1, r2
         8007a8c:  d1f9       bne.n   8007a82 <memcpy+0xe>
         8007a8e:  bd10       pop     {r4, pc}

- memcpy definition linked for the 256 byte-sized copy (newlib-nano memcpy):

        08007a88 <memcpy>:
         8007a88:  440a       add     r2, r1
         8007a8a:  4291       cmp     r1, r2
         8007a8c:  f100 33ff  add.w   r3, r0, #4294967295
         8007a90:  d100       bne.n   8007a94 <memcpy+0xc>
         8007a92:  4770       bx      lr
         8007a94:  b510       push    {r4, lr}
         8007a96:  f811 4b01  ldrb.w  r4, [r1], #1
         8007a9a:  f803 4f01  strb.w  r4, [r3, #1]!
         8007a9e:  4291       cmp     r1, r2
         8007aa0:  d1f9       bne.n   8007a96 <memcpy+0xe>
         8007aa2:  bd10       pop     {r4, pc}

As you can see, apart from the address at which the function is placed (0x08007a74 vs. 0x08007a88), the copy logic is identical.

Measurement setup:

- Ensure the data and instruction caches are disabled, IRQs are disabled, and the DWT is enabled:

        SCB->CSSELR = (0UL << 1) | 0UL; // Level 1 data cache
        __DSB();

        SCB->CCR &= ~(uint32_t)SCB_CCR_DC_Msk; // disable D-Cache
        __DSB();
        __ISB();

        SCB_DisableICache();

        if(DWT->CTRL & DWT_CTRL_NOCYCCNT_Msk)
        {
            //panic
            while(1);
        }

        /* Enable DWT unit */
        CoreDebug->DEMCR |= CoreDebug_DEMCR_TRCENA_Msk;
        __DSB();

        /* Unlock DWT registers */
        DWT->LAR = 0xC5ACCE55;
        __DSB();

        /* Reset CYCCNT */
        DWT->CYCCNT = 0;

        /* Enable CYCCNT */
        DWT->CTRL |= DWT_CTRL_CYCCNTENA_Msk;

        __disable_irq();
        __DSB();
        __ISB();

- Link a single memcpy version under test into the code, together with a single copy size. Compile the code with -O0. Then measure the execution time as follows (note: the addresses of au8_dst and au8_src are always aligned):

        uint8_t volatile au8_dst[MAX_BYTE_SIZE];
        uint8_t volatile au8_src[MAX_BYTE_SIZE];

        __DSB();
        __ISB();

        u32_cyccntStart = DWT->CYCCNT;

        __DSB();
        __ISB();

        memcpy(au8_dst, au8_src, u32_size);

        __DSB();
        __ISB();

        u32_cyccntEnd = DWT->CYCCNT;

        __DSB();
        __ISB();

        *u32_cyccnt = u32_cyccntEnd - u32_cyccntStart;

- Repeat this procedure for every combination of copy size and memcpy version.
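To make the arithmetic behind the table explicit, here is a minimal host-side sketch of how a pair of CYCCNT samples can be turned into an elapsed cycle count and a cost per byte. The helper names `cyccnt_elapsed` and `centicycles_per_byte` are hypothetical and not part of my measurement code; the only thing taken from the setup above is the end-minus-start subtraction on 32-bit values.

```c
#include <stdint.h>

/* Elapsed cycles between two 32-bit counter samples. Unsigned subtraction
 * is performed modulo 2^32, so the result stays correct even if the counter
 * wrapped once between the two reads. */
static uint32_t cyccnt_elapsed(uint32_t start, uint32_t end)
{
    return end - start;
}

/* Cost per copied byte in hundredths of a cycle (fixed point, so no
 * floating point is needed on the target). Returns 0 for a zero-size copy. */
static uint32_t centicycles_per_byte(uint32_t cycles, uint32_t size)
{
    if (size == 0) {
        return 0;
    }
    return (uint32_t)(((uint64_t)cycles * 100u) / size);
}
```

Comparing the per-byte cost across copy sizes, rather than the raw cycle counts, should make it easier to see at which size an implementation changes regime.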

Main question: How is it possible for the execution time of the newlib-nano memcpy to follow that of a naive word-wise copy up to a copy size of 256 bytes, after which it performs similarly to a naive byte-wise copy? Please keep in mind that the definition of the newlib-nano memcpy that gets pulled into the code is the same for every measured copy size, as demonstrated by the disassembly above. Is my measurement setup flawed in some obvious way that I have failed to recognize?

Any thoughts on this would be highly, highly appreciated!