I want to use pre-trained YOLOv5 layer weights as part of a multi-task network. To do this, I saved the state_dict, loaded my custom model and overwrote its object detection layers (see below). Simply calling `model.load_state_dict` does not work because my layers are named differently. For inference I use a custom detect module.

Problem: Although I use exactly the same weights and input, the results are very different: https://i.stack.imgur.com/T0b8q.jpg

I compared intermediate results from several layers and noticed that they drift further apart the deeper the data move through the network. It looks like a rounding error, but both models and the input are float32.
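A convolution is a long sum of products, and float32 addition is not associative. Two implementations (or two cuDNN algorithms picked by different torch versions) that accumulate the same terms in a different order can therefore round differently, even with identical weights and inputs. Below is a minimal pure-Python sketch of the effect that emulates float32 with `struct`. It only illustrates the mechanism and does not show that this is what happens in the network here:

```python
import struct


def f32(x: float) -> float:
    """Round a Python float (float64) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def add_f32(a: float, b: float) -> float:
    """float32 addition: sum in float64, then round to float32.

    For two float32 operands this equals a correctly rounded float32 add.
    """
    return f32(f32(a) + f32(b))


def sum_f32(values):
    """Accumulate left to right, rounding to float32 after every step."""
    acc = 0.0
    for v in values:
        acc = add_f32(acc, v)
    return acc


# Same four numbers, two accumulation orders.
order_a = [1e8, 1.0, -1e8, 1.0]  # 1e8 + 1 rounds back to 1e8 in float32
order_b = [1e8, -1e8, 1.0, 1.0]  # the large terms cancel first
print(sum_f32(order_a), sum_f32(order_b))  # 1.0 2.0
```

In float64 both orders give 2.0. The float32 spacing near 1e8 is 8, so adding 1.0 to 1e8 is lost entirely in one order but not in the other.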

This is how I saved the original state_dict, in `detect.py` of the yolov5 project, just before the inference part:

```python
torch.save(model.model.state_dict(), 'yolov5m_state_dict.pt')
```

In my own project, which uses a different environment, I define my model as follows (the other task heads have been omitted):

```python
import torch
import torch.nn as nn

# YOLOv5 building blocks (assumes the yolov5 repo's modules are importable)
from models.common import C3, SPPF, Conv
from models.yolo import Detect

# Default YOLOv5 P5 anchors, as listed in yolov5m.yaml
anchors = [[10, 13, 16, 30, 33, 23],
           [30, 61, 62, 45, 59, 119],
           [116, 90, 156, 198, 373, 326]]


class YOLOPointMFull(nn.Module):
    def __init__(self, inp_ch=3, nc=80, anchors=anchors):
        super(YOLOPointMFull, self).__init__()
        # CSPNet Backbone
        self.Conv1 = Conv(inp_ch, 48, 6, 2, 2)  # ch_in, ch_out, kernel, stride, padding, groups
        self.Conv2 = Conv(48, 96, 3, 2)
        self.Bottleneck1 = C3(96, 96, 2)  # ch_in, ch_out, number
        self.Conv3 = Conv(96, 192, 3, 2)
        self.Bottleneck2 = C3(192, 192, 4)
        self.Conv4 = Conv(192, 384, 3, 2)
        self.Bottleneck3 = C3(384, 384, 6)
        self.Conv5 = Conv(384, 768, 3, 2)
        self.Bottleneck4 = C3(768, 768, 2)
        self.SPPooling = SPPF(768, 768, 5)

        # Object Detector Head
        self.Conv6 = Conv(768, 384, 1, 1, 0)
        # ups, cat
        self.Bottleneck5 = C3(768, 384, 2)
        self.Conv7 = Conv(384, 192, 1, 1, 0)
        # ups, cat
        self.Bottleneck6 = C3(384, 192, 2)  # --> detect
        self.Conv8 = Conv(192, 192, 3, 2, 1)
        # cat
        self.Bottleneck7 = C3(384, 384, 2)  # --> detect
        self.Conv9 = Conv(384, 384, 3, 2, 1)
        # cat
        self.Bottleneck8 = C3(768, 768, 2)  # --> detect
        self.Detect = Detect(nc, anchors=anchors, ch=(192, 384, 768))
        self.ups = nn.Upsample(scale_factor=(2, 2), mode='nearest')

    def forward(self, x):
        # Backbone
        x = self.Conv1(x)
        x = self.Conv2(x)  # check
        x = self.Bottleneck1(x)
        x = self.Conv3(x)
        xb = self.Bottleneck2(x)
        x = self.Conv4(xb)
        xc = self.Bottleneck3(x)
        x = self.Conv5(xc)
        x = self.Bottleneck4(x)
        x = self.SPPooling(x)

        # Object Detector Head
        xd = self.Conv6(x)
        x = self.ups(xd)
        x = torch.cat((x, xc), dim=1)
        x = self.Bottleneck5(x)
        xe = self.Conv7(x)
        x = self.ups(xe)
        x = torch.cat((x, xb), dim=1)
        xf = self.Bottleneck6(x)
        x = self.Conv8(xf)
        x = torch.cat((x, xe), dim=1)
        xg = self.Bottleneck7(x)
        x = self.Conv9(xg)
        x = torch.cat((x, xd), dim=1)
        x = self.Bottleneck8(x)
        x = self.Detect([xf, xg, x])
        return x
```
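The attribute names in `YOLOPointMFull` correspond one-to-one to the numbered layers in yolov5m.yaml, so an alternative to pairing keys by position is an explicit rename. This is a sketch that assumes the standard YOLOv5 v6.x P5 layout (layers 0 to 24) and that every source key contains the layer index as its first purely numeric component (e.g. `model.13.cv1.conv.weight`). `YOLO_INDEX_TO_NAME` and `rename_key` are hypothetical helpers, not part of either codebase:

```python
# Hypothetical mapping from YOLOv5 layer index (yolov5m.yaml, v6.x layout)
# to the attribute names used in YOLOPointMFull. The Upsample and Concat
# layers (11, 12, 15, 16, 19, 22) have no parameters, so they are absent.
YOLO_INDEX_TO_NAME = {
    "0": "Conv1", "1": "Conv2", "2": "Bottleneck1", "3": "Conv3",
    "4": "Bottleneck2", "5": "Conv4", "6": "Bottleneck3", "7": "Conv5",
    "8": "Bottleneck4", "9": "SPPooling", "10": "Conv6", "13": "Bottleneck5",
    "14": "Conv7", "17": "Bottleneck6", "18": "Conv8", "20": "Bottleneck7",
    "21": "Conv9", "23": "Bottleneck8", "24": "Detect",
}


def rename_key(src_key, index_to_name=YOLO_INDEX_TO_NAME):
    """Map e.g. 'model.13.cv1.conv.weight' -> 'Bottleneck5.cv1.conv.weight'.

    The first purely numeric component of the key is taken as the layer
    index and replaced by the custom name; the rest of the key is kept.
    """
    parts = src_key.split(".")
    for i, part in enumerate(parts):
        if part.isdigit():
            return ".".join([index_to_name[part]] + parts[i + 1:])
    raise KeyError(f"no layer index found in {src_key!r}")


print(rename_key("model.2.m.0.cv1.bn.running_var"))
# Bottleneck1.m.0.cv1.bn.running_var
```

With real tensors this would be used as `model.load_state_dict({rename_key(k): v for k, v in yolo_sd.items()})`. With the default `strict=True`, `load_state_dict` raises on missing or unexpected keys and on shape mismatches instead of skipping them.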

To work around the fact that the two state_dicts have different keys, I overwrote the network's weights with this method:

```python
import torch

yolo_sd = torch.load('yolov5m.pt')

# load model with default anchors, input channels and classes
# (load_model is a helper from my own project)
model = load_model('Model')
yp_full_sd = model.state_dict()


def load_pretrained(target, source):
    print(len(target), len(source))
    # pair keys by position; only copy if the parameter kind and shape match
    for i, (tk, sk) in enumerate(zip(target, source)):
        layer_target = tk.split('.')[-1]
        layer_source = sk.split('.')[-1]
        if layer_source == layer_target and source[sk].shape == target[tk].shape:
            target[tk] = source[sk]
        else:
            print(f'Failed to overwrite {tk}')
    return target


yp_full_sd = load_pretrained(yp_full_sd, yolo_sd)
torch.save({"model_state_dict": yp_full_sd}, 'logs/full_model/yolo_pure.pt')
```
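One caveat with the `zip()`-based pairing in `load_pretrained` is that `zip` stops silently at the shorter state_dict, so a length mismatch only shows up in the printed counts. Below is a sketch of a stricter pairing step. It is written against plain `{key: shape}` dicts so it runs without torch; with real state_dicts you would pass `{k: tuple(v.shape) for k, v in sd.items()}`. `pair_by_order` is a hypothetical helper:

```python
def pair_by_order(target_shapes, source_shapes):
    """Pair target and source keys by position, like zip() in load_pretrained,
    but raise if the counts differ and collect every position where the last
    key component (weight, bias, running_mean, ...) or the shape disagrees.

    Both arguments map key -> shape tuple, in module registration order.
    """
    if len(target_shapes) != len(source_shapes):
        raise ValueError(
            f"{len(target_shapes)} target keys vs {len(source_shapes)} source keys"
        )
    mapping, problems = {}, []
    for tk, sk in zip(target_shapes, source_shapes):
        same_kind = tk.rsplit(".", 1)[-1] == sk.rsplit(".", 1)[-1]
        same_shape = tuple(target_shapes[tk]) == tuple(source_shapes[sk])
        if same_kind and same_shape:
            mapping[tk] = sk
        else:
            problems.append((tk, sk))
    return mapping, problems


target = {"Conv1.conv.weight": (48, 3, 6, 6), "Conv1.bn.weight": (48,)}
source = {"model.0.conv.weight": (48, 3, 6, 6), "model.0.bn.weight": (48,)}
mapping, problems = pair_by_order(target, source)
print(mapping)
print(problems)  # []
```

If `problems` is empty, `model.load_state_dict({tk: yolo_sd[sk] for tk, sk in mapping.items()})` loads the weights with PyTorch's own key and shape checks still active.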

Here are some things that I have already checked / additional info:

- confirmed that the input is exactly the same (RGB, dimensions, float32, pixel values), no further preprocessing

- saved weights in different formats (.pt, .pth.tar) with same result

- the state_dict was from the unfused yolo model (i.e. BN and Conv layers are separate)

- the model is set to eval mode during inference

- both models ran on the same GPU

- the non-maximum suppression parameters are the same

- my conda project environment uses: torch 1.11.0 and pytorch 1.12.1 (why do I have both?), python 3.8.13, numpy 1.23.1, opencv-python 4.5.5.64

- my conda yolov5 environment uses: torch 1.10.0+cu113, python 3.8.0, opencv-python 4.5.4.58
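The two environments use different torch builds (1.10.0+cu113 vs 1.11.0), so it may be worth logging the settings that can change numerics without changing any weights. These include the cuDNN version, `cudnn.benchmark` (autotuned algorithm choice), `cudnn.deterministic`, and whether TF32 is allowed for convolutions and matmuls on Ampere-class GPUs. Here is a small sketch to run in both environments. `numeric_env_report` is a hypothetical helper, and it reports `torch: None` if torch is not installed:

```python
import platform


def numeric_env_report():
    """Collect versions and backend flags that can affect float32 results."""
    report = {"python": platform.python_version()}
    try:
        import torch
    except ImportError:
        report["torch"] = None
        return report
    report["torch"] = torch.__version__
    report["cuda"] = torch.version.cuda  # None for CPU-only builds
    report["cudnn"] = torch.backends.cudnn.version()  # None if unavailable
    report["cudnn.benchmark"] = torch.backends.cudnn.benchmark
    report["cudnn.deterministic"] = torch.backends.cudnn.deterministic
    report["cudnn.allow_tf32"] = torch.backends.cudnn.allow_tf32
    report["matmul.allow_tf32"] = torch.backends.cuda.matmul.allow_tf32
    return report


for key, value in numeric_env_report().items():
    print(f"{key}: {value}")
```

Setting the same flags explicitly in both scripts (e.g. `torch.backends.cudnn.allow_tf32 = False`) before inference is one way to rule them out.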

Edit:

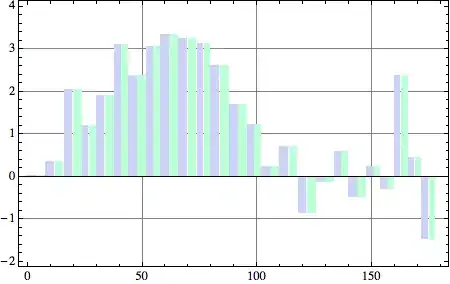

While trying to create a minimal reproducible example with `torch.ones(1, 3, 256, 256, dtype=torch.float)` as input, I was able to narrow down the error: the outputs only begin to diverge after the first C3 module (see the YOLOv5 architecture), or, more precisely, after (both of) the ConvBNSiLU layers of the C3 module.
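The layer-by-layer comparison described above can be automated. The following is a minimal pure-Python sketch. It assumes the activations of both models have already been collected into dicts keyed by the same layer names in forward order (in PyTorch, for example, with a forward hook per module, as outlined in the comment). `first_divergence` and its tolerance are illustrative choices, not part of either codebase:

```python
# Collecting activations in PyTorch could look like this (not run here;
# tensor outputs only):
#   acts = {}
#   for name, mod in model.named_children():
#       mod.register_forward_hook(
#           lambda m, i, o, name=name: acts.__setitem__(name, o.detach().flatten().tolist()))


def mean_abs_diff(a, b):
    """Mean absolute difference of two equally long flat sequences."""
    if len(a) != len(b):
        raise ValueError("activations have different sizes")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def first_divergence(acts_a, acts_b, atol=1e-6):
    """Return (layer_name, mean_abs_diff) for the first layer whose outputs
    differ by more than atol on average, or None if all layers agree.

    acts_a / acts_b map layer name -> flattened output values, in forward
    order, with the same names in both dicts.
    """
    for name in acts_a:
        mad = mean_abs_diff(acts_a[name], acts_b[name])
        if mad > atol:
            return name, mad
    return None


acts_a = {"Conv1": [0.5, 1.0], "Bottleneck1": [2.0, 3.0], "Conv3": [1.0, 1.0]}
acts_b = {"Conv1": [0.5, 1.0], "Bottleneck1": [2.0, 3.0002], "Conv3": [1.1, 1.0]}
print(first_divergence(acts_a, acts_b))  # reports Bottleneck1 first
```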

The mean absolute difference between the tensors where they first diverge is just 9.7185235e-05, which is small enough to be a rounding error.

To double-check, I confirmed that the weights of the Conv layers in both implementations are exactly the same.
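Matching Conv weights (and BN tensors) does not cover everything that determines a ConvBNSiLU output. `nn.BatchNorm2d` also has an `eps` attribute, which is a constructor argument rather than part of `state_dict()`, so loading a state_dict never changes it. YOLOv5's `initialize_weights()` (in `utils/torch_utils.py`) sets `eps = 1e-3` on its BatchNorm layers, whereas a freshly constructed `nn.BatchNorm2d` uses the default 1e-5, so comparing `m.eps` across both models may be worthwhile. Here is a pure-Python sketch of the eval-mode computation for a single value. The input numbers are arbitrary and only show that the effect of `eps` grows as `running_var` shrinks:

```python
import math


def bn_eval(x, mean, var, gamma, beta, eps):
    """BatchNorm in eval mode for one channel, using the stored running stats."""
    return gamma * (x - mean) / math.sqrt(var + eps) + beta


def silu(x):
    """SiLU(x) = x * sigmoid(x), the activation in YOLOv5's Conv block."""
    return x / (1.0 + math.exp(-x))


# Identical stored parameters; only eps differs (1e-5 is the nn.BatchNorm2d
# default, 1e-3 is what YOLOv5's initialize_weights() assigns).
x, gamma, beta, mean = 0.05, 1.0, 0.0, 0.0
for var in (1.0, 1e-3):
    y_default = silu(bn_eval(x, mean, var, gamma, beta, eps=1e-5))
    y_yolo = silu(bn_eval(x, mean, var, gamma, beta, eps=1e-3))
    print(f"running_var={var}: |diff| = {abs(y_default - y_yolo):.2e}")
```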

Below is a screenshot of the top-left corner of the first layer of the output difference, in case it helps: