I have a threading issue under windows.

I am developing a program that runs complex physical simulations for different conditions. Say a condition per hour of the year, would be 8760 simulations. I am grouping those simulations per thread such that each thread runs a for loop of 273 simulations (on average)

I bought an AMD ryzen 9 5950x with 16 cores (32 threads) for this task. On Linux, all the threads seem to be between 98% to 100% usage, while under windows I get this:

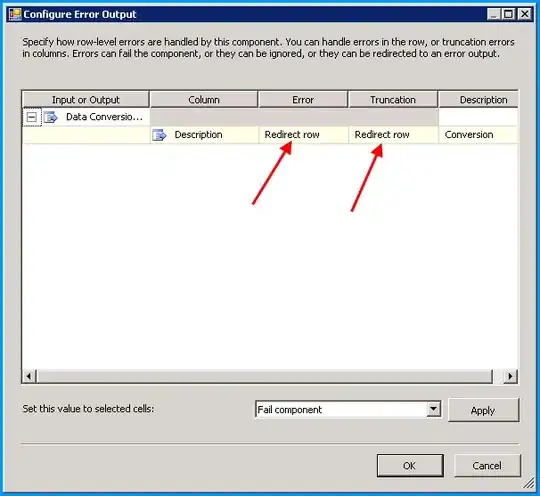

(The first bar is the I/O thread reading data, the smaller bars are the process threads. Red: synchronization, green: process, purple: I/O)

(The first bar is the I/O thread reading data, the smaller bars are the process threads. Red: synchronization, green: process, purple: I/O)

This is from Visual Studio's concurrency visualizer, which tells me that 63% of the time was spent on thread synchronization. As far as I can tell, my code is the same for both the Linux and windows executions.

I made my best to make the objects immutable to avoid issues and that provided a big gain with my old 8-thread intel i7. However with many more threads, this issue arises.

For threading, I have tried a custom parallel for, and the taskflow library. Both perform identically for what I want to do.

Is there something fundamental about windows threads that produces this behaviour?

The custom parallel for code:

/**

* parallel for

* @tparam Index integer type

* @tparam Callable function type

* @param start start index of the loop

* @param end final +1 index of the loop

* @param func function to evaluate

* @param nb_threads number of threads, if zero, it is determined automatically

*/

template<typename Index, typename Callable>

static void ParallelFor(Index start, Index end, Callable func, unsigned nb_threads=0) {

// Estimate number of threads in the pool

if (nb_threads == 0) nb_threads = getThreadNumber();

// Size of a slice for the range functions

Index n = end - start + 1;

Index slice = (Index) std::round(n / static_cast<double> (nb_threads));

slice = std::max(slice, Index(1));

// [Helper] Inner loop

auto launchRange = [&func] (int k1, int k2) {

for (Index k = k1; k < k2; k++) {

func(k);

}

};

// Create pool and launch jobs

std::vector<std::thread> pool;

pool.reserve(nb_threads);

Index i1 = start;

Index i2 = std::min(start + slice, end);

for (unsigned i = 0; i + 1 < nb_threads && i1 < end; ++i) {

pool.emplace_back(launchRange, i1, i2);

i1 = i2;

i2 = std::min(i2 + slice, end);

}

if (i1 < end) {

pool.emplace_back(launchRange, i1, end);

}

// Wait for jobs to finish

for (std::thread &t : pool) {

if (t.joinable()) {

t.join();

}

}

}

A complete C++ project illustrating the issue is uploaded here

Main.cpp:

//

// Created by santi on 26/08/2022.

//

#include "input_data.h"

#include "output_data.h"

#include "random.h"

#include "par_for.h"

void fillA(Matrix& A){

Random rnd;

rnd.setTimeBasedSeed();

for(int i=0; i < A.getRows(); ++i)

for(int j=0; j < A.getRows(); ++j)

A(i, j) = (int) rnd.randInt(0, 1000);

}

void worker(const InputData& input_data,

OutputData& output_data,

const std::vector<int>& time_indices,

int thread_index){

std::cout << "Thread " << thread_index << " [" << time_indices[0]<< ", " << time_indices[time_indices.size() - 1] << "]\n";

for(const int& t: time_indices){

Matrix b = input_data.getAt(t);

Matrix A(input_data.getDim(), input_data.getDim());

fillA(A);

Matrix x = A * b;

output_data.setAt(t, x);

}

}

void process(int time_steps, int dim, int n_threads){

InputData input_data(time_steps, dim);

OutputData output_data(time_steps, dim);

// correct the number of threads

if ( n_threads < 1 ) { n_threads = ( int )getThreadNumber( ); }

// generate indices

std::vector<int> time_indices = arrange<int>(time_steps);

// compute the split of indices per core

std::vector<ParallelChunkData<int>> chunks = prepareParallelChunks(time_indices, n_threads );

// run in parallel

ParallelFor( 0, ( int )chunks.size( ), [ & ]( int k ) {

// run chunk

worker(input_data, output_data, chunks[k].indices, k );

} );

}

int main(){

process(8760, 5000, 0);

return 0;

}