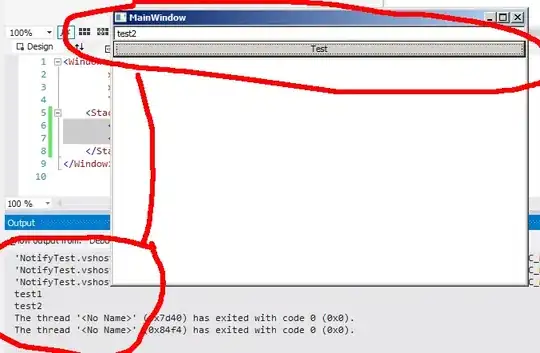

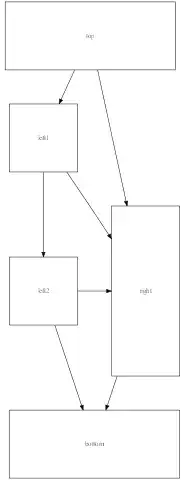

I have a dataset of aligned objects and would like to augment it by applying random rotations about the center of each object. The images show the rotation (left: original; right: image rotated around the point (xc, yc)). For the rotation, I have used the following logic:

import cv2

import numpy as np

import random

image_source = cv2.imread('sample.png')

height, width = image_source.shape[:2]

random_angle = random.uniform(90, 90) # degenerate range: this always yields 90 degrees

yolo_annotation_sample = get_annotation() # this function retrieves yolo annotation

label_id, xc, yc, object_width, object_height = yolo_annotation_sample # e.g. 4, 0.0189, 0.25, 0.0146, 0.00146

center_x = width * xc

center_y = height * yc

left = center_x - (width * object_width) / 2

top = center_y - (height * object_height) / 2

right = left + width * object_width

bottom = top + height * object_height

M = cv2.getRotationMatrix2D((center_x, center_y), random_angle, 1.0) # 2x3 affine matrix, rotation about the object center

image_rotated = cv2.warpAffine(image_source, M, (width, height))

# logic for calculating new point position (doesn't work)

x1_y1 = np.asarray([[left, top]])

x1_y1_new = np.dot(x1_y1, M)

x2_y2 = np.asarray([[right, top]])

x2_y2_new = np.dot(x2_y2, M)

x3_y3 = np.asarray([[right, bottom]])

x3_y3_new = np.dot(x3_y3, M)

x4_y4 = np.asarray([[left, bottom]])

x4_y4_new = np.dot(x4_y4, M)

Does anyone know how to recalculate the corner points after rotating around an arbitrary point, as shown above?
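For reference, here is a minimal sketch of one way the corners could be mapped, offered under stated assumptions rather than as a definitive fix. `cv2.getRotationMatrix2D` returns a 2x3 affine matrix, so a point has to be treated as the column vector `[x, y, 1]` and multiplied from the left by `M`. The `np.dot(point, M)` calls above multiply a 1x2 row by the 2x3 matrix, which runs without error but gives a 1x3 result that is not a rotated point. The helper names `rotation_matrix`, `transform_point` and `rotate_box` are hypothetical. `rotation_matrix` only rebuilds the matrix from the formula in the OpenCV documentation so that the sketch runs without OpenCV; the `M` already computed above can be passed in instead.

```python
import math


def rotation_matrix(center, angle_deg, scale=1.0):
    """Build the 2x3 matrix documented for cv2.getRotationMatrix2D (hypothetical stand-in)."""
    cx, cy = center
    alpha = scale * math.cos(math.radians(angle_deg))
    beta = scale * math.sin(math.radians(angle_deg))
    return [
        [alpha, beta, (1 - alpha) * cx - beta * cy],
        [-beta, alpha, beta * cx + (1 - alpha) * cy],
    ]


def transform_point(M, x, y):
    """Apply a 2x3 affine matrix to (x, y), treated as the column vector [x, y, 1]."""
    new_x = M[0][0] * x + M[0][1] * y + M[0][2]
    new_y = M[1][0] * x + M[1][1] * y + M[1][2]
    return new_x, new_y


def rotate_box(M, left, top, right, bottom):
    """Rotate the four corners; return them and their axis-aligned bounds."""
    corners = [(left, top), (right, top), (right, bottom), (left, bottom)]
    rotated = [transform_point(M, x, y) for x, y in corners]
    xs = [p[0] for p in rotated]
    ys = [p[1] for p in rotated]
    return rotated, (min(xs), min(ys), max(xs), max(ys))


# Example: a 40x20 pixel box centered at (100, 50), rotated by 90 degrees
# about its own center. The bounds come out as approximately (90, 30, 110, 70).
M = rotation_matrix((100.0, 50.0), 90.0)
rotated_corners, bounds = rotate_box(M, 80.0, 40.0, 120.0, 60.0)
print(rotated_corners)
print(bounds)
```

Because YOLO boxes are axis-aligned, the rotated corners would then need to be enclosed in a new axis-aligned box (the `bounds` tuple) and normalized again by the image width and height. For angles that are not multiples of 90 degrees, this enclosing box is larger than the object itself.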