I have a linear regression (LR) pipeline that I train on a dataset and then save. During training I also test it on X_test, and the predictions look okay. I then save the model with joblib and load it again to run predictions on new data.
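For reference, the save/load round trip described above can be sketched as follows. This is only a minimal illustration on toy data, not the real training job: the toy pipeline, the random data, and the file name `model.joblib` are all assumptions made for the example. It shows that a fitted pipeline reloaded with `joblib.load` should reproduce its predictions when given exactly the same input rows.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Toy stand-in data; the real pipeline and dataset are not reproduced here.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.1, size=50)

toy_pipeline = Pipeline(steps=[
    ("scaler", StandardScaler()),
    ("estimator", LinearRegression()),
])
toy_pipeline.fit(X, y)
preds_before = toy_pipeline.predict(X)

# Save, reload, and predict again on exactly the same rows.
with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = os.path.join(tmp_dir, "model.joblib")  # hypothetical file name
    joblib.dump(toy_pipeline, model_path)
    reloaded = joblib.load(model_path)

preds_after = reloaded.predict(X)
roundtrip_ok = np.allclose(preds_before, preds_after)
print("round trip preserves predictions:", roundtrip_ok)
```

If a check like this passes on identical rows, the serialization itself is probably not the issue, and the difference more likely comes from how the new data is prepared before it reaches the pipeline.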

The predictions on the new data are very large and mostly the same for all rows.

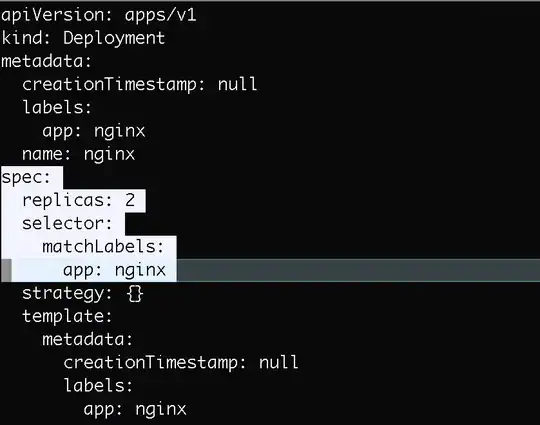

Here is the pipeline:

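    import numpy as np
    from sklearn.compose import ColumnTransformer, make_column_selector
    from sklearn.impute import SimpleImputer
    from sklearn.linear_model import LinearRegression
    from sklearn.pipeline import Pipeline
    from sklearn.preprocessing import OneHotEncoder, StandardScaler

    # columnDropperTransformer, ZeroImputer and positiveTransformer are custom
    # transformers; they and the column lists (id_cols, miss_cols,
    # nearZero_cols, fill_zero_cols) are defined elsewhere and not shown here.

    # Drop ID columns, mostly-missing columns and near-zero-variance columns.
    feature_cleaner = Pipeline(steps=[
        ("id_col_remover", columnDropperTransformer(id_cols)),
        ("missing_remover", columnDropperTransformer(miss_cols)),
        ("nearZero_remover", columnDropperTransformer(nearZero_cols))
    ])

    # Replace NA with zero for selected columns.
    zero_Setter = Pipeline(steps=[
        ("zero_imp", ZeroImputer(fill_zero_cols)),
        ('case_age_month', positiveTransformer(['CASE_AGE_MONTHS']))
    ])

    numeric_transformer = Pipeline(steps=[
        ('imputer', SimpleImputer(strategy="constant", fill_value=-1, add_indicator=True)),
        ('scaler', StandardScaler())
    ])

    categotical_transformer = Pipeline(steps=[
        ('imputer', SimpleImputer(strategy="constant", fill_value='Unknown')),
        ('scaler', OneHotEncoder(handle_unknown='ignore'))
    ])

    # Columns are routed by dtype; anything matching neither selector is dropped.
    preprocess_ppl = ColumnTransformer(
        transformers=[
            ('numeric', numeric_transformer, make_column_selector(dtype_include=np.number)),
            ('categorical', categotical_transformer, make_column_selector(dtype_include='category'))
        ], remainder='drop'
    )

    steps = [
        ('zero_imputer', zero_Setter),
        ('cleaner', feature_cleaner),
        ("preprocessor", preprocess_ppl),
        ("estimator", LinearRegression(n_jobs=-1))
    ]

    pipeline = Pipeline(steps=steps)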

`feature_cleaner` just drops some features, `zero_Setter` replaces NA with zero for some columns, the categorical transformer one-hot encodes the categorical variables in the data, and the numeric transformer handles the numeric columns.

The predictions I make within the same script look okay:

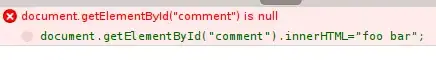

But when I download the joblib file (the training job runs in the cloud) and run predictions on a subset of the data, I get very large, nearly identical predictions like the ones described at the top of this post.

I am not sure why this is happening, since the data goes through the same pipeline during training and scoring.
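One thing that may be worth checking, although it is not confirmed to be the cause here: both `make_column_selector` calls in the pipeline pick columns by dtype, so the same column names can be routed differently if the scoring data is loaded with different dtypes than the training data. For example, a column that was `category` at training time but is `object` at scoring time matches neither selector and falls under `remainder='drop'`. The sketch below compares dtypes between two frames. The helper name `dtype_mismatches`, the `REGION` column, and the toy values are hypothetical and only serve the illustration.

```python
import pandas as pd


def dtype_mismatches(train_df: pd.DataFrame, score_df: pd.DataFrame) -> pd.DataFrame:
    """Return columns whose dtype differs, or that exist in only one frame."""
    train_types = train_df.dtypes.astype(str).rename("train_dtype")
    score_types = score_df.dtypes.astype(str).rename("score_dtype")
    both = pd.concat([train_types, score_types], axis=1)
    # A column present in only one frame shows up as NaN on the other side.
    both = both.fillna("<missing>")
    return both[both["train_dtype"] != both["score_dtype"]]


# Toy frames standing in for the training data and the downloaded subset.
train_df = pd.DataFrame({
    "CASE_AGE_MONTHS": [1.0, 2.0, 3.0],
    "REGION": pd.Categorical(["a", "b", "a"]),  # hypothetical column
})
score_df = pd.DataFrame({
    "CASE_AGE_MONTHS": [4, 5, 6],       # read back as integers
    "REGION": ["a", "b", "c"],          # read back as plain strings
})

report = dtype_mismatches(train_df, score_df)
print(report)
```

An empty report would suggest the two frames agree on dtypes, in which case the difference probably lies elsewhere, for example in the values themselves.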