I am trying to build a playing-card classifier. The goal is to pass an image of a playing card to the script and have it output whether the image shows a playing card and, if so, which one.

For this, I wrote the following comparison method, which I call with the input image and each of the comparison images, i.e.:

- Input image vs. 2_C

- Input image vs. 2_H

- Input image vs. 2_D

- [...]
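Comparing the input against every reference image and picking the best match, as described above, could look roughly like the sketch below. The names `rank_references` and `classify_card`, the `references` dict (card name to reference image) and the `min_score` threshold are hypothetical; `compare` would be the `compare_images` function. Treating a best score below `min_score` as "not a playing card" is an assumption, and the threshold would have to be tuned on real images.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple


def rank_references(
    input_img: Any,
    references: Dict[str, Any],
    compare: Callable[[Any, Any], float],
) -> List[Tuple[str, float]]:
    """Score the input image against every reference card, best match first."""
    scores = [(name, compare(input_img, ref_img)) for name, ref_img in references.items()]
    # Highest score first, like the "Match of <...>" listing
    return sorted(scores, key=lambda item: item[1], reverse=True)


def classify_card(
    input_img: Any,
    references: Dict[str, Any],
    compare: Callable[[Any, Any], float],
    min_score: float,
) -> Optional[str]:
    """Return the best-matching card name, or None if no score reaches min_score."""
    ranking = rank_references(input_img, references, compare)
    if not ranking:
        return None
    best_name, best_score = ranking[0]
    # Below the (hypothetical) threshold, treat the input as "not a playing card"
    return best_name if best_score >= min_score else None
```

Usage would be along the lines of `classify_card(img, {"2_C": img_2c, "2_H": img_2h}, compare_images, min_score=...)`, with the threshold left open because it depends on how the score is normalized.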

Comparison method:

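```python
import cv2


def compare_images(img1, img2):
    percent = -1  # returned when descriptors cannot be computed

    detector = cv2.SIFT_create()

    # Detect SIFT keypoints and compute their descriptors for both images
    kp1, des1 = detector.detectAndCompute(img1, None)
    kp2, des2 = detector.detectAndCompute(img2, None)
    if des1 is None or des2 is None:
        return percent

    # For each descriptor of img1, find its 2 nearest neighbours in img2
    bf = cv2.BFMatcher_create()
    matches = bf.knnMatch(des1, des2, k=2)

    # Apply ratio test
    good = []
    for pair in matches:
        if len(pair) < 2:
            continue  # fewer than two neighbours: ratio test not applicable
        m, n = pair
        if m.distance < 0.75 * n.distance:
            good.append([m])  # wrapped in a list for cv2.drawMatchesKnn

    a = len(good)
    # Good matches relative to the number of keypoints found in img2
    percent = (a * 100) / len(kp2)

    return percent
```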
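In `compare_images`, `good` is counted over the descriptors of `img1` (the query side of `knnMatch(des1, des2, k=2)`), yet it is divided by `len(kp2)`. The score is therefore not bounded by 100 whenever `img2` has fewer keypoints than `img1`, which could explain a value such as 197.3 for 2_J. A minimal sketch, assuming the same 0.75 ratio test is kept, that normalizes by the number of query descriptors instead; `ratio_test_score` is a hypothetical helper name, and it only relies on each match having a `.distance` attribute, as OpenCV's `DMatch` does.

```python
from typing import Sequence


def ratio_test_score(knn_matches: Sequence[Sequence], ratio: float = 0.75) -> float:
    """Percentage of query descriptors (img1) that pass Lowe's ratio test.

    knn_matches is the result of knnMatch(des1, des2, k=2): one entry per
    descriptor of img1, each holding up to two matches with a .distance.
    """
    if len(knn_matches) == 0:
        return -1.0  # same "no result" value as the original percent = -1
    good = 0
    for pair in knn_matches:
        # knnMatch can return fewer than two neighbours (e.g. when img2 has
        # a single descriptor); such entries cannot be ratio-tested
        if len(pair) < 2:
            continue
        m, n = pair[0], pair[1]
        if m.distance < ratio * n.distance:
            good += 1
    # At most one good match per query descriptor, so the result is in [0, 100]
    return good * 100.0 / len(knn_matches)
```

It would be called as `ratio_test_score(bf.knnMatch(des1, des2, k=2))`. Because the denominator depends only on the input image, ranking the references by this score is the same as ranking them by raw good-match count, so reference images with few keypoints are no longer favoured.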

The script then reports how well each playing card matches the input image, but the results are not always reliable. For example:

Input image: 2_C

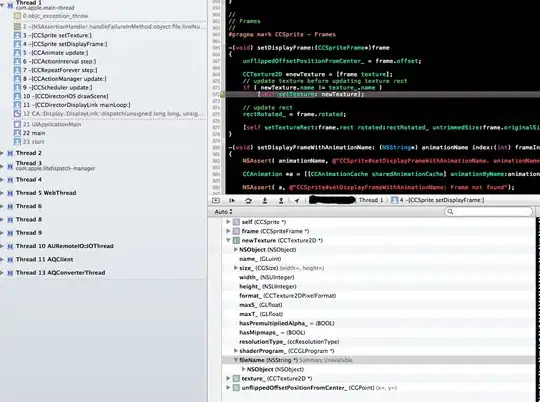

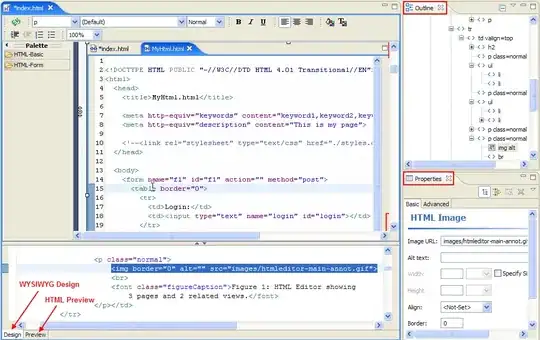

Output of cv2.drawMatchesKnn(img2, kp2, img1, kp1, matches, None):

Output of percent value:

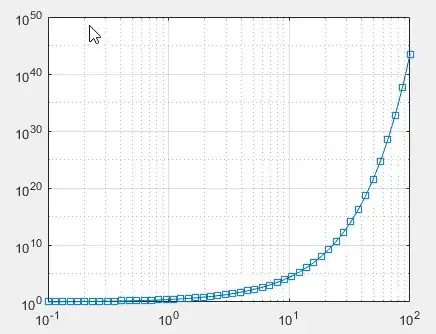

```
Match of <2_C> & <2_J>: 197.2972972972973
Match of <2_C> & <2_C>: 28.115942028985508
Match of <2_C> & <A_C>: 27.0
Match of <2_C> & <3_H>: 26.344086021505376
Match of <2_C> & <2_S>: 25.28735632183908
Match of <2_C> & <2_D>: 23.529411764705884
Match of <2_C> & <7_H>: 20.95808383233533
Match of <2_C> & <2_H>: 18.807339449541285
Match of <2_C> & <5_H>: 16.80327868852459
Match of <2_C> & <4_H>: 15.104166666666666
Match of <2_C> & <3_C>: 14.031180400890868
Match of <2_C> & <3_S>: 12.546125461254613
[...]
```

How can I improve the quality of detection?