I'm very new to Apache Airflow and have just started a course on Udemy (this course).

We received a YAML file and were asked to follow instructions in order to install Airflow. I believe I was able to install Airflow, because I did not get any errors while doing the following steps:

- create a new file new_file.env (Visual Studio)

- add these lines to the new file and save it:

AIRFLOW_IMAGE_NAME=apache/airflow:2.3.0

AIRFLOW_UID=50000

- open the terminal and run

docker-compose up -d
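For anyone trying to reproduce this, the steps above amount to roughly the following shell commands. This is only a sketch: I actually created the file in the editor rather than from the terminal, and it assumes the commands are run in the same folder as the course's YAML file.

```shell
# Sketch: write the two settings into new_file.env (the file name used above).
cat > new_file.env <<'EOF'
AIRFLOW_IMAGE_NAME=apache/airflow:2.3.0
AIRFLOW_UID=50000
EOF

# Print the file to confirm both lines were saved.
cat new_file.env

# Then, from the same folder as the YAML file:
# docker-compose up -d
```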

Then, when I tried to open localhost:8080, nothing loaded.

When I checked the containers, using

docker-compose ps

I saw that some of the containers are not healthy.

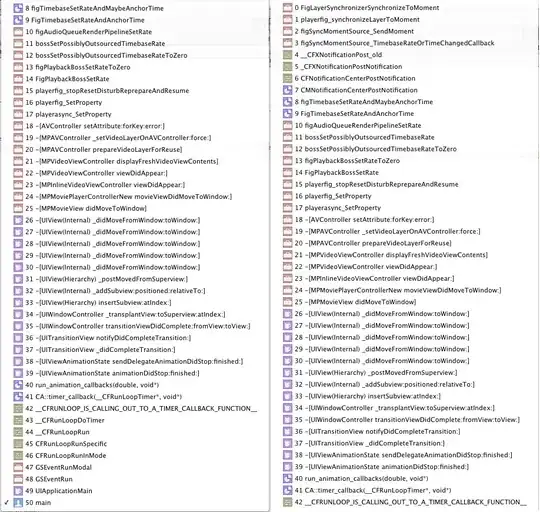

docker logs materials_name_of_the_container #here I inserted a different container name each time
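Instead of checking one container at a time, something like the following could print the logs of every unhealthy container in one go. It is only a sketch: `show_unhealthy_logs` is a name made up here, and the 20-line tail is an arbitrary choice.

```shell
# Hypothetical helper: print the last log lines of every container
# that Docker currently reports as unhealthy.
show_unhealthy_logs() {
    # --filter "health=unhealthy" keeps only containers failing their health check;
    # --format '{{.Names}}' prints just the container names, one per line.
    docker ps --filter "health=unhealthy" --format '{{.Names}}' |
    while read -r name; do
        echo "===== $name ====="
        # --tail 20 limits the output to the last 20 log lines (arbitrary choice).
        docker logs --tail 20 "$name" 2>&1
    done
}
```

Running `show_unhealthy_logs` after `docker-compose up -d` should then show each unhealthy container's name followed by the end of its log.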

The most common error was "unable to configure handler 'processor'".

I found a post here about a similar problem that recommended using pip to install the Azure extras, and I tried running this in the terminal:

pip install 'apache-airflow[azure_blob_storage,azure_data_lake,azure_cosmos,azure_container_instances]

#also tried with one more '

pip install 'apache-airflow[azure_blob_storage,azure_data_lake,azure_cosmos,azure_container_instances]'

I got an error that pip is not recognized:

My goal is to complete the installation and, along the way, to understand why these errors happened. I believe the YAML file is fine (because it comes from an organized course), but I have no idea where or what went wrong inside the containers, or where these containers are located, so any beginner-friendly explanation is welcome.