Summary

- Yes, we can. However, traffic can be distributed unequally across the servers behind the domain name, which puts a slightly larger load on some of them and makes server resource usage inefficient.

- nscd respects the TTL of a DNS response, but a TTL shorter than 15s behaves like 15s. This is because nscd prunes its cache at intervals of at least 15s, defined as CACHE_PRUNE_INTERVAL in /nscd/nscd.h

- Because of CACHE_PRUNE_INTERVAL, DNS round-robin can distribute traffic unequally across the servers behind the domain.

- Client keep-alive can amplify this imbalance.

- A large number of clients can reduce this imbalance.

In detail

Environment

- CentOS 7.9

- nscd (GNU libc) 2.17

- locust 2.8.6 in master-worker mode across several servers. Number of workers: 1 ~ 60, with a single master. Each worker runs on its own server.

- A record

test-nscd.apps.com bound to two servers (PM1, PM2). TTL: 1 ~ 60s

- /etc/nscd.conf

#

# /etc/nscd.conf

#

# An example Name Service Cache config file. This file is needed by nscd.

#

# Legal entries are:

#

# logfile <file>

# debug-level <level>

# threads <initial #threads to use>

# max-threads <maximum #threads to use>

# server-user <user to run server as instead of root>

# server-user is ignored if nscd is started with -S parameters

# stat-user <user who is allowed to request statistics>

# reload-count unlimited|<number>

# paranoia <yes|no>

# restart-interval <time in seconds>

#

# enable-cache <service> <yes|no>

# positive-time-to-live <service> <time in seconds>

# negative-time-to-live <service> <time in seconds>

# suggested-size <service> <prime number>

# check-files <service> <yes|no>

# persistent <service> <yes|no>

# shared <service> <yes|no>

# max-db-size <service> <number bytes>

# auto-propagate <service> <yes|no>

#

# Currently supported cache names (services): passwd, group, hosts

#

# logfile /var/log/nscd.log

# threads 6

# max-threads 128

server-user nscd

# stat-user nocpulse

debug-level 0

# reload-count 5

paranoia no

# restart-interval 3600

enable-cache passwd yes

positive-time-to-live passwd 600

negative-time-to-live passwd 20

suggested-size passwd 211

check-files passwd yes

persistent passwd yes

shared passwd yes

max-db-size passwd 33554432

auto-propagate passwd yes

enable-cache group yes

positive-time-to-live group 3600

negative-time-to-live group 60

suggested-size group 211

check-files group yes

persistent group yes

shared group yes

max-db-size group 33554432

auto-propagate group yes

enable-cache hosts yes

positive-time-to-live hosts 300

negative-time-to-live hosts 20

suggested-size hosts 211

check-files hosts yes

persistent hosts yes

shared hosts yes

max-db-size hosts 33554432

What experiments I did

- Sending traffic to test-nscd.apps.com with TTL 1 ~ 60s from 1 locust worker, and checking how the traffic is distributed between PM1 and PM2

- Sending traffic to test-nscd.apps.com with TTL 1s from 1 ~ 60 locust workers, and checking how the traffic is distributed between PM1 and PM2

- Sending traffic to test-nscd.apps.com with TTL 1s from 1 ~ 60 locust workers using keep-alive, and checking how the traffic is distributed between PM1 and PM2

The test results

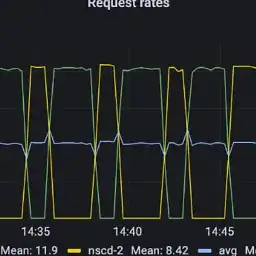

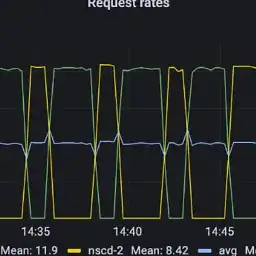

1. Sending traffic to test-nscd.apps.com with TTL 1 ~ 60s from 1 locust worker and checking how the traffic is distributed between PM1 and PM2

- Traffic is distributed, but not equally.

- You can see that the client (worker) gets a DNS reply from the DNS server every 60~75s by using

tcpdump src port 53 -vvv

14:37:55.116675 IP (tos 0x80, ttl 49, id 41538, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.39956: [udp sum ok] 9453 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [1m] A 10.130.248.64, test-nscd.apps.com. [1m] A 10.130.248.63 (83)

--

14:39:10.121451 IP (tos 0x80, ttl 49, id 20047, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.55173: [udp sum ok] 6722 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [1m] A 10.130.248.63, test-nscd.apps.com. [1m] A 10.130.248.64 (83)

--

14:40:25.120127 IP (tos 0x80, ttl 49, id 28851, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.39461: [udp sum ok] 40481 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [1m] A 10.130.248.63, test-nscd.apps.com. [1m] A 10.130.248.64 (83)

--
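The reply intervals quoted in this section can be read off the capture timestamps. Here is a minimal Python sketch, assuming the timestamp is the first whitespace-separated field of each tcpdump header line and that the capture does not cross midnight. The helper name `reply_intervals` is made up for illustration, and the header lines are abbreviated with "..." after the id field.

```python
from datetime import datetime

def reply_intervals(header_lines):
    """Return the gaps, in seconds, between consecutive tcpdump header lines."""
    # tcpdump prints HH:MM:SS.microseconds as the first field by default.
    times = [datetime.strptime(line.split()[0], "%H:%M:%S.%f") for line in header_lines]
    return [(later - earlier).total_seconds() for earlier, later in zip(times, times[1:])]

# Header lines from the capture above (abbreviated).
capture = [
    "14:37:55.116675 IP (tos 0x80, ttl 49, id 41538, ...)",
    "14:39:10.121451 IP (tos 0x80, ttl 49, id 20047, ...)",
    "14:40:25.120127 IP (tos 0x80, ttl 49, id 28851, ...)",
]

print([round(gap) for gap in reply_intervals(capture)])  # [75, 75]
```

For this capture both gaps round to 75s, which is the upper end of the 60~75s range mentioned above.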

- Traffic is distributed, but not equally, because the TTL is too large.

- You can see that the client gets a DNS reply from the DNS server every 30~45s.

16:14:04.359901 IP (tos 0x80, ttl 49, id 39510, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.51466: [udp sum ok] 43607 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [5s] A 10.130.248.63, test-nscd.apps.com. [5s] A 10.130.248.64 (83)

--

16:14:19.361964 IP (tos 0x80, ttl 49, id 3196, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.39370: [udp sum ok] 62519 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [5s] A 10.130.248.63, test-nscd.apps.com. [5s] A 10.130.248.64 (83)

--

16:14:34.364359 IP (tos 0x80, ttl 49, id 27647, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.49659: [udp sum ok] 51890 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [5s] A 10.130.248.64, test-nscd.apps.com. [5s] A 10.130.248.63 (83)

--

- Traffic is distributed, but not equally.

- However, the traffic is distributed more equally than in the TTL 45s case.

- You can see that the client gets a DNS reply from the DNS server every 15~30s.

15:45:04.141762 IP (tos 0x80, ttl 49, id 30678, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.35411: [udp sum ok] 63073 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [15s] A 10.130.248.63, test-nscd.apps.com. [15s] A 10.130.248.64 (83)

--

15:45:34.191159 IP (tos 0x80, ttl 49, id 48496, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.52441: [udp sum ok] 24183 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [15s] A 10.130.248.63, test-nscd.apps.com. [15s] A 10.130.248.64 (83)

--

15:46:04.192905 IP (tos 0x80, ttl 49, id 32793, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.49875: [udp sum ok] 59065 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [15s] A 10.130.248.63, test-nscd.apps.com. [15s] A 10.130.248.64 (83)

--

- Traffic is distributed, but not equally.

- However, the traffic is distributed more equally than in the TTL 30s case.

- You can see that the client gets a DNS reply from the DNS server every 15s, although the TTL is 5s.

16:14:04.359901 IP (tos 0x80, ttl 49, id 39510, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.51466: [udp sum ok] 43607 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [5s] A 10.130.248.63, test-nscd.apps.com. [5s] A 10.130.248.64 (83)

--

16:14:19.361964 IP (tos 0x80, ttl 49, id 3196, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.com.39370: [udp sum ok] 62519 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [5s] A 10.130.248.63, test-nscd.apps.com. [5s] A 10.130.248.64 (83)

--

16:14:34.364359 IP (tos 0x80, ttl 49, id 27647, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.com.49659: [udp sum ok] 51890 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [5s] A 10.130.248.64, test-nscd.apps.com. [5s] A 10.130.248.63 (83)

--

- Traffic is distributed, but not equally.

- The result is similar to the TTL 5s case.

- You can see that the client gets a DNS reply from the DNS server every 15s, although the TTL is 1s. This is the same as in the TTL 5s case.

16:43:27.814701 IP (tos 0x80, ttl 49, id 28956, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.49891: [udp sum ok] 22634 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [1s] A 10.130.248.63, test-nscd.apps.com. [1s] A 10.130.248.64 (83)

--

16:43:42.816721 IP (tos 0x80, ttl 49, id 27128, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.34490: [udp sum ok] 37589 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [1s] A 10.130.248.63, test-nscd.apps.com. [1s] A 10.130.248.64 (83)

--

16:43:57.842106 IP (tos 0x80, ttl 49, id 60723, offset 0, flags [none], proto UDP (17), length 111)

10.230.167.65.domain > test-client.55185: [udp sum ok] 1139 q: A? test-nscd.apps.com. 2/0/0 test-nscd.apps.com. [1s] A 10.130.248.63, test-nscd.apps.com. [1s] A 10.130.248.64 (83)

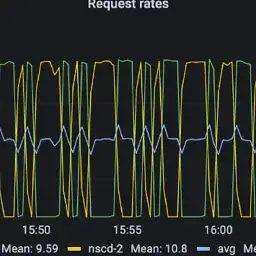

2. Sending traffic to test-nscd.apps.com with TTL 1s from 1 ~ 60 locust workers and checking how the traffic is distributed between PM1 and PM2

- The number of locust workers was increased in steps: 1, 10, 20, 40, 60

- I increased the number of workers every 30 minutes

- Traffic became more equally distributed as the number of workers (clients) increased

- At 60 workers, the time-averaged RPS of the two servers differed by only 3 percent.

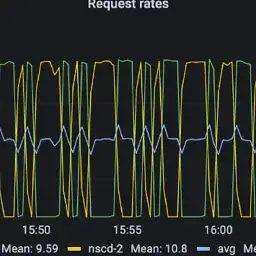

3. Sending traffic to test-nscd.apps.com with TTL 1s from 1 ~ 60 locust workers using keep-alive and checking how the traffic is distributed between PM1 and PM2

- The number of locust workers was increased in steps: 1, 10, 20, 40, 60

- I increased the number of workers every 30 minutes

- Traffic became more equally distributed as the number of workers (clients) increased

- At 60 workers, the time-averaged RPS of the two servers differed by only 6 percent.

- The result is not as good as in experiment 2 because keep-alive reuses connections

4. (Comparison experiment) Sending traffic to test-nscd.apps.com from a JVM machine (the JVM has its own DNS caching) and checking how the traffic is distributed between PM1 and PM2

- We found that the TTL should be smaller than 10s to distribute traffic equally.

Conclusion

nscd respects the TTL of DNS responses. However, a TTL shorter than 15s behaves like 15s, because nscd prunes its cache at intervals of at least 15s, defined as CACHE_PRUNE_INTERVAL in /nscd/nscd.h. The tcpdump captures for the TTL 5s and TTL 1s cases in the test results show this.
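The effect of the prune interval can be sketched as a small calculation. This is a simplified model fitted to the captures in this post, not nscd's actual code: it assumes an expired entry is only dropped on a prune tick, and that ticks are CACHE_PRUNE_INTERVAL seconds apart. The function name `earliest_refresh` is made up for illustration.

```python
import math

CACHE_PRUNE_INTERVAL = 15  # seconds; the value this post attributes to /nscd/nscd.h

def earliest_refresh(ttl, prune_interval=CACHE_PRUNE_INTERVAL):
    """Earliest prune tick, in seconds after caching, at which an entry with this TTL has expired."""
    # Assumption: expiry is only noticed on a prune tick, so round the TTL up to the next tick.
    return max(1, math.ceil(ttl / prune_interval)) * prune_interval

for ttl in (1, 5, 15, 60):
    print(ttl, earliest_refresh(ttl))
```

Under this model, TTL 1s and TTL 5s both give 15s, which matches the captures. The observed interval can be one tick longer than this lower bound, as in the 60~75s range seen with TTL 60s.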

Because of CACHE_PRUNE_INTERVAL, traffic can be distributed unequally across the servers behind the domain when DNS round-robin is used. Compared with the JVM's DNS caching, nscd makes DNS round-robin harder to use.

Client keep-alive can amplify this imbalance

- Keep-alive reuses connections, so DNS queries seem to become less frequent and the traffic more unevenly distributed.

A large number of clients can reduce this imbalance

- A large number of clients seems to make DNS queries more frequent overall, so the traffic becomes less unevenly distributed.
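Why more clients even out the load can be illustrated with a toy simulation. This is a sketch under strong assumptions, not a reproduction of the locust experiments: every client sends the same request rate, all clients refresh their cached address at the same moments, and each refresh lands on PM1 or PM2 with equal probability, independently of the other clients. The function name `mean_imbalance` is made up for illustration.

```python
import random

def mean_imbalance(n_clients, n_refreshes=2000, seed=0):
    """Average of |clients on PM1 - clients on PM2| / n_clients over many cache refreshes."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_refreshes):
        # Assumption: each client independently ends up on PM1 with probability 0.5.
        on_pm1 = sum(rng.random() < 0.5 for _ in range(n_clients))
        on_pm2 = n_clients - on_pm1
        total += abs(on_pm1 - on_pm2) / n_clients
    return total / n_refreshes

for n in (1, 10, 20, 40, 60):
    print(n, round(mean_imbalance(n), 3))
```

With a single client, all traffic goes to one server between refreshes, so the imbalance is always 1. As the number of clients grows, the per-refresh imbalance shrinks. The simulation measures the imbalance at each refresh rather than the time-averaged RPS difference reported in the experiments, so the numbers are not directly comparable.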