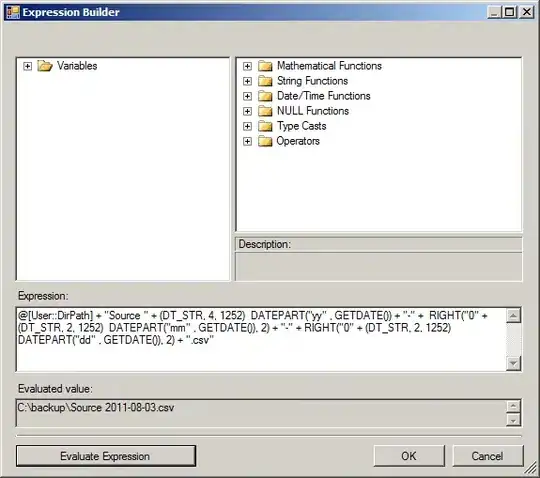

I'm trying to use https://deno.land/x/opencv@v4.3.0-10 to get template matching to work in Deno. I based my code heavily on the Node example provided, but I can't get it to work yet.

Following the source code, I first ran into this error when calling cv.matFromImageData(imageSource): Uncaught (in promise) TypeError: Cannot convert "undefined" to int.

After experimenting and searching, I figured out that the function expects {data: Uint8ClampedArray, height: number, width: number}. This is based on this SO post and might be incorrect, which is why I'm posting it here.
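To make that assumed shape concrete, here is a minimal TypeScript sketch. The `ImageDataLike` interface and the `toImageDataLike` helper are hypothetical names, not part of the opencv module. The sketch assumes `data` holds decoded RGBA pixels, 4 bytes per pixel, as in the browser's ImageData.

```typescript
// Shape that cv.matFromImageData appears to expect (an assumption from the post).
interface ImageDataLike {
  data: Uint8ClampedArray;
  width: number;
  height: number;
}

// Hypothetical helper: wraps decoded RGBA pixels and rejects any buffer whose
// length is not width * height * 4.
function toImageDataLike(
  rgba: Uint8Array | Uint8ClampedArray,
  width: number,
  height: number,
): ImageDataLike {
  const expected = width * height * 4;
  if (rgba.length !== expected) {
    throw new RangeError(`expected ${expected} bytes of RGBA data, got ${rgba.length}`);
  }
  return { data: new Uint8ClampedArray(rgba), width, height };
}

// A 2x1 image: one opaque red pixel followed by one opaque blue pixel.
const pixels = new Uint8Array([255, 0, 0, 255, 0, 0, 255, 255]);
const imageData = toImageDataLike(pixels, 2, 1);
console.log(imageData.data.length); // 8
```

The bytes of a compressed file such as a PNG, read straight from disk, would generally fail this length check, because they are not raw pixels.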

The issue I'm currently facing is that I don't get proper matches from my template. I only get a match when I set the threshold to 0.1 or lower, and that match is incorrect: { xStart: 0, yStart: 0, xEnd: 29, yEnd: 25 }.

I used the images provided by the templateMatching example here.

Haystack

Needle


Any input/thoughts on this are appreciated.

    import { cv } from 'https://deno.land/x/opencv@v4.3.0-10/mod.ts';

    export const match = (imagePath: string, templatePath: string) => {
      const imageSource = Deno.readFileSync(imagePath);
      const imageTemplate = Deno.readFileSync(templatePath);

      const src = cv.matFromImageData({ data: imageSource, width: 640, height: 640 });
      const templ = cv.matFromImageData({ data: imageTemplate, width: 29, height: 25 });

      const processedImage = new cv.Mat();
      const logResult = new cv.Mat();
      const mask = new cv.Mat();

      cv.matchTemplate(src, templ, processedImage, cv.TM_SQDIFF, mask);
      cv.log(processedImage, logResult)
      console.log(logResult.empty())
    };

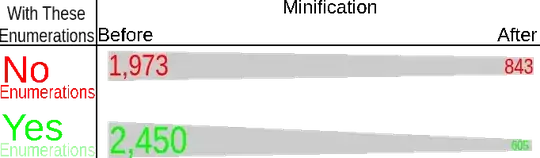

UPDATE

Using @ChristophRackwitz's answer and digging into opencv(js) docs, I managed to get close to my goal.

I decided to stop handling multiple matches and to focus on a single (best) match of my needle in the haystack, since that is ultimately my use case anyway.

Going through the code provided in this example and comparing its data with the data in my code, I figured out that something was off with the binary image data I supplied to cv.matFromImageData. I solved this by properly decoding the PNG and passing the decoded image's bitmap to cv.matFromImageData.

I used TM_SQDIFF as suggested, and got some great results.

Haystack

Needle

Result

I achieved this in the following way.

    import { cv } from 'https://deno.land/x/opencv@v4.3.0-10/mod.ts';
    import { Image } from 'https://deno.land/x/imagescript@v1.2.14/mod.ts';

    export type Match = false | {
      x: number;
      y: number;
      width: number;
      height: number;
      center?: {
        x: number;
        y: number;
      };
    };

    export const match = async (haystackPath: string, needlePath: string, drawOutput = false): Promise<Match> => {
      const perfStart = performance.now()

      const haystack = await Image.decode(Deno.readFileSync(haystackPath));
      const needle = await Image.decode(Deno.readFileSync(needlePath));

      const haystackMat = cv.matFromImageData({
        data: haystack.bitmap,
        width: haystack.width,
        height: haystack.height,
      });
      const needleMat = cv.matFromImageData({
        data: needle.bitmap,
        width: needle.width,
        height: needle.height,
      });

      const dest = new cv.Mat();
      const mask = new cv.Mat();

      cv.matchTemplate(haystackMat, needleMat, dest, cv.TM_SQDIFF, mask);

      const result = cv.minMaxLoc(dest, mask);

      const match: Match = {
        x: result.minLoc.x,
        y: result.minLoc.y,
        width: needleMat.cols,
        height: needleMat.rows,
      };

      match.center = {
        x: match.x + (match.width * 0.5),
        y: match.y + (match.height * 0.5),
      };

      if (drawOutput) {
        haystack.drawBox(
          match.x,
          match.y,
          match.width,
          match.height,
          Image.rgbaToColor(255, 0, 0, 255),
        );

        Deno.writeFileSync(`${haystackPath.replace('.png', '-result.png')}`, await haystack.encode(0));
      }

      const perfEnd = performance.now()
      console.log(`Match took ${perfEnd - perfStart}ms`)

      return match.x > 0 || match.y > 0 ? match : false;
    };

ISSUE

The remaining issue is that I also get a false match when it should not match anything.

Based on what I know so far, I should be able to solve this using a threshold like so:

    cv.threshold(dest, dest, 0.9, 1, cv.THRESH_BINARY);

Adding this line after matchTemplate does mean that I no longer get false matches when I don't expect any, but I also no longer get a match when I DO expect one.

Obviously I am missing something about how to work with the cv threshold. Any advice on that?
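One possible factor is the scale of the scores. As documented, TM_SQDIFF sums the squared pixel differences, while TM_SQDIFF_NORMED divides that sum by sqrt(ΣT² · ΣI²). The sketch below is a plain TypeScript illustration of those two formulas on a tiny single-channel image, without a mask. It is not the library's implementation, and `Gray` and `sqdiffScores` are hypothetical names.

```typescript
// Single-channel image stored row by row; a simplification of OpenCV's Mats.
type Gray = { data: number[]; width: number; height: number };

// Scores every placement of the template in the spirit of TM_SQDIFF (raw)
// and TM_SQDIFF_NORMED (normed). Lower is better; 0 is a perfect match.
function sqdiffScores(image: Gray, templ: Gray): { raw: number[]; normed: number[] } {
  const raw: number[] = [];
  const normed: number[] = [];
  const templEnergy = templ.data.reduce((sum, v) => sum + v * v, 0);
  for (let y = 0; y + templ.height <= image.height; y++) {
    for (let x = 0; x + templ.width <= image.width; x++) {
      let diff = 0;
      let patchEnergy = 0;
      for (let ty = 0; ty < templ.height; ty++) {
        for (let tx = 0; tx < templ.width; tx++) {
          const i = image.data[(y + ty) * image.width + (x + tx)];
          const t = templ.data[ty * templ.width + tx];
          diff += (t - i) ** 2;
          patchEnergy += i * i;
        }
      }
      raw.push(diff);
      const denom = Math.sqrt(templEnergy * patchEnergy);
      // Zero-denominator handling is a choice made for this sketch only.
      normed.push(denom === 0 ? (diff === 0 ? 0 : 1) : diff / denom);
    }
  }
  return { raw, normed };
}

// The template almost matches at x = 2 (201, 52 versus 200, 50).
const image: Gray = { data: [100, 110, 201, 52, 90], width: 5, height: 1 };
const templ: Gray = { data: [200, 50], width: 2, height: 1 };
const scores = sqdiffScores(image, templ);
console.log(scores.raw); // [ 13600, 30901, 5, 23504 ]
console.log(scores.normed);
```

In this toy case even the near-perfect placement has a raw score of 5, so a 0.9 cutoff with THRESH_BINARY would turn every raw score into 1, while the normalised score at that placement stays well below 0.01. Raw scores also grow with the template size and the pixel range, which makes a fixed cutoff hard to choose.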

UPDATE 2

After experimenting and reading some more I managed to get it to work with normalised values like so:

    cv.matchTemplate(haystackMat, needleMat, dest, cv.TM_SQDIFF_NORMED, mask);
    cv.threshold(dest, dest, 0.01, 1, cv.THRESH_BINARY);

Apart from the values being normalised, this seems to do the trick consistently for me. However, I would still like to know why I can't get it to work without normalised values, so any input is still appreciated. I will mark this post as solved in a few days to give people the chance to discuss the topic some more while it's still relevant.
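One side note on the final `match.x > 0 || match.y > 0` check: it would also reject a genuine match in the top-left corner. A possible alternative, sketched below, is to compare the best score with a cutoff instead of inspecting the location. This assumes the object returned by cv.minMaxLoc exposes `minVal` alongside `minLoc`, and that minMaxLoc is called on the unthresholded TM_SQDIFF_NORMED output. `bestMatch` and `MinMaxResult` are hypothetical names, and the 0.01 cutoff is simply the value used above.

```typescript
// Subset of the cv.minMaxLoc result that this sketch relies on (assumed shape).
interface MinMaxResult {
  minVal: number;
  minLoc: { x: number; y: number };
}

type Found = { x: number; y: number; score: number };

// Hypothetical helper: accept the best location only if its score is low enough.
function bestMatch(result: MinMaxResult, maxScore: number): Found | false {
  if (result.minVal > maxScore) return false;
  return { x: result.minLoc.x, y: result.minLoc.y, score: result.minVal };
}

// A near-perfect score at the top-left corner is accepted.
console.log(bestMatch({ minVal: 0.0001, minLoc: { x: 0, y: 0 } }, 0.01));
// A poor score is rejected regardless of where it occurs.
console.log(bestMatch({ minVal: 0.4, minLoc: { x: 12, y: 7 } }, 0.01)); // false
```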