We have the following situation:

An existing Kafka topic with 9 partitions contains multiple record types. These are partitioned according to a custom header (key = null), which is basically a string UUID. The data is consumed via Kafka Streams, filtered by the type that interests us and repartitioned into a new topic containing only specific record types. The new topic contains 12 partitions and has key = <original id in header>. The increased partition count is to allow more consumers to process this data.

This is where things seem to get a little weird.

In the original topic, we have millions of the relevant records. In each of the 9 partitions, we see relatively monotonically increasing record times, which is to be expected as the partitions should be assigned relatively randomly due to the high cardinality of the partition key.

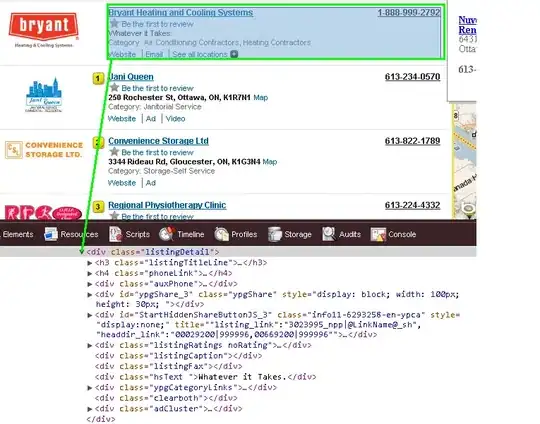

In the new topic, we're seeing something like the following:

The record timestamps seem to be jumping all over the place. Some discrepancies are to be expected, since the partitioning in the original (as well as the new) topic isn't exactly round-robin. A few partitions in our original topic have offsets that are ~1-2M higher or lower than others, but given that we ingest many millions of records daily, I can't explain a single record with a timestamp of 5/28/2022 sitting between records from 6/17/2022 and 6/14/2022.

What could explain this behaviour?

Edit:

Looking at the consumer group offsets, I've found something interesting:

I was reingesting the data with multiple consumers and noted that they have severely different lags per partition. I don't quite understand why this discrepancy would be so large. Going to investigate further...

Edit:

To add some more detail, the workflow of the Streams app is as follows:

    SpecificAvroSerde<MyAvroEvent> specificAvroSerde = new SpecificAvroSerde<>();
    specificAvroSerde.configure(
        Collections.singletonMap(AbstractKafkaSchemaSerDeConfig.SCHEMA_REGISTRY_URL_CONFIG, SCHEMA_REGISTRY_URL),
        /*isKey*/ false);

    streamsBuilder
        .stream("events", Consumed.with(Serdes.Void(), Serdes.ByteArray()))
        .transform(new FilterByTypeHeaderTransformerSupplier(topicProperties))
        .transform(new MyEventAvroTransformerSupplier())
        .to(topicProperties.getOutputTopic(), Produced.with(Serdes.UUID(), specificAvroSerde));

where the FilterByTypeHeaderTransformerSupplier instantiates a transformer that does, in essence:

    public KeyValue<Void, byte[]> transform(Void key, byte[] value) {
        // checks record headers
        if (matchesFilter()) {
            return KeyValue.pair(key, value);
        }
        // skip since it is not an event type that interests us
        return null;
    }

while the other transformer does the following (which doesn't have great performance but does the job for now):

    public KeyValue<UUID, MyAvroEvent> transform(Void key, byte[] value) {
        MyEvent event = objectMapper.readValue(value, MyEvent.class);
        MyAvroEvent avroRecord = serializeAsAvro(event);
        return KeyValue.pair(event.getEventId(), avroRecord);
    }

hence I use the default timestamp extractor (FailOnInvalidTimestamp).

Most notably, as can be seen, I'm adding a key to this record: however, this key is the same one that was previously used to partition the data (in the existing 9 partitions, however).

I'll try removing this key first to see if the behaviour changes, but I'm kind of doubtful that that's the reason, especially since it's the same partition key value that was used previously.

I still haven't found the reason for the wildly differing consumer offsets, unfortunately. I very much hope that I don't have to have a single consumer reprocess this once to catch up, since that would take a very long time...

Edit 2:

I believe I've found the cause of this discrepancy. The original records were produced using Spring Cloud Stream, and they include headers such as "scst_partition=4". However, the hashing that was used by the producer back then was Java-based (e.g. "keyAsString".hashCode() % numPartitions), while the Kafka clients use:

    Utils.toPositive(Utils.murmur2(keyAsBytes)) % numPartitions

As a result, we're seeing behaviour where records in e.g. source partition 0 could land in any one of the new partitions. Hence, small discrepancies in the source distribution could lead to rather large fluctuations in record ordering in the new partitions.
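To make the mismatch concrete, here is a minimal sketch that computes both partition choices for the same keys. Several things in it are assumptions rather than facts from the setup above: the murmur2 method is a hand transcription that is assumed (not verified here) to match Kafka's Utils.murmur2, the key is assumed to be hashed as the UTF-8 bytes of its string form, Math.abs is assumed for folding negative hash codes, and the class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.UUID;

class HashComparison {

    // Old scheme as described above: Java hashCode modulo the partition count.
    // Math.abs is an assumption to keep the result non-negative.
    static int javaHashPartition(String key, int numPartitions) {
        return Math.abs(key.hashCode() % numPartitions);
    }

    // Hand transcription of a 32-bit murmur2; assumed to match
    // org.apache.kafka.common.utils.Utils.murmur2, not verified against it.
    static int murmur2(byte[] data) {
        final int m = 0x5bd1e995;
        final int r = 24;
        int length = data.length;
        int h = 0x9747b28c ^ length;
        for (int i = 0; i < length / 4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff)
                    + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16)
                    + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        int tail = length & ~3;
        switch (length % 4) {
            case 3:
                h ^= (data[tail + 2] & 0xff) << 16;
                // fall through
            case 2:
                h ^= (data[tail + 1] & 0xff) << 8;
                // fall through
            case 1:
                h ^= data[tail] & 0xff;
                h *= m;
                break;
            default:
                break;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // New scheme: clear the sign bit (what Utils.toPositive does), then take the modulo.
    static int murmurPartition(String key, int numPartitions) {
        byte[] bytes = key.getBytes(StandardCharsets.UTF_8);
        return (murmur2(bytes) & 0x7fffffff) % numPartitions;
    }

    // Counts how many of sampleSize deterministic UUID keys land on the
    // same partition number under both schemes.
    static int countAgreements(int sampleSize, int numPartitions) {
        int agreements = 0;
        for (int i = 0; i < sampleSize; i++) {
            String key = UUID.nameUUIDFromBytes(
                    ("key-" + i).getBytes(StandardCharsets.UTF_8)).toString();
            if (javaHashPartition(key, numPartitions) == murmurPartition(key, numPartitions)) {
                agreements++;
            }
        }
        return agreements;
    }

    public static void main(String[] args) {
        System.out.println("keys on the same partition under both schemes: "
                + countAgreements(1000, 9) + " of 1000");
    }
}
```

If the two hashes behave independently, only about one key in nine would be expected to keep its partition number, which fits the observation that a source partition fans out over all target partitions.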

I'm not quite sure how to deal with this in a sensible manner. Currently I've tried using a simple round-robin partitioning in the target topic to see if the distribution is a bit more even in that case.

The reason why this is a problem is that this data will be put on an object storage via e.g. Kafka Connect. If I want this data stored in e.g. a daily format, then old data coming in all the time would cause buffers that should've been closed a long time ago to be kept open, increasing memory consumption. It doesn't make sense to use any kind of windowing for late data in this case, seeing how it's not a real-time aggregation but simply consumption of historical data.

Ideally for the new partitioning I'd want something like: given the number of partitions in the target topic is a multiple of the number of partitions in the source topic, have records in partition 0 go to either partition 0 or 9, from 1 to either 1 or 10, etc. (perhaps even randomly)

This would require some more work in the form of a custom partitioner, but I can't foresee if this would cause other problems down the line.
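The mapping idea above can be sketched as a plain function, independent of any Kafka API. This is only an illustration under stated assumptions: LaneMapping and pickTarget are hypothetical names, the source partition number is assumed to be available at the point where the target is chosen, and the target partition count must be a whole multiple of the source count.

```java
import java.util.Random;

class LaneMapping {

    // Picks a target partition for a record that came from sourcePartition.
    // With targetCount = k * sourceCount, the candidates are
    // sourcePartition, sourcePartition + sourceCount, ..., up to k candidates.
    static int pickTarget(int sourcePartition, int sourceCount, int targetCount, Random random) {
        if (targetCount % sourceCount != 0) {
            throw new IllegalArgumentException(
                    "target partition count must be a multiple of the source partition count");
        }
        int lanes = targetCount / sourceCount;
        return sourcePartition + sourceCount * random.nextInt(lanes);
    }

    public static void main(String[] args) {
        Random random = new Random();
        for (int source = 0; source < 9; source++) {
            System.out.println(source + " -> " + pickTarget(source, 9, 18, random));
        }
    }
}
```

One design caveat: choosing the candidate randomly means two records with the same id can end up in different target partitions, so per-key ordering is no longer guaranteed.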

I've also tried setting the partition Id header ("kafka_partitionId" - as far as I know, documentation here isn't quite easy to find) but it is seemingly not used.

I'll investigate a bit further...

Final edit:

For what it's worth, the problem boiled down to the following two issues:

- My original data, written by Spring Cloud Stream, was partitioned differently from how a vanilla Kafka Producer (which Kafka Streams uses internally) would partition it. This led to data jumping all over the place from a "record-time" point of view.

- Due to the above, I had to choose a number of partitions that was a multiple of the previous number of partitions, as well as use a custom partitioner which does it the "Spring Cloud Stream" way.

The requirement that the new number be a multiple of the previous one is a result of modular arithmetic. If I wished to have deterministic partitioning for my existing data, having a multiple would allow data to go into one of two possible new partitions as opposed to only one as in the previous case.

E.g. with 9 -> 18 partitions:

- id 1 -> previously hashed to partition 0, now hashes to either 0 or 9 (mod 18)

- id 2 -> previously hashed to partition 1, now hashes to either 1 or 10 (mod 18)

Hence my requirement for higher parallelism is met and the data inside a single partition is ordered as desired, since a target partition is only supplied from at most one source partition.
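The modular-arithmetic argument can be checked with a small sketch. It assumes the old partition was computed as the Java hashCode of the id modulo the partition count, with Math.abs folding negative values (an assumption, as are the hypothetical names PartitionMath, partitionFor and staysInLane).

```java
import java.util.UUID;

class PartitionMath {

    // hashCode-based partition choice; Math.abs is an assumption for negative hash codes.
    static int partitionFor(String key, int numPartitions) {
        return Math.abs(key.hashCode() % numPartitions);
    }

    // True if the key's partition in the target topic is one of the partitions
    // that are congruent to its source partition modulo sourceCount.
    static boolean staysInLane(String key, int sourceCount, int targetCount) {
        int source = partitionFor(key, sourceCount);
        int target = partitionFor(key, targetCount);
        return target % sourceCount == source;
    }

    public static void main(String[] args) {
        int violations18 = 0;
        int violations12 = 0;
        for (int i = 0; i < 10_000; i++) {
            String key = UUID.randomUUID().toString();
            if (!staysInLane(key, 9, 18)) violations18++;
            if (!staysInLane(key, 9, 12)) violations12++;
        }
        System.out.println("9 -> 18 violations: " + violations18);
        System.out.println("9 -> 12 violations: " + violations12);
    }
}
```

With 18 target partitions the check holds for every key, because a remainder modulo 18 reduced modulo 9 equals the remainder modulo 9. With 12 target partitions it does not hold in general: a hash value of 12 maps to partition 3 of 9 but to partition 0 of 12.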

I'm sure there might have been a simpler way to go about this all, but this works for now.

For further context/info, see also this Q&A.