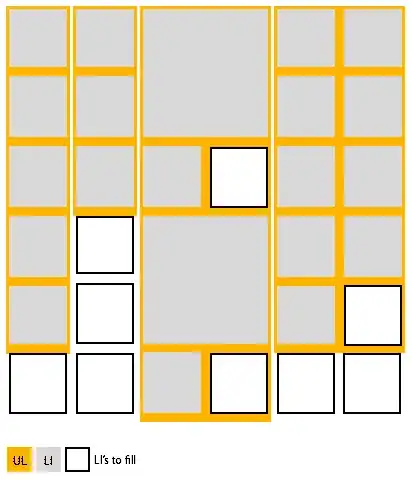

I am trying to figure out how to distinguish a character or number from a simple line, so that I can filter irrelevant input out before passing it to Tesseract OCR. My idea is to use `connectedComponentsWithStats` to get the bounding box of each component and then count how many white and black pixels that box contains. By thresholding this black/white (BW) ratio, I want to find the almost completely filled boxes, since those are the lines I want to filter out.

My input is a large number of images, each containing only a single rotated letter/character or a single rotated line. I can rotate them using the minimum-area rectangle, but unfortunately I can't crop them. Do you have any hints, or perhaps a better idea, for checking the BW ratio inside my rotated box?

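```python
import cv2
import numpy as np

# rotated_without_box: the rotated, binarized single-channel (uint8) image from the previous step.
# connectivity/ltype are passed as keywords: the second positional parameter is the `labels` output array.
analysis_of_single_groups = cv2.connectedComponentsWithStats(rotated_without_box, connectivity=4, ltype=cv2.CV_32S)
(totalLabels_s_g, label_ids_s_g, values_s_g, centroid_s_g) = analysis_of_single_groups

# Label 0 is the background, so start at 1
for i in range(1, totalLabels_s_g):
    x = values_s_g[i, cv2.CC_STAT_LEFT]
    y = values_s_g[i, cv2.CC_STAT_TOP]
    w = values_s_g[i, cv2.CC_STAT_WIDTH]
    h = values_s_g[i, cv2.CC_STAT_HEIGHT]
    print("x: " + str(x))

    # Axis-aligned bounding box of component i
    crop_img = rotated_without_box[y:y + h, x:x + w].copy()
    cv2.imwrite("ta/cropped_" + str(i) + ".png", crop_img)

    number_of_black_pix = np.sum(crop_img == 0)  # background pixels (value 0)
    number_of_white_pix = np.sum(crop_img > 0)   # foreground pixels (nonzero, as counted by connectedComponents)

    # Never zero: component i itself lies inside its bounding box
    bw_ratio = number_of_black_pix / number_of_white_pix
    is_line = bw_ratio < 0.9  # few background pixels -> box almost filled -> probably a line
```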