I have trained DeepLabv3+ to segment a car charging socket, and I am now able to get 3D information about the object in the real-world environment using a RealSense depth camera. However, I also need the orientation of the object. After searching, I found that some networks (PVNet, PoseCNN, etc.) directly output 6D pose information. Would it be possible to obtain the orientation without using a 6D pose estimation network? An output image is shown below. Based on it, would it be possible to calculate the orientation of the object?

1 Answer

It seems like the mask is quite accurate. You can fit a circle to the mask and compute the difference between the full circle and the mask. This difference gives you the location of the upper part of the plug.

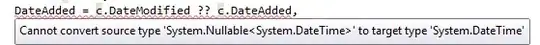

Let's use MATLAB for simplicity. Similar operations are available in Python as well.

First, we work with the mask only:

```matlab
img = imread('https://i.stack.imgur.com/ttpF8.png');
bw = min(img, [], 3) > 128; % working with a binary mask
```
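For readers working in Python, a rough NumPy equivalent of this thresholding step might look like the following. It is a sketch that assumes the image has already been loaded as an H×W×3 `uint8` array (for example with Pillow or OpenCV, not shown); the function name `to_binary_mask` is hypothetical.

```python
import numpy as np

def to_binary_mask(img: np.ndarray, threshold: int = 128) -> np.ndarray:
    """Binarize an image: a pixel is 'on' only if every channel exceeds threshold.

    Mirrors the MATLAB line `bw = min(img, [], 3) > 128;`.
    """
    if img.ndim == 2:
        # Already single-channel: threshold directly
        return img > threshold
    # Minimum over the channel axis, then threshold
    return img.min(axis=2) > threshold

# Tiny synthetic example: one white pixel, one red pixel
img = np.zeros((1, 2, 3), dtype=np.uint8)
img[0, 0] = (255, 255, 255)  # white: all channels high, so True
img[0, 1] = (255, 0, 0)      # red: minimum channel is 0, so False
bw = to_binary_mask(img)
print(bw)  # [[ True False]]
```

Taking the minimum across channels keeps only pixels that are bright in every channel, which is what isolates the white mask from colored overlays.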

We use imfindcircles to find the center and the radius of a circle approximating the detected mask:

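```matlab
approx_rad = sqrt(sum(bw(:))/pi); % an approximation of the radius
[centers, radii] = imfindcircles(bw, [floor(approx_rad*0.8), ceil(approx_rad*2)]); % detect circles
c = centers(1, :); r = radii(1); % imfindcircles lists the strongest circle first
```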
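`imfindcircles` is MATLAB's Hough-transform circle detector. As a rough Python stand-in, and explicitly a different technique, the sketch below computes the same area-equivalent radius as `approx_rad` and fits a circle to boundary points by algebraic least squares (the Kåsa fit). One caveat: unlike the Hough transform, a plain least-squares fit is pulled toward the straight edge of the cut-off upper part, so in practice you would feed it only points on the circular arc. The function names are hypothetical.

```python
import numpy as np

def area_equivalent_radius(bw: np.ndarray) -> float:
    """Radius of a disk with the same area as the mask (MATLAB: sqrt(sum(bw(:))/pi))."""
    return float(np.sqrt(bw.sum() / np.pi))

def fit_circle_kasa(xs, ys):
    """Algebraic least-squares circle fit of x^2 + y^2 + D*x + E*y + F = 0.

    Returns (cx, cy, r). Needs at least 3 non-collinear points.
    """
    xs = np.asarray(xs, dtype=float)
    ys = np.asarray(ys, dtype=float)
    # Linear system A @ [D, E, F] = -(x^2 + y^2)
    A = np.column_stack([xs, ys, np.ones_like(xs)])
    b = -(xs ** 2 + ys ** 2)
    (D, E, F), *_ = np.linalg.lstsq(A, b, rcond=None)
    cx, cy = -D / 2.0, -E / 2.0
    r = np.sqrt(cx ** 2 + cy ** 2 - F)
    return float(cx), float(cy), float(r)

# Points on only part of a circle centred at (50, 40) with radius 20,
# similar to an arc of the socket outline
theta = np.linspace(0.3, 2.8, 30)
xs = 50 + 20 * np.cos(theta)
ys = 40 + 20 * np.sin(theta)
cx, cy, r = fit_circle_kasa(xs, ys)
print(round(cx, 3), round(cy, 3), round(r, 3))
```

Because the fit is linear in `D`, `E`, `F`, it needs no initial guess, which is why it is a common quick alternative when a Hough detector is not at hand.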

We now want to produce a binary mask that represents this "ideal" circle. Note that we shrink the ideal mask slightly (by 5 pixels) to be more robust to inaccuracies at the boundaries:

```matlab
[x, y] = meshgrid(1:size(bw,2), 1:size(bw,1));
mask = ((x-c(1)).^2 + (y-c(2)).^2) <= (r-5).^2;
```
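A NumPy version of the ideal-circle mask could look like this. It is a sketch: `ideal_circle_mask` is a hypothetical name, and note that NumPy indices are 0-based, whereas the MATLAB `meshgrid(1:w, 1:h)` above starts at 1, so circle centers coming from MATLAB would be off by one pixel.

```python
import numpy as np

def ideal_circle_mask(shape, cx, cy, r, margin=5.0):
    """Boolean disk centred at (cx, cy) with its radius shrunk by `margin` pixels.

    `shape` is (height, width); x is the column index, y the row index (0-based).
    """
    h, w = shape
    x, y = np.meshgrid(np.arange(w), np.arange(h))  # same layout as MATLAB's meshgrid
    return (x - cx) ** 2 + (y - cy) ** 2 <= (r - margin) ** 2

mask = ideal_circle_mask((101, 101), cx=50, cy=50, r=30, margin=5)
print(mask.shape, mask[50, 50], mask[50, 76])
```

With `r=30` and `margin=5`, the effective radius is 25, so column 75 on the center row is still inside the disk while column 76 is not.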

We can inspect the difference between the ideal mask and the original one:

```matlab
upper_part = mask & ~bw;
imshow(upper_part);
```

Note that the upper part of the plug is detected quite robustly.
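The same difference step in NumPy is a single boolean expression. The example below is illustrative only: instead of the real segmentation, it uses a made-up mask (a disk whose top is cut off by a horizontal line) to show that `mask & ~bw` keeps only the missing upper region.

```python
import numpy as np

h, w = 101, 101
cx, cy, r = 50, 50, 30
y, x = np.mgrid[0:h, 0:w]  # row (y) and column (x) index grids
disk = (x - cx) ** 2 + (y - cy) ** 2 <= r ** 2

# Synthetic "detected" mask: the disk with its top removed above row 35,
# loosely imitating a socket whose upper part is not in the segmentation
bw = disk & (y >= 35)

# Ideal disk shrunk by 5 px, as in the MATLAB code
mask = (x - cx) ** 2 + (y - cy) ** 2 <= (r - 5) ** 2

# Pixels inside the ideal circle but missing from the detection
upper_part = mask & ~bw
rows = np.nonzero(upper_part)[0]
print(upper_part.sum(), rows.min(), rows.max())
```

All surviving pixels lie between the top of the shrunk disk (row 25) and the cut line (row 34), i.e. exactly the region the segmentation left out.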

We can use regionprops to get the "center of mass" of the upper part of the plug:

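```matlab
props = regionprops(upper_part, 'Centroid', 'Area');
[~, k] = max([props.Area]); % keep the largest blob in case of small spurious regions
black_center = props(k).Centroid;
```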
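In NumPy, the centroid of a mask is just the mean of the row and column indices of its `True` pixels. This sketch assumes `upper_part` contains a single blob; if it can contain several, you would first label connected components (for example with `scipy.ndimage.label`) and keep the largest, which is what the MATLAB code does with `Area`. The name `mask_centroid` is hypothetical.

```python
import numpy as np

def mask_centroid(mask: np.ndarray):
    """Return the (x, y) centroid of the True pixels in a 2D boolean mask.

    Coordinates are 0-based; MATLAB's regionprops reports the same quantity
    in 1-based coordinates.
    """
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask is empty")
    return float(cols.mean()), float(rows.mean())

m = np.zeros((10, 10), dtype=bool)
m[2:4, 5:8] = True  # a 2 x 3 block: rows 2-3, columns 5-7
print(mask_centroid(m))  # (6.0, 2.5)
```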

Now you have two 2D points: the center of the plug (c) and the center of the upper part (black_center). The direction of the line connecting them gives you the in-plane orientation of the plug, i.e. its rotation within the image.

```matlab
imshow(bw, 'border', 'tight');
hold on;
scatter(c(1), c(2), '+b'); % center of the plug in blue
scatter(black_center(1), black_center(2), '+m'); % the upper part in magenta
quiver(c(1), c(2), black_center(1)-c(1), black_center(2)-c(2), 0, 'LineWidth', 2); % orientation of the plug (scale 0 disables auto-scaling)
viscircles(c, r, 'linestyle', '-', 'color', [.5, .5, .5], 'linewidth', .5);
```
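To turn the arrow into a number, a minimal sketch computes its angle with `atan2`. The convention used here (degrees counter-clockwise from the image's +x axis, with the image y axis flipped so that "up" is positive) is an assumption; choose whichever reference direction your application needs. The function name is hypothetical, and the result is only the rotation within the image plane.

```python
import math

def inplane_angle_deg(center, upper):
    """Angle in degrees of the vector center -> upper, counter-clockwise from +x.

    Image rows grow downwards, so dy is negated to get the usual math convention.
    """
    dx = upper[0] - center[0]
    dy = upper[1] - center[1]
    return math.degrees(math.atan2(-dy, dx))

print(inplane_angle_deg((100, 100), (100, 60)))   # 90.0 (upper part straight up)
print(inplane_angle_deg((100, 100), (140, 100)))  # 0.0 (pointing right)
```

`atan2` is used instead of `atan(dy/dx)` because it handles vertical vectors and distinguishes all four quadrants.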


- Could you describe more specifically how to get the orientation? I do have x, y, z information, but I couldn't figure out how to get the angle for each axis. – Dan Py Jul 27 '22 at 19:32

- @DanPy please see my update. – Shai Jul 28 '22 at 07:53

- This would give me the orientation around the y axis. To get the orientation around the other axes as well, I found that OpenCV's solvePnP could solve it, but I need at least 5 points to solve the orientation problem. Would it be easier to segment the small holes to get at least 5 points, or would you suggest a better solution? – Dan Py Jul 28 '22 at 17:44

- @DanPy localizing circles/ellipses in images usually gives very accurate localization. I would give it a try. – Shai Jul 28 '22 at 17:50

- Great. I am really a beginner at computer vision tasks. Is it worth training a neural network to find those small holes, or do you think it is less work to find them with traditional computer vision? – Dan Py Jul 28 '22 at 17:54

- @DanPy once you have localized the plug region, you only need to look inside it. Try "classical" methods before training networks. – Shai Jul 28 '22 at 17:56
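Regarding the question in the comments about going from x, y, z information to angles: one way to lift the answer's two 2D points into 3D is sketched below. It assumes an ideal pinhole camera with no lens distortion and a depth image already aligned to the color image; each pixel is back-projected with the camera intrinsics and the 3D vector between the two points is taken. The intrinsic values and depths are made-up placeholders (the RealSense SDK reports the real intrinsics for your camera), and `deproject` is a hypothetical helper. A single direction vector fixes only two of the three rotational degrees of freedom, so this is not a full 3D orientation on its own.

```python
import numpy as np

def deproject(u, v, depth, fx, fy, ppx, ppy):
    """Pinhole back-projection of pixel (u, v) at `depth` into camera coordinates.

    Assumes no lens distortion; fx, fy are focal lengths in pixels and
    (ppx, ppy) is the principal point. Output units match `depth`.
    """
    x = (u - ppx) * depth / fx
    y = (v - ppy) * depth / fy
    return np.array([x, y, depth], dtype=float)

# Hypothetical intrinsics and measurements, for illustration only
fx, fy, ppx, ppy = 600.0, 600.0, 320.0, 240.0
p_center = deproject(320, 240, 0.50, fx, fy, ppx, ppy)  # plug centre, 0.50 m away
p_upper = deproject(320, 180, 0.48, fx, fy, ppx, ppy)   # upper part, slightly closer

# Unit vector from the plug centre towards its upper part, in the camera frame
direction = p_upper - p_center
direction /= np.linalg.norm(direction)
print(np.round(direction, 3))
```

The resulting vector tilts toward the camera (negative z) because the upper part was given a smaller depth, which is the kind of out-of-plane information the 2D angle alone cannot capture.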