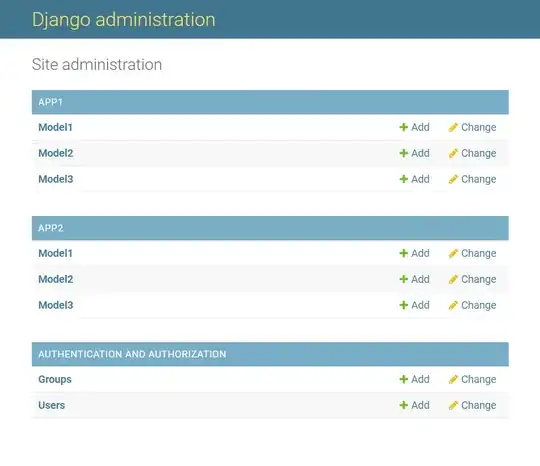

I have hundreds of ID card images, some of which are shown below:

(Disclaimer: I downloaded the images from the web; all rights, if any, belong to their respective authors.)

As you can see, they all differ in brightness, perspective angle, distance, and card orientation. I'm trying to extract only the rectangular card area and save it as a new image. From what I've read, I need to convert the image to grayscale and apply some thresholding method, then run cv2.findContours() on the thresholded image to get the contour points. I have tried many methods and settled on cv2.adaptiveThreshold(), because it computes the threshold from each pixel's local neighbourhood, so I don't have to set a threshold value manually for each image. However, when I apply it to the images, I don't get what I want. For example:

My desired output should look like this:

It seems this also requires an affine transformation to straighten the card area (as in the Obama case), but I find that part difficult to understand. If that's possible, I'd then extract the image and save it separately.

Is there any other method or algorithm that can achieve what I want? It should cope with different lighting conditions and card orientations. I am hoping for a one-size-fits-all solution, given that there will be only one rectangular ID card in each image. Please guide me through this with whatever you think will be of help.

Note that I can't use a CNN as an object detector; the solution must be based purely on image processing. Thank you.

EDIT: The code for the above results is pretty simple:

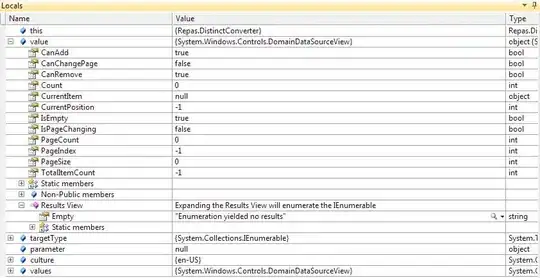

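    import cv2

    # `args` (parsed command-line arguments) and ResizeWithAspectRatio
    # (a display-resizing helper) are defined elsewhere and not shown here.
    image = cv2.imread(args["image"])
    gray_img = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Inverted adaptive threshold: Gaussian-weighted 51x51 neighbourhood, offset C = 9
    thresh_img = cv2.adaptiveThreshold(gray_img, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                       cv2.THRESH_BINARY_INV, 51, 9)

    # findContours returns 2 values in OpenCV 2.x/4.x and 3 values in 3.x
    cnts = cv2.findContours(thresh_img, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = cnts[0] if len(cnts) == 2 else cnts[1]

    area_threshold = 1000  # ignore contours with an area below this (in pixels)
    for cnt in cnts:
        if cv2.contourArea(cnt) > area_threshold:
            x, y, w, h = cv2.boundingRect(cnt)
            print(x, y, w, h)
            cv2.rectangle(image, (x, y), (x + w, y + h), (36, 255, 12), 3)

    resize = ResizeWithAspectRatio(image, width=640)
    cv2.imshow("image", resize)
    cv2.waitKey()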

EDIT 2:

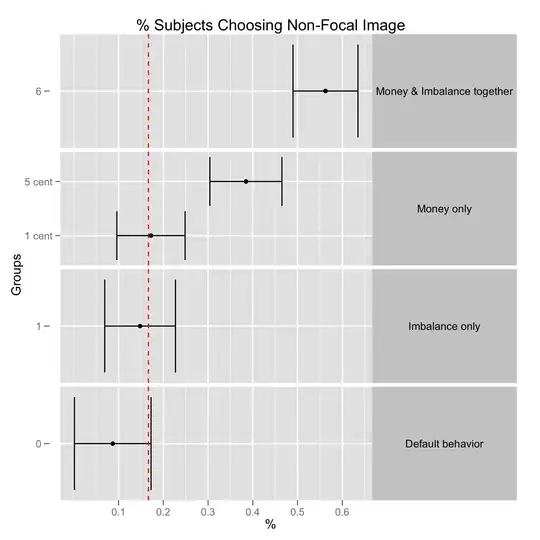

I provide the gradient magnitude images below:

Does this mean I must handle both low and high intensity values? I ask because the edges of the ID cards at the bottom are barely noticeable.