PROBLEM DESCRIPTION

I'm new to running dask-distributed with TPOT (although fairly seasoned in TPOT on a single machine). I'm trying to set up two worker machines (separate computers) to work with my TPOT run. However, only one worker is actually doing anything, even though both are connected to the scheduler.

STEPS TAKEN/REPRODUCE

Windows 10, Python 3.7.9, TPOT==0.11.7, scikit-learn==1.0.2, dask==2022.2.0, distributed==2022.2.0, numpy==1.21.4, pandas==1.2.5

- I fire up a Powershell window on my main computer, which will also run the script (I'm sure the command prompt would do the same thing).

- I run the command `dask-scheduler`.

- I open a second Powershell window and run the command `dask-worker tcp://127.0.0.1:8786`. This connects the main computer to the scheduler (running on `localhost` as a worker).

- I open a Powershell window on my second computer and run the command `dask-worker tcp://172.16.1.113:8786`. This connects the second computer to the scheduler.

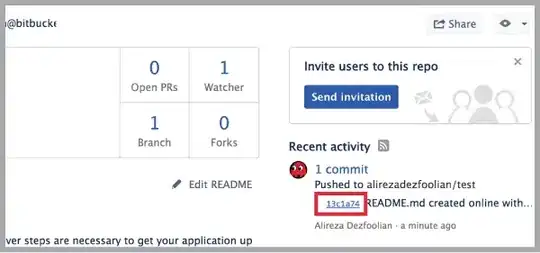

When I refer to http://localhost:8787/status or the scheduler's Powershell window, I can see both workers connected and their resources:
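The same worker list can also be read from Python instead of the dashboard. The following is a minimal sketch, assuming the scheduler address used in the steps above. The helper names `summarize_workers` and `print_cluster_summary` are made up for illustration, and the `nthreads` and `memory_limit` keys are assumed to be present in what `Client.scheduler_info()` reports per worker (they are read with `.get` in case a given version omits them):

```python
def summarize_workers(scheduler_info):
    """Return one (address, nthreads, memory_limit_gib) tuple per connected worker."""
    rows = []
    for address, info in sorted(scheduler_info.get("workers", {}).items()):
        nthreads = info.get("nthreads")
        mem = info.get("memory_limit")
        mem_gib = round(mem / 2**30, 2) if mem else None
        rows.append((address, nthreads, mem_gib))
    return rows


def print_cluster_summary(scheduler_address="tcp://172.16.1.113:8786"):
    """Connect to the scheduler and print what it knows about each worker."""
    # Imported here so summarize_workers can be used without dask installed.
    from dask.distributed import Client

    client = Client(scheduler_address)
    try:
        for address, nthreads, mem_gib in summarize_workers(client.scheduler_info()):
            print(f"{address}: {nthreads} threads, {mem_gib} GiB memory limit")
    finally:
        client.close()
```

Calling `print_cluster_summary()` on the main machine should list two addresses if both workers are registered with the scheduler.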

Now, I want to run a TPOT session with Dask. I've created a minimal working example code below for debugging. This dataset closely resembles my use case dataset's shape, hence the dimensions/weight imbalances:

```python
from dask.distributed import Client, Worker
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split, TimeSeriesSplit
from tpot import TPOTClassifier

# ------------------------------------------------------------------------------------------------ #
# START WORKER/CLIENT IN SCRIPT? #
# ------------------------------------------------------------------------------------------------ #
# client = Client("tcp://172.16.1.113:8786")
# worker = Worker("tcp://172.16.1.113:8786")
# # NOTE: I start a worker in a second command prompt instead, as doing it here doesn't work.

# ------------------------------------------------------------------------------------------------ #
# MAKE CLASSIFICATION DATASET #
# ------------------------------------------------------------------------------------------------ #
X, y = make_classification(n_samples=100000,
                           n_features=538,
                           n_informative=200,
                           n_classes=3,
                           # One weight per class (classes 0, 1, 2), passed as an array-like
                           weights=[0.996983388,
                                    0.001515257,
                                    0.001501355,
                                    ],
                           random_state=42,
                           )

# ------------------------------------------------------------------------------------------------ #
# TRAIN TEST SPLIT #
# ------------------------------------------------------------------------------------------------ #
X_train, X_test, y_train, y_test = train_test_split(X, y,
                                                    test_size=0.15,
                                                    )
print(X_train.shape, X_test.shape, y_train.shape, y_test.shape)

# ------------------------------------------------------------------------------------------------ #
# CREATE THE TPOT CLASSIFIER #
# ------------------------------------------------------------------------------------------------ #
tpot = TPOTClassifier(generations=100,
                      population_size=40,
                      offspring_size=None,
                      mutation_rate=0.9,
                      crossover_rate=0.1,
                      scoring='balanced_accuracy',
                      cv=TimeSeriesSplit(n_splits=3),  # Using time series split here
                      subsample=1.0,
                      # n_jobs=-1,
                      max_time_mins=None,
                      max_eval_time_mins=10,  # 5
                      random_state=None,
                      # config_dict=classifier_config_dict,
                      template=None,
                      warm_start=False,
                      memory=None,
                      use_dask=True,
                      periodic_checkpoint_folder=None,
                      early_stop=2,
                      verbosity=2,
                      disable_update_check=False)

results = tpot.fit(X_train, y_train)
print(tpot.score(X_test, y_test))
tpot.export('tpot_pipeline.py')

# Now check http://localhost:8787/status and resources on both worker machines
```

When I run this script (on the main machine), the main machine kicks in and starts using up all the resources as it should:

...HOWEVER, the second machine isn't touched at all. I don't have a screenshot of it, but the CPU and memory are not being used at all by this process.
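One way to separate a TPOT problem from a cluster problem is to hand the cluster some trivial tasks and see where they run. A minimal sketch, assuming the scheduler address from the steps above; `where_am_i`, `count_by_worker`, and `check_task_placement` are hypothetical helper names, not part of dask or TPOT:

```python
import time
from collections import Counter


def where_am_i(_):
    """Run on a worker: sleep briefly, then report that worker's address."""
    from dask.distributed import get_worker

    time.sleep(0.5)  # slow enough that the scheduler has a reason to spread tasks
    return get_worker().address


def count_by_worker(addresses):
    """Count how many tasks each worker address executed."""
    return dict(Counter(addresses))


def check_task_placement(scheduler_address="tcp://172.16.1.113:8786", n_tasks=32):
    """Submit n_tasks dummy tasks and return {worker_address: tasks_executed}."""
    from dask.distributed import Client

    client = Client(scheduler_address)
    try:
        # pure=False stops dask from collapsing identical calls into one task.
        futures = client.map(where_am_i, range(n_tasks), pure=False)
        return count_by_worker(client.gather(futures))
    finally:
        client.close()
```

Calling `check_task_placement()` from the main machine returns a dict mapping worker addresses to task counts. If both worker addresses appear, the cluster itself is distributing work, and the question narrows to how the TPOT script reaches that cluster.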

POSSIBILITIES

- Could it be a memory issue? My main machine has 16 GB of RAM, the second has 8 GB. I see that at least 5 GB is being used on the main machine when I start the script. Maybe it's exceeding the limit of the second machine, therefore not being used at all?

- Could it be that I'm not setting it up properly with the steps as I've described them above? Note, I didn't use `Client()` anywhere, but when I tried that in the script (instead of using a separate `dask-worker` Powershell window on the main machine), only the second machine was working. So that leads me to think it's NOT a memory issue, and I'm just not configuring things properly.

- I'm not explicitly setting `n_jobs` in TPOT, or `n_procs`/`n_threads_per_proc` (or whatever the parameters are) when setting up the workers? I would assume this would mean that it should use up all available resources, which it's clearly doing on the main machine?

- Something else? (as I'm new to clustered TPOT runs)
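For the memory possibility, a rough back-of-the-envelope figure for the raw data may help. This sketch assumes the feature matrix is dense `float64` (8 bytes per value), which is what `make_classification` returns; `array_size_gb` is a hypothetical helper. It counts a single copy of the array only, so it is a lower bound on what a worker holds, not a measurement:

```python
def array_size_gb(n_rows, n_cols, bytes_per_value=8):
    """Size of a dense 2-D array in decimal gigabytes (float64 = 8 bytes per value)."""
    return n_rows * n_cols * bytes_per_value / 1e9


full = array_size_gb(100_000, 538)   # X as generated in the example
train = array_size_gb(85_000, 538)   # X_train after the 15% test split
print(f"X: {full:.2f} GB, X_train: {train:.2f} GB")
# prints "X: 0.43 GB, X_train: 0.37 GB"
```

A single copy of the training data is therefore well under the 8 GB on the second machine, although pipelines under evaluation and intermediate copies add to this.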