I wrote a class called `Enumerable<T>` that implements `IEnumerable<T>` and `IEnumerator<T>` by repeatedly calling a lambda, as an alternative to writing a generator (an iterator method). The lambda returns `null` to signal the end of the sequence. This lets me write code such as the following:

```csharp
using System;
using System.Collections.Generic;

static class Program
{
    static IEnumerable<int> GetPrimes()
    {
        var i = 0;
        var primes = new int[] { 2, 3, 5, 7, 11, 13 };
        return new Enumerable<int>(() => i < primes.Length ? primes[i++] : null);
    }

    static void Main(string[] args)
    {
        foreach (var prime in GetPrimes())
        {
            Console.WriteLine(prime);
        }
    }
}
```
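For comparison, here is a sketch of the same `GetPrimes` written as an ordinary C# iterator, i.e. the generator that the lambda-based class replaces. The compiler builds the `IEnumerable<int>`/`IEnumerator<int>` state machine itself. The class name `IteratorComparison` is hypothetical and chosen only for this illustration.

```csharp
using System;
using System.Collections.Generic;

static class IteratorComparison
{
    // Iterator ("generator") version: each yield return produces one element,
    // and falling off the end of the method ends the sequence.
    public static IEnumerable<int> GetPrimes()
    {
        var primes = new int[] { 2, 3, 5, 7, 11, 13 };
        foreach (var p in primes)
        {
            yield return p;
        }
    }

    static void Main()
    {
        foreach (var prime in GetPrimes())
        {
            Console.WriteLine(prime);
        }
    }
}
```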

Here is the implementation of this class (with some of the code snipped out for brevity and clarity):

```csharp
using System;
using System.Collections.Generic;

class Enumerable<T> : IEnumerable<T>, IEnumerator<T> where T : struct
{
    public IEnumerator<T> GetEnumerator()
    {
        return this;
    }

    public T Current { get; private set; }

    public bool MoveNext()
    {
        T? current = _next();
        if (current != null)
        {
            Current = (T)current;
        }
        return current != null;
    }

    public Enumerable(Func<T?> next)
    {
        _next = next ?? throw new ArgumentNullException(nameof(next));
    }

    private readonly Func<T?> _next;

    // ... (remaining IEnumerable/IEnumerator members snipped)
}
```
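For anyone who wants to compile the example, here is a self-contained sketch of the `where T : struct` version with the snipped members filled in. The filled-in members (the non-generic `GetEnumerator`, `IEnumerator.Current`, `Reset` and `Dispose`) are assumed boilerplate, not the original code; in particular, having `Reset` throw `NotSupportedException` is an assumption.

```csharp
#nullable enable
using System;
using System.Collections;
using System.Collections.Generic;

class Enumerable<T> : IEnumerable<T>, IEnumerator<T> where T : struct
{
    private readonly Func<T?> _next;

    public Enumerable(Func<T?> next)
    {
        _next = next ?? throw new ArgumentNullException(nameof(next));
    }

    // The object is its own enumerator, so each instance can be iterated only once.
    public IEnumerator<T> GetEnumerator() => this;
    IEnumerator IEnumerable.GetEnumerator() => this;

    public T Current { get; private set; }
    object IEnumerator.Current => Current;

    public bool MoveNext()
    {
        // With T : struct, T? is Nullable<T>, so null can mark the end of the sequence.
        T? current = _next();
        if (current.HasValue)
        {
            Current = current.Value;
        }
        return current.HasValue;
    }

    // Assumption: the lambda cannot be rewound, so Reset is not supported.
    public void Reset() => throw new NotSupportedException();

    public void Dispose() { }
}

static class Program
{
    internal static IEnumerable<int> GetPrimes()
    {
        var i = 0;
        var primes = new int[] { 2, 3, 5, 7, 11, 13 };
        return new Enumerable<int>(() => i < primes.Length ? primes[i++] : null);
    }

    static void Main()
    {
        foreach (var prime in GetPrimes())
        {
            Console.WriteLine(prime);
        }
    }
}
```

Because `GetEnumerator` returns `this`, enumerating the same instance twice yields nothing the second time. Calling `GetPrimes()` again creates a fresh instance with its own counter.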

Now here is the problem: when I change the constraint `where T : struct` to `where T : notnull` so that the class also supports reference types, I get the following errors on the `return` line of `GetPrimes`:

```
error CS0037: Cannot convert null to 'int' because it is a non-nullable value type
error CS1662: Cannot convert lambda expression to intended delegate type because some of the return types in the block are not implicitly convertible to the delegate return type
```

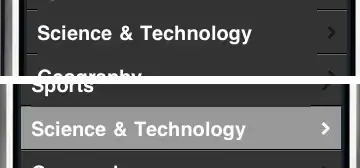

It seems that the `Enumerable<int>` constructor now expects a `Func<int>` rather than the `Func<int?>` I declared.
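That observation can be checked directly. The sketch below uses a hypothetical helper, `DelegateTypeOf`, whose parameter is declared as `Func<T?>` under a `notnull` constraint, and compares the runtime delegate type with `Func<int>` and `Func<string>`. For `int` the parameter really is `Func<int>`. For `string` the `?` is only a nullable annotation, so the delegate type is plain `Func<string>`.

```csharp
#nullable enable
using System;

static class DelegateTypeDemo
{
    // Hypothetical helper: returns the runtime type of the delegate passed as Func<T?>.
    public static Type DelegateTypeOf<T>(Func<T?> f) where T : notnull => f.GetType();

    static void Main()
    {
        // Value type: under 'notnull', T? is just int, so the parameter is Func<int>.
        Console.WriteLine(DelegateTypeOf<int>(() => 42) == typeof(Func<int>));        // True

        // Reference type: the '?' is an annotation only, so the runtime type is Func<string>.
        Console.WriteLine(DelegateTypeOf<string>(() => null) == typeof(Func<string>)); // True

        // DelegateTypeOf<int>(() => null); // would not compile: null cannot convert to int
    }
}
```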

I don't understand why, when `T` is `int`, `T?` is treated as `int` rather than `int?`. The documentation says this is the expected behavior for unconstrained type parameters, but what is the point of the `notnull` constraint if it doesn't let me treat `T` and `T?` as two different types?
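The documented rule is easiest to see by putting the two constraints side by side. In this sketch the method names `StructEmpty` and `NotNullEmpty` are hypothetical. With `where T : struct`, `T?` means `Nullable<T>`, so `null` is a legal return value. With `where T : notnull`, `T?` for a value type is plain `T`, so the only "empty" value available is `default(T)`, which is `0` for `int` but `null` for `string`. `notnull` adds nullable-annotation checks only; it does not turn `T?` into `Nullable<T>`.

```csharp
#nullable enable
using System;

static class NullableContrast
{
    // 'struct' constraint: T? is Nullable<T>, so returning null is allowed.
    public static T? StructEmpty<T>() where T : struct => null;

    // 'notnull' constraint: for a value type T? is just T, so default means default(T).
    public static T? NotNullEmpty<T>() where T : notnull => default;

    static void Main()
    {
        object? a = StructEmpty<int>();     // empty Nullable<int> boxes to null
        object? b = NotNullEmpty<int>();    // plain int 0, boxed
        object? c = NotNullEmpty<string>(); // reference type: null

        Console.WriteLine(a == null);            // True
        Console.WriteLine(b is int n && n == 0); // True
        Console.WriteLine(c == null);            // True
    }
}
```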