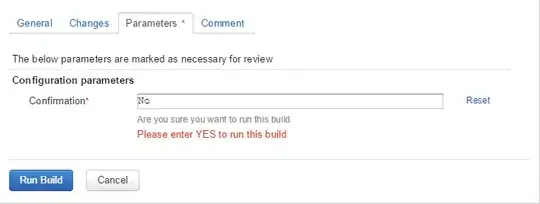

I am building a pipeline at work to copy files from SharePoint to Azure Blob Storage. After reading some documentation, I was able to create a pipeline that copies only certain files. However, I would like to automate this pipeline by using dynamic file paths to specify the source files in SharePoint. In other words, when I run the pipeline on 2022/07/14, I want it to get the files from the SharePoint folder named for that day, such as "Data/2022/07/14/files". I know how to do this with Power Automate, but my company does not want me to use Power Automate. The current pipeline looks like the attached image. Do I need to use parameters in the URL of the source dataset?
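To make the goal concrete, here is a small Python sketch of the path I want the pipeline to produce for a given run date. It only illustrates the desired result and is not pipeline code. The function name `source_folder_for` is made up, and the folder layout is assumed from my example above.

```python
from datetime import date


def source_folder_for(run_date: date) -> str:
    """Build the SharePoint folder path for the given run date."""
    # Assumed layout, taken from my example: Data/<yyyy>/<MM>/<dd>/files
    return f"Data/{run_date:%Y/%m/%d}/files"


# Running on 2022/07/14 should point at that day's folder.
print(source_folder_for(date(2022, 7, 14)))  # Data/2022/07/14/files
```

I am looking for the equivalent of this inside the pipeline itself, so the source path is computed at run time.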

Any help would be appreciated. Thank you.