Our stack is basically nginx + php-fpm. We have the same setup in many projects, and it works fine. Except in one project, where it keeps running out of memory.

The PHP-FPM container keeps accumulating memory until the php-fpm processes start getting OOMKilled, which ends with the pod being terminated.

We have a discrepancy between cloud monitoring and top in containers.

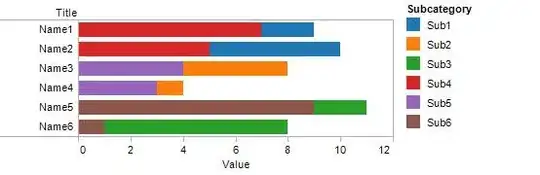

Monitoring (Google Cloud Metrics Explorer) shows ever-rising memory charts

while top inside the container reports very modest usage.

Notice that k9s also reports high usage for one of the pods in the %MEM/L column.

Meanwhile, the top output is very similar between "healthy" and "unhealthy" pods.

Notice that the number of php-fpm processes and their memory consumption (200-250) are roughly the same in both pods.

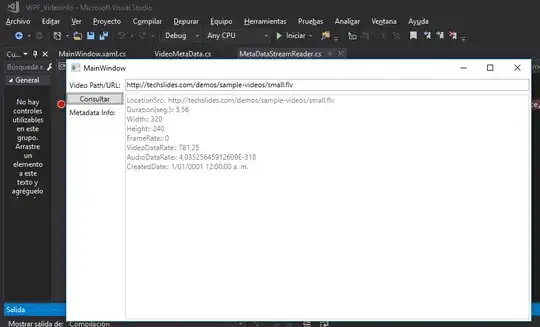

Unhealthy container log:

The number of PHP-FPM processes is capped at a small value. We monitored the memory consumption of individual processes over time, and they all look the same:

The memory is always freed by php-fpm after serving a request.
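For reference, this kind of per-process check can be reproduced roughly as follows. This is only a minimal sketch, not the exact tooling we used: it assumes a Linux `/proc` filesystem is readable inside the container and that the worker processes have a command name starting with `php-fpm`. The helper names `rss_kib` and `process_rss_by_name` are made up for illustration.

```python
import os


def rss_kib(status_text):
    """Return VmRSS in KiB parsed from the text of /proc/<pid>/status.

    Returns 0 when the field is missing (for example, kernel threads).
    """
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            # Line looks like: "VmRSS:      2048 kB"
            return int(line.split()[1])
    return 0


def process_rss_by_name(name="php-fpm", proc_root="/proc"):
    """Map PID -> resident set size (KiB) for processes whose comm starts with `name`.

    Assumption: the command name of the workers starts with "php-fpm".
    Note: summing these values double-counts pages shared between workers.
    """
    result = {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue
        try:
            with open(os.path.join(proc_root, entry, "comm")) as f:
                comm = f.read().strip()
            if not comm.startswith(name):
                continue
            with open(os.path.join(proc_root, entry, "status")) as f:
                result[int(entry)] = rss_kib(f.read())
        except OSError:
            # The process exited between listing and reading; skip it.
            continue
    return result
```

Calling `process_rss_by_name()` periodically and logging the per-PID values gives a rough time series to compare against the pod-level chart.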

We have noticed that the memory climb is driven by traffic. No traffic - flat chart.

It seems that if the pod does not receive traffic for a longer period of time, it starts to free the memory.

We noticed that we can simulate the problem by allocating shared memory. Except in the problematic container, we don't see any shared memory being allocated. ipcs is empty.

We noticed that the issue goes away when the application uses Memcached.

What is perplexing to us is where the hidden memory is, that is, the discrepancy between what the processes use and what the pod reports. How could we see the "dark" memory?
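One place such memory might show up is the container's cgroup accounting, which also counts page cache and kernel memory charged to the container, neither of which appears in the per-process figures from top. Below is a minimal sketch for dumping that breakdown from inside the container. It assumes the cgroup filesystem is mounted at the usual location and that the cgroup v2 key names (`anon`, `file`, `shmem`, `slab`, ...) apply; on cgroup v1 the file lives under `/sys/fs/cgroup/memory/` and the keys differ (for example `rss` and `cache`). The function names are hypothetical.

```python
# Usual locations of memory.stat inside a container: cgroup v2 first, then v1.
CANDIDATE_PATHS = (
    "/sys/fs/cgroup/memory.stat",
    "/sys/fs/cgroup/memory/memory.stat",
)

# cgroup v2 counters of interest (assumption: a v2 host; v1 names differ).
KEYS = ("anon", "file", "shmem", "slab", "kernel_stack", "sock")


def parse_memory_stat(text):
    """Parse the 'key value' lines of memory.stat into a dict of ints (bytes)."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def read_memory_stat(paths=CANDIDATE_PATHS):
    """Return parsed stats from the first readable path, or {} if none exist."""
    for path in paths:
        try:
            with open(path) as f:
                return parse_memory_stat(f.read())
        except OSError:
            continue
    return {}


def breakdown_mib(stats, keys=KEYS):
    """Return the selected counters converted from bytes to MiB."""
    return {k: stats[k] / (1024 * 1024) for k in keys if k in stats}
```

Running `breakdown_mib(read_memory_stat())` in a "healthy" and an "unhealthy" pod and comparing the `file` and `slab` values against `anon` would at least show which category the growth falls into.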

EDIT:

We did one more experiment, this time with CPU, with somewhat surprising results: