I am trying to find the center of several sets of 3D points on a sphere. Each set consists of three or more points that lie on the arc of a circle, but only approximately: the points are supplied by an object detection algorithm, so they carry some inherent error. This is where the difficulty lies for me. I cannot simply solve the equations exactly; instead I need to minimize the variance in radius about this center across all of the point sets.

Currently, I calculate a plane of best fit for each set of points. For each plane's normal, I compute the radius (perpendicular distance to the normal) of the points in each set and take the variance, which tells me which plane (that is, which normal or center of rotation) fits all three sets best. I do the same for the average of the three planes, and for the two planes left after throwing out the one that agrees least with the other two. This already gives me a pretty decent approximation.
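For reference, here is a minimal sketch of the approach described above. It assumes the sphere is centered at the origin, that each set is an `(N, 3)` NumPy array, and that the axis passes through the sphere's center; the function names are made up for illustration and are not taken from my actual code.

```python
import numpy as np

def fit_plane_normal(points):
    """Unit normal of the least-squares plane through an (N, 3) array of points."""
    centered = points - points.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction of least spread, which is the plane normal.
    _, _, vt = np.linalg.svd(centered)
    return vt[-1]

def radii_about_axis(points, axis):
    """Perpendicular distance of each point from the line through the
    origin (assumed sphere center) along `axis`."""
    axis = axis / np.linalg.norm(axis)
    along = points @ axis  # component of each point along the axis
    return np.linalg.norm(points - np.outer(along, axis), axis=1)

def total_radius_variance(point_sets, axis):
    """Sum of the per-set variances of radius about a candidate axis."""
    return sum(np.var(radii_about_axis(pts, axis)) for pts in point_sets)
```

Each candidate normal (one per plane, plus any averaged normal) can be scored with `total_radius_variance`, and the candidate with the lowest score is the one that currently gets picked.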

My question: does anyone know how to implement, in Python, a function that finds a normal vector through these points that minimizes the variance in radius across all sets? I suspect the result won't be far from my current approximation, but I am looking for the most accurate solution to this problem.
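To make the target concrete, below is a rough sketch of the objective I have in mind, handed to a general-purpose optimizer (`scipy.optimize.minimize` with the Nelder-Mead method). It assumes the sphere is centered at the origin and that the axis can be described by two spherical angles; the helper names are hypothetical, and this parameterization behaves poorly when the axis is close to the z pole. It is meant as an illustration of the problem, not a vetted solution.

```python
import numpy as np
from scipy.optimize import minimize

def angles_to_axis(angles):
    """Unit vector from polar angle theta and azimuth phi."""
    theta, phi = angles
    return np.array([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)])

def axis_to_angles(axis):
    """Polar angle and azimuth of a (not necessarily unit) vector."""
    axis = axis / np.linalg.norm(axis)
    return np.array([np.arccos(np.clip(axis[2], -1.0, 1.0)),
                     np.arctan2(axis[1], axis[0])])

def summed_radius_variance(angles, point_sets):
    """Sum over sets of the variance of perpendicular distance to the axis."""
    axis = angles_to_axis(angles)
    total = 0.0
    for pts in point_sets:
        along = pts @ axis
        radii = np.linalg.norm(pts - np.outer(along, axis), axis=1)
        total += np.var(radii)
    return total

def refine_axis(point_sets, initial_axis):
    """Refine an initial axis guess (e.g. a plane-fit normal) numerically."""
    result = minimize(summed_radius_variance, axis_to_angles(initial_axis),
                      args=(point_sets,), method="Nelder-Mead")
    return angles_to_axis(result.x), result.fun
```

The idea would be to start `refine_axis` from the best of my current plane-fit normals, so the optimizer only has to make a small local correction.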

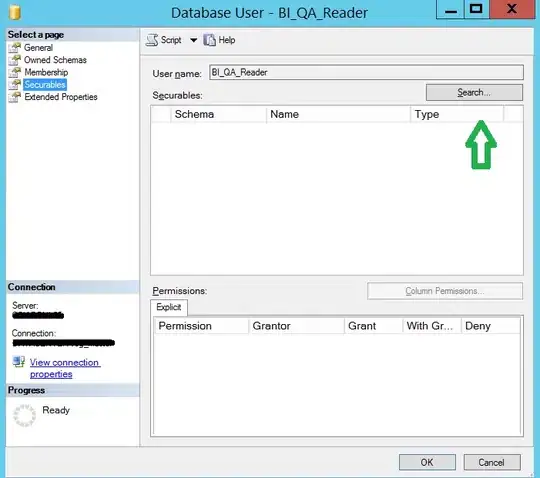

The picture above shows the results of what I am currently doing. The pink points are the points I am using, labeled 0, 1, 2 for each set of points. The blue dots are the normal vectors projected onto the surface of the sphere. The orange dot is the average of the three blue dots, projected onto the surface of the sphere. Ignore the green points; they are not relevant here. My code is currently telling me that axis (blue dot) 0 gives the least variance in radius for the data set as a whole, but I highly doubt it is the best-fitting point.