I am trying to use SciPy's `curve_fit` function to fit a Gaussian function to my data in order to estimate a theoretical power spectral density. However, `curve_fit` always returns the initial parameters (`p0=[1, 1, 1]`), which tells me that the fit did not work. I don't know where the issue is. I am using Python 3.9 (Spyder 5.1.5) from the Anaconda distribution on Windows 11. Here is a WeTransfer link to the data file: https://wetransfer.com/downloads/6097ebe81ee0c29ee95a497128c1c2e420220704110130/86bf2d

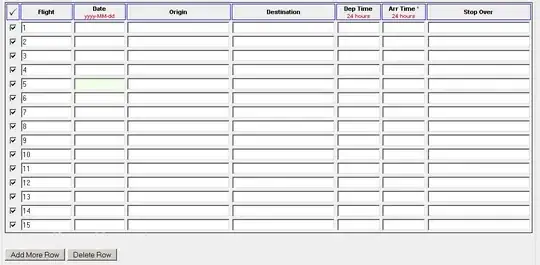

In the plot, the blue curve is my experimental PSD and the orange one is the result of the fit. My code is below. Can someone tell me what the issue is and how I can solve it?

    import numpy as np
    import math
    import matplotlib.pyplot as plt
    from scipy.optimize import curve_fit
    import scipy.constants as cst

    File = np.loadtxt('test5.dat')
    X = File[:, 1]
    Y = File[:, 2]
    f_sample = 50000

    time = []
    for i in range(1, len(X)+1):
        t = i*(1/f_sample)
        time = np.append(time, t)

    N = X.shape[0]  # number of observations
    N1 = int(N/2)
    delta_t = time[2] - time[1]
    T_mes = N * delta_t
    freq = np.arange(1/T_mes, (N+1)/T_mes, 1/T_mes)
    freq = freq[0:N1]
    fNyq = f_sample/2  # Nyquist frequency
    nb = 350
    freq_block = []

    # discrete Fourier transform
    X_ft = delta_t*np.fft.fft(X, n=N)
    X_ft = X_ft[0:N1]

    plt.figure()
    plt.plot(time, X)
    plt.xlabel('t [s]')
    plt.ylabel('x [micro m]')

    # Experimental power spectrum on both raw and blocked data
    PSD_X_exp = (np.abs(X_ft)**2/T_mes)
    PSD_X_exp_b = []
    STD_PSD_X_exp_b = []

    for i in range(0, N1+2, nb):
        freq_b = np.array(freq[i:i+nb])  # i-nb:i
        psd_b = np.array(PSD_X_exp[i:i+nb])
        freq_block = np.append(freq_block, (1/nb)*np.sum(freq_b))
        PSD_X_exp_b = np.append(PSD_X_exp_b, (1/nb)*np.sum(psd_b))

    STD_PSD_X_exp_b = np.append(STD_PSD_X_exp_b, PSD_X_exp_b/np.sqrt(nb))
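
The blocking step can also be written without an explicit loop. The following is only a sketch: `block_average` is a hypothetical helper name, the input arrays are synthetic stand-ins rather than the data from `test5.dat`, and it drops a trailing incomplete block instead of dividing a shorter block by `nb` as the loop above does.

```python
import numpy as np


def block_average(freq, psd, nb):
    """Average consecutive groups of nb points (hypothetical helper).

    A trailing block with fewer than nb points is dropped.
    """
    n_blocks = len(psd) // nb
    used = n_blocks * nb
    freq_b = np.asarray(freq[:used], dtype=float).reshape(n_blocks, nb).mean(axis=1)
    psd_b = np.asarray(psd[:used], dtype=float).reshape(n_blocks, nb).mean(axis=1)
    # Same error estimate as in the script above: block mean / sqrt(nb)
    std_b = psd_b / np.sqrt(nb)
    return freq_b, psd_b, std_b


# Synthetic stand-in data (an assumption, not the measured spectrum)
demo_freq = np.arange(1, 1001, dtype=float)
demo_psd = 1.0 / (1.0 + demo_freq**2)

demo_freq_b, demo_psd_b, demo_std_b = block_average(demo_freq, demo_psd, nb=350)
print(demo_freq_b.shape, demo_psd_b.shape, demo_std_b.shape)
```

With this form the three returned arrays always have the same length, which is what `curve_fit` needs for `xdata`, `ydata` and `sigma`.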

    plt.figure()
    plt.loglog(freq, PSD_X_exp)
    plt.legend(['Raw Experimental PSD'])
    plt.xlabel('f [Hz]')
    plt.ylabel('PSD')

    plt.figure()
    plt.loglog(freq_block, PSD_X_exp_b)
    plt.legend(['Experimental PSD after blocking'])
    plt.xlabel('f [Hz]')
    plt.ylabel('PSD')

    kB = cst.k  # Boltzmann constant [m^2kg/s^2K]
    T = 273.15 + 25  # Temperature [K]
    r = (2.8 / 2) * 1e-6  # Particle radius [m]
    v = 0.00002414 * 10 ** (247.8 / (-140 + T))  # Water viscosity [Pa*s]
    gamma = np.pi * 6 * r * v  # [m*Pa*s]
    Do = kB*T/gamma  # expected value for D
    f3db_o = 50000  # expected value for f3db
    fc_o = 300  # expected value for fc
    n = np.arange(-10, 11)

    def theo_spectrum_lorentzian_filter(x, D_, fc_, f3db_):
        PSD_theo = []
        for i in range(0, len(x)):
            # print(i)
            psd_theo = np.sum((((D_*Do)/2*math.pi**2)/((fc_*fc_o)**2+(x[i]+n*f_sample)
                              ** 2))*(1/(1+((x[i]+n*f_sample)/(f3db_*f3db_o))**2)))
            PSD_theo = np.append(PSD_theo, psd_theo)
        return PSD_theo
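
For comparison, the same kind of model can be written with NumPy broadcasting instead of a Python loop. This is a sketch under assumptions, not a replacement for the function above: the name `aliased_filtered_lorentzian` is hypothetical, the parameters are taken in physical units rather than rescaled by `Do`, `fc_o` and `f3db_o`, and the prefactor is written as `D / (2 * np.pi**2)`. That last point is an assumption about the intended formula: as written above, `(D_*Do)/2*math.pi**2` evaluates to `(D_*Do/2) * math.pi**2`, because `/` and `*` have the same precedence and are applied left to right.

```python
import numpy as np

f_sample = 50000.0            # sampling frequency [Hz], as in the script above
n = np.arange(-10, 11)        # alias orders summed over, as in the script above


def aliased_filtered_lorentzian(f, D, fc, f3db):
    """Aliased Lorentzian times a first-order low-pass filter (hypothetical name).

    D, fc and f3db are in physical units here (no rescaling).
    The prefactor D / (2 * pi**2) is an assumption about the intended formula.
    """
    f = np.asarray(f, dtype=float)
    # Rows: frequencies, columns: alias orders -> shape (len(f), len(n))
    shifted = f[:, None] + n[None, :] * f_sample
    lorentz = (D / (2 * np.pi**2)) / (fc**2 + shifted**2)
    lowpass = 1.0 / (1.0 + (shifted / f3db) ** 2)
    return np.sum(lorentz * lowpass, axis=1)


demo_f = np.array([10.0, 100.0, 1000.0])
demo_model = aliased_filtered_lorentzian(demo_f, D=1.0, fc=300.0, f3db=50000.0)
print(demo_model)
```

Because the function takes the whole frequency array at once, it can be passed to `curve_fit` directly, or wrapped in a small function that applies the `Do`, `fc_o` and `f3db_o` scaling first.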

    popt, pcov = curve_fit(theo_spectrum_lorentzian_filter, freq_block, PSD_X_exp_b,
                           p0=[1, 1, 1], sigma=STD_PSD_X_exp_b, absolute_sigma=True,
                           check_finite=True, bounds=(0.1, 10), method='trf', jac=None)

    D_, fc_, f3db_ = popt
    D1 = D_*Do
    fc1 = fc_*fc_o
    f3db1 = f3db_*f3db_o

    print('Diffusion constant D = ', D1, ' Corner frequency fc= ', fc1, 'f3db(diode,eff)= ', f3db1)
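
One check that may help narrow this down is to compare the model evaluated at `p0` with the data before calling `curve_fit`. In the script above the position axis is labelled in micrometres while `Do` is computed in SI units, so the two might differ by many orders of magnitude; this is a guess, not something verified against the data file. The sketch below uses synthetic data and a simplified two-parameter Lorentzian (`lorentzian`, `scaled_model`, `D_ref` and `fc_ref` are hypothetical names). It prints the median data-to-model ratio at `p0` and then fits with reference scales chosen so that the parameters are of order 1.

```python
import numpy as np
from scipy.optimize import curve_fit


def lorentzian(f, D, fc):
    """Plain Lorentzian PSD in physical units (simplified, no aliasing or filter)."""
    return (D / (2 * np.pi**2)) / (fc**2 + f**2)


# Synthetic data with 2 % multiplicative noise (an assumption, not test5.dat)
rng = np.random.default_rng(0)
f = np.linspace(10.0, 5000.0, 200)
D_true, fc_true = 0.3, 250.0
y = lorentzian(f, D_true, fc_true) * (1 + 0.02 * rng.standard_normal(f.size))
sigma = 0.02 * y

# Reference scales (hypothetical) so that the fitted parameters are of order 1
D_ref, fc_ref = 1.0, 300.0


def scaled_model(f, d, c):
    return lorentzian(f, d * D_ref, c * fc_ref)


p0 = [1.0, 1.0]

# Diagnostic: if this ratio is many orders of magnitude away from 1,
# the starting model and the data are not on a comparable scale.
ratio = np.median(y / scaled_model(f, *p0))
print("median data/model ratio at p0:", ratio)

popt, pcov = curve_fit(scaled_model, f, y, p0=p0, sigma=sigma,
                       absolute_sigma=True, bounds=(0.1, 10))
D_fit, fc_fit = popt[0] * D_ref, popt[1] * fc_ref
print("D =", D_fit, "fc =", fc_fit)
```

If the printed ratio is far from 1 for the real data, adjusting the reference scales or the units of the data before fitting would be a natural next step.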