I have a legacy 32-bit Windows Forms application that I launch as several instances in order to process thousands of records "in parallel".

When I run the application manually (Session 1) with, for example, 8 instances, each instance uses about 200 MB of memory on average. That way I don't run into out-of-memory problems, because no single instance risks exceeding 1,300 to 1,600 MB and crashing.

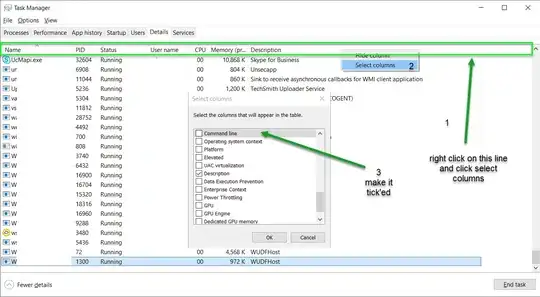

However, when I configure the Windows Task Scheduler to run this application through a service (Session 0), the memory of all instances appears to be counted together for some reason, and when the total reaches approximately 1,300 MB, the application crashes with an out-of-memory error.

Why does memory usage behave differently between the two sessions?

In summary:

"You have a 32-bit winforms app ("A.exe") that somehow spawns N child processes (B1, B2, etc...). None of the child processes (Bnn.exe) can exceed 1300MB. When you start A.exe manually (double-clicking), all of the children are OK. When you start A.exe from Windows task manager, all the memory in all the children are counted as belonging to "A.exe"." by @paulsm4

EDIT:

Syntax I used in Windows Task Scheduler to invoke:

Code in A.exe that spawns a child process:

    Call Shell("C:\Test\A.exe login;pass", vbNormalFocus)