The goal is to understand what should be tuned so that the Java process stops being restarted.

We have a Java Spring Boot backend application running Hazelcast that restarts instead of garbage collecting.

Environment is:

Amazon Corretto 17.0.3

The only memory tuning parameters supplied are:

`-XX:+UseContainerSupport -XX:MaxRAMPercentage=80.0`
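To confirm that these flags actually reach the JVM inside the container, the running process can report the values it is using. Below is a minimal sketch using the JDK's `com.sun.management.HotSpotDiagnosticMXBean`; the class name `VmFlags` is illustrative only. `UseContainerSupport` is a Linux-only flag, so the helper returns `null` on platforms where it is not defined.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;

public class VmFlags {
    /** Effective value of a HotSpot flag in this JVM, or null if the flag does not exist here. */
    static VMOption option(String name) {
        HotSpotDiagnosticMXBean hs =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        try {
            return hs.getVMOption(name);
        } catch (IllegalArgumentException e) {
            return null; // e.g. UseContainerSupport is only defined on Linux
        }
    }

    public static void main(String[] args) {
        for (String name : new String[] {"UseContainerSupport", "MaxRAMPercentage"}) {
            VMOption o = option(name);
            // getOrigin() shows where the value came from (DEFAULT, VM_CREATION, ENVIRON_VAR, ...).
            System.out.println(name + " = "
                    + (o == null ? "n/a" : o.getValue() + " (" + o.getOrigin() + ")"));
        }
        // Arguments the JVM reports it was started with.
        System.out.println("JVM args = " + ManagementFactory.getRuntimeMXBean().getInputArguments());
    }
}
```

If `MaxRAMPercentage` shows origin `DEFAULT` with a value other than 80, the flag is not being passed to the process.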

The memory limit in Kubernetes is 2Gi, so with `MaxRAMPercentage=80.0` the maximum Java heap is about 1.6Gi (the container itself still gets 2Gi).
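The 2Gi limit applies to the whole JVM process, not only to the heap. A quick calculation of the split follows as a sketch; `HeapBudget` is a hypothetical helper, and because the JVM aligns the heap size, `Runtime.maxMemory()` may differ slightly from the computed value. 80% of 2048 MiB is about 1638 MiB of heap, which leaves roughly 409 MiB for metaspace, thread stacks, the code cache, GC data structures, direct buffers and any other native allocations. If those together push the process over the limit, the container is killed even though the heap is not full, so the GC has no reason to run.

```java
public class HeapBudget {
    static final long MIB = 1024L * 1024L;

    /** Heap ceiling implied by -XX:MaxRAMPercentage for a container limit (ignores JVM alignment). */
    static long maxHeapBytes(long containerLimitBytes, double maxRamPercentage) {
        return (long) (containerLimitBytes * maxRamPercentage / 100.0);
    }

    /** Memory left in the container for everything that is not Java heap. */
    static long nonHeapBudgetBytes(long containerLimitBytes, double maxRamPercentage) {
        return containerLimitBytes - maxHeapBytes(containerLimitBytes, maxRamPercentage);
    }

    public static void main(String[] args) {
        long limit = 2048 * MIB; // Kubernetes limit of 2Gi
        double pct = 80.0;       // -XX:MaxRAMPercentage=80.0
        System.out.printf("heap ceiling   : %d MiB%n", maxHeapBytes(limit, pct) / MIB);
        System.out.printf("everything else: %d MiB%n", nonHeapBudgetBytes(limit, pct) / MIB);
        // What the running JVM actually chose (may differ slightly due to alignment):
        System.out.printf("Runtime.maxMemory(): %d MiB%n", Runtime.getRuntime().maxMemory() / MIB);
    }
}
```

Running `main` prints a heap ceiling of 1638 MiB and 409 MiB for everything else; the last line depends on the JVM it runs in.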

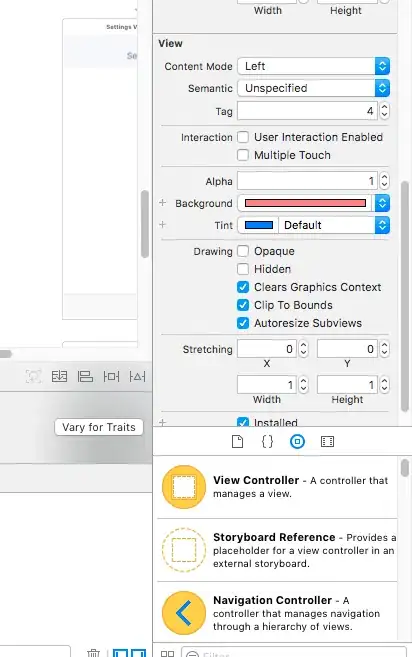

Graphs of memory usage:

The large drop towards the end is where I took a heap dump. Taking the dump led to a drastic decrease in memory usage (due to a full GC?).
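If the dump was taken with a tool that writes only live objects (for example `jcmd <pid> GC.heap_dump` without `-all`), the JVM has to determine reachability first, and HotSpot runs a full collection before writing the file. The sketch below reproduces that programmatically with `HotSpotDiagnosticMXBean.dumpHeap(file, true)` and compares used heap before and after; `LiveDump` and the temp-file location are illustrative assumptions, and the exact numbers will vary from run to run.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

public class LiveDump {
    static final long MIB = 1024L * 1024L;

    static long usedHeapMiB() {
        return ManagementFactory.getMemoryMXBean().getHeapMemoryUsage().getUsed() / MIB;
    }

    /** Writes a dump of live objects only; HotSpot collects garbage first to find them. */
    static Path dumpLive(Path dir) throws IOException {
        // The target file must not exist yet and must end in ".hprof".
        Path file = dir.resolve("live-" + System.nanoTime() + ".hprof");
        HotSpotDiagnosticMXBean hs =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        hs.dumpHeap(file.toString(), true); // live = true
        return file;
    }

    public static void main(String[] args) throws IOException {
        // Allocate short-lived garbage so the effect of the collection is visible.
        for (int i = 0; i < 1_000; i++) {
            byte[] junk = new byte[100_000];
        }
        long before = usedHeapMiB();
        Path file = dumpLive(Files.createTempDirectory("heapdump"));
        long after = usedHeapMiB();
        System.out.printf("used heap before: %d MiB, after live dump: %d MiB%n", before, after);
        Files.deleteIfExists(file);
    }
}
```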

The GC appears to be working against me here. If the heap dump is not taken, the container hits what appears to be the memory limit, Kubernetes restarts it, and the cycle repeats. Are there tuning parameters I have missed, or is this a clear memory leak (perhaps caused by Hazelcast metrics, see https://github.com/hazelcast/hazelcast/issues/16672)?
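To tell whether the growth is on the Java heap (where the GC can help) or outside it (where it cannot), the JVM's own memory MXBeans give a first breakdown. This is a sketch with the hypothetical class name `MemoryBreakdown`; it does not see native memory allocated outside the JVM's accounting. For a fuller picture, starting the JVM with `-XX:NativeMemoryTracking=summary` and running `jcmd <pid> VM.native_memory summary` reports native memory by category.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

public class MemoryBreakdown {
    static final long MIB = 1024L * 1024L;

    static String fmt(MemoryUsage u) {
        return String.format("used=%d MiB committed=%d MiB", u.getUsed() / MIB, u.getCommitted() / MIB);
    }

    /** Sum of committed bytes across non-heap pools (metaspace, code cache, ...). */
    static long nonHeapCommittedBytes() {
        long total = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage u = pool.getUsage(); // null if the pool is no longer valid
            if (pool.getType() == MemoryType.NON_HEAP && u != null) {
                total += u.getCommitted();
            }
        }
        return total;
    }

    public static void main(String[] args) {
        MemoryMXBean mem = ManagementFactory.getMemoryMXBean();
        System.out.println("heap     : " + fmt(mem.getHeapMemoryUsage()));
        System.out.println("non-heap : " + fmt(mem.getNonHeapMemoryUsage()));
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage u = pool.getUsage();
            if (u != null) {
                System.out.println("  pool " + pool.getName() + " (" + pool.getType() + "): " + fmt(u));
            }
        }
        // Direct and mapped NIO buffers live outside the heap and are not in the pools above.
        for (BufferPoolMXBean bp : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            System.out.printf("  buffer %s: count=%d used=%d MiB%n",
                    bp.getName(), bp.getCount(), bp.getMemoryUsed() / MIB);
        }
        // Each thread also has its own stack outside the heap.
        System.out.println("threads  : " + ManagementFactory.getThreadMXBean().getThreadCount());
    }
}
```

Sampling this periodically (for example from a scheduled task or an actuator endpoint) and comparing it with the container's memory graph shows whether the heap or the non-heap part keeps climbing.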