I am working on a reinforcement learning problem in Stable-Baselines3 (SB3), which is built on PyTorch, though I don't think the library really matters for this question.

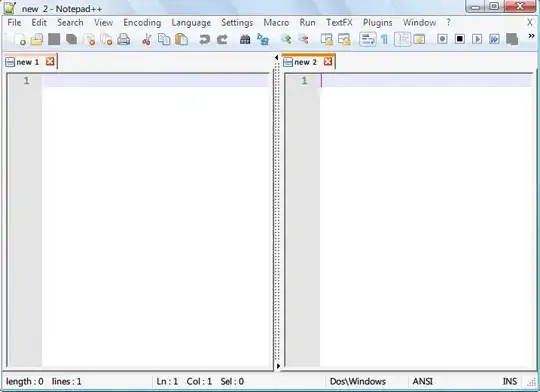

I have 101 input features, and even though I designed a neural architecture with the first layer having only 64 nodes, the network still works. Below is a screenshot of my model architecture:

I am concerned because I thought the first layer of a neural network needed as many nodes as there are input features.

Does PyTorch include an input layer by default, and doesn't display it? If so, how can I know and control what the activation functions etc. are for the input layer?
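
To make the question concrete, here is a minimal sketch in plain PyTorch. It is not the SB3 internals: the `mlp` stack and the `n_features` name are assumptions for illustration only, sized to mirror the 101 features and the `[64, 64]` layers described here. It shows how one could check what input size the first `Linear` layer accepts by reading its `in_features` attribute and its weight shape.

```python
import torch as th
from torch import nn

n_features = 101  # number of input features mentioned in the question

# Hypothetical stand-in for one branch (pi or vf) of the policy network;
# this is NOT how SB3 builds it, only a plain-PyTorch illustration.
mlp = nn.Sequential(
    nn.Linear(n_features, 64),  # first layer: 101 inputs -> 64 units
    nn.LeakyReLU(),
    nn.Linear(64, 64),
    nn.LeakyReLU(),
)

first = mlp[0]
# in_features is the size of the input vector the layer accepts,
# out_features is the number of units ("nodes") in the layer.
print(first.in_features, first.out_features)
print(tuple(first.weight.shape))  # stored as (out_features, in_features)

# A batch of 5 observations with 101 features each passes straight through.
batch = th.zeros(5, n_features)
out = mlp(batch)
print(tuple(out.shape))
```

If the printed SB3 policy behaves the same way, the first `Linear` entry in its output should report an `in_features` value matching the observation size, which would mean the raw features feed that layer directly rather than passing through a separate hidden input layer.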

EDIT: Here are my imports and basic code, in response to Michael's comment.

    import gym
    from gym import Env
    import matplotlib
    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    from gym import spaces
    from gym.utils import seeding
    from stable_baselines3.common.vec_env import DummyVecEnv, SubprocVecEnv
    from stable_baselines3.common.utils import set_random_seed
    from stable_baselines3.common.evaluation import evaluate_policy
    from stable_baselines3.common.env_util import make_vec_env
    from stable_baselines3 import PPO
    import math
    import random
    import torch as th
    from sb3_contrib.common.maskable.policies import MaskableActorCriticPolicy
    from sb3_contrib.common.wrappers import ActionMasker
    from sb3_contrib.ppo_mask import MaskablePPO
    from sb3_contrib.common.envs import InvalidActionEnvDiscrete
    from sb3_contrib.common.maskable.evaluation import evaluate_policy
    from sb3_contrib.common.maskable.utils import get_action_masks

    env = MyCustomEnv(....)
    env = ActionMasker(env, mask_fn)  # Wrap to enable masking

    # Defining custom neural network architecture
    mynetwork = dict(activation_fn=th.nn.LeakyReLU,
                     net_arch=[dict(pi=[64, 64], vf=[64, 64])])

    # Maskable PPO behaves just like regular PPO
    model = MaskablePPO(MaskableActorCriticPolicy, env, verbose=1, learning_rate=0.0005,
                        gamma=0.975, seed=10, batch_size=256, clip_range=0.2,
                        tensorboard_log="./log1/", policy_kwargs=mynetwork)

    # To get the screenshot I gave
    print(model.policy)