I am new to Azure Databricks and Event Hubs. I have been struggling for days to stream data from Databricks to an event hub using Spark and the Kafka API. The data I want to stream is in a .csv file. The stream starts, but the dashboard that shows the input rate is blank. Here is a code snippet:

    from pyspark.sql import DataFrame

    def write_to_event_hub(df: DataFrame, topic: str, bootstrap_servers: str, config: str, checkpoint_path: str):
        print("Producing to event hub via Kafka")
        # Write the stream to the event hub through its Kafka-compatible endpoint
        (
            df.writeStream
            .format("kafka")
            .option("topic", topic)
            .option("kafka.bootstrap.servers", bootstrap_servers)
            .option("kafka.sasl.mechanism", "PLAIN")
            .option("kafka.security.protocol", "SASL_SSL")
            .option("kafka.sasl.jaas.config", config)
            .option("checkpointLocation", checkpoint_path)
            .start()
        )

    write_to_event_hub(streaming_df, topic, bootstrap_servers, sasl_jaas_config, "./checkpoint")

And here is the code used to generate the streaming data:

    streaming_df = (
        spark.readStream
        .option("header", "true")
        .schema(location_schema)
        .csv(f"{path}")
        .select("*")
    )

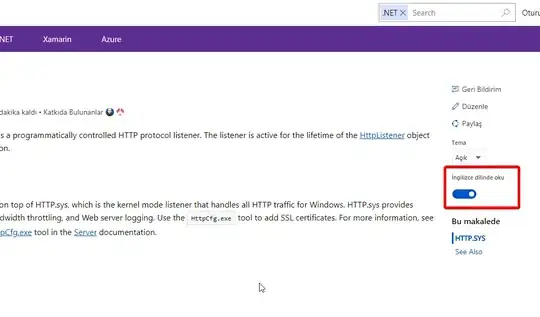

I have also attached a picture of the configuration (above). The topic is the name of the event hub, and the connection string has the format: `Endpoint=sb://XXXX.servicebus.windows.net/;SharedAccessKeyName=XXXX;SharedAccessKey=XXX=;EntityPath=XXXX`

I want to connect to a single event hub in the namespace. Something is probably wrong either with the way I read the data I want to stream or with the configuration. Any ideas?
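For reference, here is a minimal sketch of how the three configuration values described above could be derived from a connection string in that format. The helper names `parse_connection_string` and `build_kafka_settings` are hypothetical, not part of any library. It assumes the Event Hubs Kafka endpoint listens on port 9093, that the SASL username is the literal `$ConnectionString` with the whole connection string as the password, and that the Databricks runtime uses a shaded Kafka client (hence the `kafkashaded.` prefix). It only builds strings and does not touch Spark, so it says nothing about whether the DataFrame itself is in the shape the Kafka sink expects.

```python
def parse_connection_string(conn_str: str) -> dict:
    """Split 'Key=Value;Key=Value' pairs; a value may itself contain '='."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue
        # partition() splits on the first '=' only, so 'SharedAccessKey=XXX=' keeps its trailing '='
        key, _, value = segment.partition("=")
        parts[key] = value
    return parts


def build_kafka_settings(conn_str: str, shaded: bool = True) -> dict:
    """Derive bootstrap server, topic, and JAAS config (hypothetical helper)."""
    parts = parse_connection_string(conn_str)
    # Endpoint looks like sb://XXXX.servicebus.windows.net/
    host = parts["Endpoint"].replace("sb://", "").rstrip("/")
    # Assumption: Databricks ships a shaded Kafka client; use shaded=False elsewhere
    prefix = "kafkashaded." if shaded else ""
    jaas = (
        f"{prefix}org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="$ConnectionString" password="{conn_str}";'
    )
    return {
        "bootstrap_servers": f"{host}:9093",  # assumed Kafka endpoint port
        "topic": parts.get("EntityPath"),     # the event hub name
        "sasl_jaas_config": jaas,
    }
```

With these helpers, `build_kafka_settings(connection_string)` would return a dict whose `bootstrap_servers`, `topic`, and `sasl_jaas_config` entries correspond to the arguments passed to `write_to_event_hub`.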

Thank you!