In my OVH Managed Kubernetes cluster, I'm trying to expose a NodePort service, but the port is not reachable at `<node-ip>:<node-port>`.

I followed this tutorial: Creating a service for an application running in two pods. With `kubectl port-forward` I can successfully reach the service on `localhost:<target-port>`, and it also works from inside the cluster, but requests to `<node-ip>:<node-port>` time out.
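To make the symptom concrete, here is a minimal sketch of a TCP probe that can be run from a machine outside the cluster. It assumes Python 3 is available there; the function name `probe_tcp` is my own and not part of any Kubernetes tooling. The point is to tell a timeout (packets are probably being dropped somewhere on the way, for example by a firewall) apart from a refused connection (the node is reachable but nothing is listening on that port). That interpretation is a rule of thumb, not a definitive diagnosis.

```python
import socket


def probe_tcp(host: str, port: int, timeout: float = 3.0) -> str:
    """Attempt one TCP connection and classify the outcome.

    Returns "open", "refused", "timeout", or "error: <details>".
    """
    try:
        # create_connection performs the TCP handshake; the socket is
        # closed again as soon as the with-block exits.
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        # The host answered with a reset: reachable, but no listener.
        return "refused"
    except socket.timeout:
        # No answer within `timeout` seconds.
        return "timeout"
    except OSError as exc:
        # Anything else (unreachable network, DNS failure, ...).
        return f"error: {exc}"
```

For example, `print(probe_tcp("203.0.113.10", 30080))` would probe a node, where both the address and the port are placeholders to be replaced with the real `<node-ip>` and `<node-port>`. In my case this kind of check ends in a timeout rather than a refusal.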

The tutorial says that I may have to "create a firewall rule that allows TCP traffic on your node port", but I can't figure out how to do that.

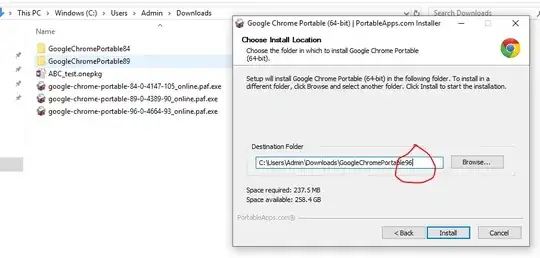

The security group seems to allow any traffic: