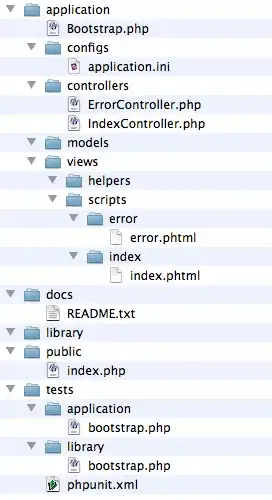

I have a schema registered in the Kafka Schema Registry, and I need every message I post to this topic to be checked against that schema, so that only messages following the same structure are accepted.

The curl command I used to register the schema:

```shell
curl -X POST \
  -H "Content-Type: application/vnd.schemaregistry.v1+json" \
  --data '{"schema": "{\"type\":\"record\",\"name\":\"Operacao\",\"namespace\":\"data.brado.operacao\",\"fields\":[{\"name\":\"id_operacao\",\"type\":\"string\"},{\"name\":\"tipo_container\",\"type\":\"string\"}, {\"name\":\"descricao_operacao\",\"type\":\"string\"},{\"name\":\"entrega\",\"type\":\"string\"},{\"name\":\"coleta\",\"type\":\"string\"},{\"name\":\"descricao_checklist\",\"type\":\"string\"},{\"name\":\"cheio\",\"type\":\"string\"},{\"name\":\"ativo\",\"type\":\"string\"},{\"name\":\"tipo_operacao\",\"type\":\"string\"} ]}"}' \
  http://localhost:38081/subjects/teste/versions
```
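The `schema` field in that request body is itself a JSON string, which is why every quote has to be escaped by hand. A minimal sketch (Python standard library only) that builds the same registration request and lets `json.dumps` do the escaping; the URL, subject `teste`, and field list are taken from the curl command, while the names `SCHEMA` and `build_register_request` are illustrative. The request is only constructed here, not sent.

```python
import json
import urllib.request

REGISTRY_URL = "http://localhost:38081"
SUBJECT = "teste"

# Same Avro record as in the curl command: nine fields, all of type string.
FIELD_NAMES = [
    "id_operacao", "tipo_container", "descricao_operacao", "entrega",
    "coleta", "descricao_checklist", "cheio", "ativo", "tipo_operacao",
]
SCHEMA = {
    "type": "record",
    "name": "Operacao",
    "namespace": "data.brado.operacao",
    "fields": [{"name": n, "type": "string"} for n in FIELD_NAMES],
}


def build_register_request(schema: dict) -> urllib.request.Request:
    # The registry expects {"schema": "<schema serialized as a JSON string>"},
    # so the schema is encoded once on its own and again inside the body.
    body = json.dumps({"schema": json.dumps(schema)}).encode("utf-8")
    return urllib.request.Request(
        f"{REGISTRY_URL}/subjects/{SUBJECT}/versions",
        data=body,
        headers={"Content-Type": "application/vnd.schemaregistry.v1+json"},
        method="POST",
    )


req = build_register_request(SCHEMA)
# urllib.request.urlopen(req) would actually send it to the registry.
```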

What I need is for the topic to reject a message that does not follow this schema.

In this case I expected an error, because the message I'm sending does not match the registered schema.

The message should only be accepted when it does match the schema.
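As far as I know, a plain Apache Kafka broker does not consult Schema Registry on its own, so the check usually has to happen on the producer side before the message is sent (Confluent's Avro serializer, for example, fails when a record cannot be serialized with the schema). A minimal sketch of that idea in plain Python, without the real Avro library: it only handles flat records with primitive fields like the `Operacao` schema above, and `validate_record`, `send_if_valid`, and the `produce` callback are hypothetical names, where `produce` stands in for whatever actually writes to the topic.

```python
import json

# Same Avro record as the one registered under subject "teste".
FIELD_NAMES = [
    "id_operacao", "tipo_container", "descricao_operacao", "entrega",
    "coleta", "descricao_checklist", "cheio", "ativo", "tipo_operacao",
]
SCHEMA = {
    "type": "record",
    "name": "Operacao",
    "namespace": "data.brado.operacao",
    "fields": [{"name": n, "type": "string"} for n in FIELD_NAMES],
}

# Avro primitive types this sketch understands, mapped to Python types.
_PRIMITIVES = {"string": str, "boolean": bool}


def validate_record(record: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the record matches.

    Unions, nested records, defaults and logical types are not handled.
    """
    errors = []
    expected = {f["name"]: f["type"] for f in schema["fields"]}
    for name, avro_type in expected.items():
        py_type = _PRIMITIVES.get(avro_type)
        if py_type is None:
            errors.append(f"{name}: type {avro_type!r} not supported here")
        elif name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], py_type):
            errors.append(f"field {name} should be {avro_type}")
    for name in record:
        if name not in expected:
            errors.append(f"unexpected field: {name}")
    return errors


def send_if_valid(record: dict, produce) -> None:
    # Refuse to send anything that does not match the schema.
    problems = validate_record(record, SCHEMA)
    if problems:
        raise ValueError("; ".join(problems))
    produce(json.dumps(record))
```

With this in place, a message missing `ativo` or carrying an extra field raises `ValueError` and never reaches the topic, while a message with all nine string fields is passed on to `produce`.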

Can anyone help me with how to do this check against the schema? I've searched everywhere I could and haven't found an answer.