I have a Kafka-to-BigQuery Dataflow pipeline that consumes from multiple topics and uses dynamic destinations to write each topic's messages to the appropriate BigQuery table.

The pipeline runs smoothly when only one worker is involved.

However, when it autoscales, or is manually configured to use more than one worker, it completely stalls at the BigQuery write transform and data staleness keeps growing. Data staleness grows regardless of how much data is streaming from Kafka, and no rows are inserted into BigQuery once this happens.

No errors are reported to the job or worker logs.

Specifically, inside the BigQuery write transform, it stalls during a Reshuffle that runs before the write.

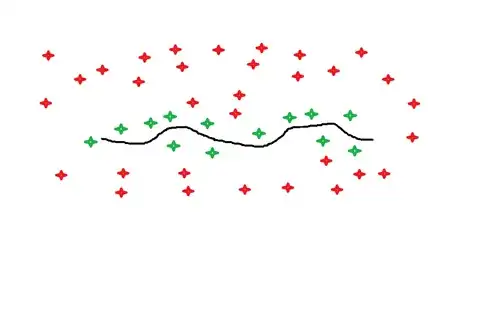

Within the Reshuffle that runs as part of the BigQuery write transform, the pipeline stalls at a GroupByKey step.

I can see that the Window.into step preceding that GroupByKey is working fine.

However, the problem is not isolated to the BigQuery write transform. If I add a Reshuffle step elsewhere in the pipeline, for example after the "Extract from Kafka record" step, the pipeline stalls in this new Reshuffle step at the same GroupByKey.
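One way a single-worker job differs from a multi-worker one at a GroupByKey: a Reshuffle groups elements by key (the standalone `Reshuffle.viaRandomKey()` assigns random keys first), so with several workers most elements must be sent to a different worker than the one that read them, while with one worker nothing ever leaves the worker. The plain-Java simulation below is only a conceptual sketch, not Beam's or Dataflow's implementation; the class name `ReshuffleSketch`, round-robin reading, and `key mod numWorkers` ownership are simplifying assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

public class ReshuffleSketch {

    /** Where an element was read, and which worker owns its shuffle key. */
    static final class Routing {
        final int sourceWorker;
        final int ownerWorker;

        Routing(int sourceWorker, int ownerWorker) {
            this.sourceWorker = sourceWorker;
            this.ownerWorker = ownerWorker;
        }
    }

    // Simulates "assign a random key, then group by key".
    // Assumptions: elements are read round-robin across workers, and each key
    // is owned by exactly one worker (here: key mod numWorkers).
    static List<Routing> route(int numElements, int numWorkers, long seed) {
        Random random = new Random(seed);
        List<Routing> routings = new ArrayList<>();
        for (int i = 0; i < numElements; i++) {
            int sourceWorker = i % numWorkers;
            int key = random.nextInt();
            // floorMod keeps the index non-negative for negative keys.
            int ownerWorker = Math.floorMod(key, numWorkers);
            routings.add(new Routing(sourceWorker, ownerWorker));
        }
        return routings;
    }

    // Elements whose key is owned by another worker must be transferred to it
    // before the grouping step can emit them.
    static long crossWorkerTransfers(List<Routing> routings) {
        return routings.stream().filter(r -> r.sourceWorker != r.ownerWorker).count();
    }

    public static void main(String[] args) {
        for (int workers = 1; workers <= 3; workers++) {
            long transfers = crossWorkerTransfers(route(1_000, workers, 42L));
            System.out.println(workers + " worker(s): " + transfers + " of 1000 elements change worker");
        }
    }
}
```

With one worker the transfer count is always zero, because every key is owned by the only worker.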

I am using Beam SDK version 2.39.0.

The pipeline was designed following the example of the Kafka-to-BigQuery template found here. Below is an overview of the pipeline code. I have also tried it with fixed windowing.

```java
PCollection<MessageData> messageData = pipeline
        .apply("Read from Kafka",
                KafkaReadTransform.read(
                        options.getBootstrapServers(),
                        options.getInputTopics(),
                        kafkaProperties))
        .apply("Extract from Kafka record",
                ParDo.of(new KafkaRecordToMessageDataFn()));

/*
 * Step 2: Validate Protobuf messages and convert to FailsafeElement
 */
PCollectionTuple failsafe = messageData
        .apply("Parse and convert to Failsafe", ParDo.of(new MessageDataToFailsafeFn())
                .withOutputTags(Tags.FAILSAFE_OUT, TupleTagList.of(Tags.FAILSAFE_DEADLETTER_OUT)));

/*
 * Step 3: Write messages to BigQuery
 */
WriteResult writeResult = failsafe.get(Tags.FAILSAFE_OUT)
        .apply("Write to BigQuery", new BigQueryWriteTransform(project, dataset, tablePrefix));

/*
 * Step 4: Write errors to BigQuery deadletter table
 */
failsafe.get(Tags.FAILSAFE_DEADLETTER_OUT)
        .apply("Write failsafe errors to BigQuery",
                new BigQueryDeadletterWriteTransform(project, dataset, tablePrefix));

writeResult.getFailedInsertsWithErr()
        .apply("Extract BigQuery insertion errors", ParDo.of(new InsertErrorsToFailsafeRecordFn()))
        .apply("Write BigQuery insertion errors",
                new BigQueryDeadletterWriteTransform(project, dataset, tablePrefix));
```

BigQueryWriteTransform, where the pipeline is stalling:

```java
BigQueryIO.<FailsafeElement<MessageData, ValidatedMessageData>>write()
        .to(new MessageDynamicDestinations(project, dataset, tablePrefix))
        .withFormatFunction(TableRowMapper::toTableRow)
        .withCreateDisposition(BigQueryIO.Write.CreateDisposition.CREATE_NEVER)
        .withWriteDisposition(BigQueryIO.Write.WriteDisposition.WRITE_APPEND)
        .withFailedInsertRetryPolicy(InsertRetryPolicy.neverRetry())
        .withExtendedErrorInfo()
        .optimizedWrites();
```
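`MessageDynamicDestinations` isn't shown here; its job is to derive each element's destination table from the element itself (presumably from its source topic). A hedged plain-Java sketch of such a mapping follows, assuming a hypothetical `<tablePrefix>_<topic>` naming scheme that may differ from what the real class does. The class and method names are illustrative only.

```java
public class TopicTableNaming {

    // Hypothetical scheme: "<tablePrefix>_<topic>", with every character that is
    // not a letter, digit or underscore replaced by '_' (Kafka topic names may
    // contain '.' and '-').
    static String tableNameForTopic(String tablePrefix, String topic) {
        String sanitizedTopic = topic.replaceAll("[^A-Za-z0-9_]", "_");
        return tablePrefix + "_" + sanitizedTopic;
    }

    // Table spec in the "project:dataset.table" form that BigQueryIO accepts.
    static String tableSpec(String project, String dataset, String tablePrefix, String topic) {
        return project + ":" + dataset + "." + tableNameForTopic(tablePrefix, topic);
    }

    public static void main(String[] args) {
        System.out.println(tableSpec("my-project", "kafka_data", "raw", "orders.v1-eu"));
    }
}
```

Mapping `.` and `-` to `_` is just one way to keep the generated table names simple and predictable.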

Formatting function:

```java
public static TableRow toTableRow(FailsafeElement<MessageData, ValidatedMessageData> failsafeElement) {
    try {
        ValidatedMessageData messageData = Objects.requireNonNull(failsafeElement.getOutputPayload());
        byte[] data = messageData.getData();
        String messageType = messageData.getMessageType();
        long timestamp = messageData.getKafkaTimestamp();
        return TABLE_ROW_CONVERTER_MAP.get(messageType).toTableRow(data, timestamp);
    } catch (Exception e) {
        log.error("Error occurred when converting message to table row.", e);
        return null;
    }
}
```

KafkaReadTransform looks like this:

```java
KafkaIO.<String, byte[]>read()
        .withBootstrapServers(bootstrapServer)
        .withTopics(inputTopics)
        .withKeyDeserializer(StringDeserializer.class)
        .withValueDeserializer(ByteArrayDeserializer.class)
        .withConsumerConfigUpdates(kafkaProperties);
```

I have also tried this with fixed windowing applied after the message data is extracted from the Kafka record, even though the template does not appear to use windowing:

```java
PCollection<MessageData> messageData = pipeline
        .apply("Read from Kafka",
                KafkaReadTransform.read(
                        options.getBootstrapServers(),
                        options.getInputTopics(),
                        kafkaProperties))
        .apply("Extract from Kafka record",
                ParDo.of(new KafkaRecordToMessageDataFn()))
        .apply("Windowing", Window.<MessageData>into(FixedWindows.of(WINDOW_DURATION))
                .withAllowedLateness(LATENESS_COMPENSATION_DURATION)
                .discardingFiredPanes());
```
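For reference, `FixedWindows.of(size)` with its default zero offset places each element in the window `[start, start + size)`, where `start` is the element's timestamp rounded down to a multiple of the window size. A plain-Java sketch of that arithmetic follows; the one-minute window size is a hypothetical stand-in, since the actual `WINDOW_DURATION` value isn't given above.

```java
public class FixedWindowSketch {

    /** Returns {start, end} in epoch millis; the window covers [start, end). */
    static long[] assignFixedWindow(long timestampMillis, long sizeMillis) {
        // Round down to the nearest multiple of the window size (zero offset).
        // floorMod also handles timestamps before the epoch correctly.
        long start = timestampMillis - Math.floorMod(timestampMillis, sizeMillis);
        return new long[] {start, start + sizeMillis};
    }

    public static void main(String[] args) {
        long size = 60_000L; // hypothetical WINDOW_DURATION of one minute
        long[] window = assignFixedWindow(125_000L, size);
        System.out.println("[" + window[0] + ", " + window[1] + ")");
    }
}
```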

I'm out of ideas and don't know Dataflow well enough to diagnose this problem further.