I have two functional Keras models at the same level (same input and output shape), one of which is pretrained. I would like to combine them horizontally and then retrain the whole model, initializing the pretrained model with its saved weights and the other one randomly. How can I combine them horizontally as parallel branches whose outputs are added (not concatenated)?

```python
import tensorflow as tf
from tensorflow.keras import Input, Model
from tensorflow.keras.layers import Add, Conv1D, Dense, Lambda, ReLU


def define_model_a(input_shape, initializer, outputs=1):
    input_layer = Input(shape=input_shape)

    # first path
    path10 = input_layer
    path11 = Conv1D(filters=1, kernel_size=3, strides=1, padding="same",
                    use_bias=True, kernel_initializer=initializer)(path10)
    path12 = Lambda(lambda x: tf.abs(x))(path11)

    # residual connection: input + |conv(input)|
    output = Add()([path10, path12])

    return Model(inputs=input_layer, outputs=output, name="model_a")
```

```python
def define_model_b(input_shape, initializer, outputs=1):
    input_layer = Input(shape=input_shape)

    # first path
    path10 = input_layer
    path11 = Conv1D(filters=1, kernel_size=3, strides=1, padding="same",
                    use_bias=True, kernel_initializer=initializer)(path10)
    path12 = ReLU()(path11)
    path13 = Dense(1, use_bias=True)(path12)

    output = path13

    return Model(inputs=input_layer, outputs=output, name="model_b")
```
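Because the branches will be summed element-wise, both models must return the same output shape. A minimal sketch to check this, assuming an input of 100 time steps with 1 channel (the `(100, 1)` shape is an assumption; the question does not give `input_shape`). `make_model_a` and `make_model_b` are hypothetical, simplified stand-ins for `define_model_a` and `define_model_b` that use the `"he_normal"` string initializer to keep the sketch short:

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import Input, Model
from tensorflow.keras.layers import Add, Conv1D, Dense, Lambda, ReLU

INPUT_SHAPE = (100, 1)  # assumption: 100 time steps, 1 channel


def make_model_a(input_shape, name="model_a"):
    """Simplified stand-in for define_model_a: x + |conv(x)|."""
    inp = Input(shape=input_shape)
    h = Conv1D(1, 3, padding="same", kernel_initializer="he_normal")(inp)
    h = Lambda(tf.abs)(h)
    return Model(inp, Add()([inp, h]), name=name)


def make_model_b(input_shape, name="model_b"):
    """Simplified stand-in for define_model_b: Dense(ReLU(conv(x)))."""
    inp = Input(shape=input_shape)
    h = Conv1D(1, 3, padding="same", kernel_initializer="he_normal")(inp)
    h = ReLU()(h)
    return Model(inp, Dense(1)(h), name=name)  # Dense acts on the last axis


model_a = make_model_a(INPUT_SHAPE)
model_b = make_model_b(INPUT_SHAPE)

# Push a small random batch through both models and compare the output shapes.
x = np.random.rand(2, *INPUT_SHAPE).astype("float32")
shape_a = tuple(model_a(x).shape)
shape_b = tuple(model_b(x).shape)
print(shape_a, shape_b)  # (2, 100, 1) (2, 100, 1)
```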

```python
def define_merge_interpretation():
    # ????
    # ????

    output = Add()(model_a, model_b)

    model = Model(inputs=input_layer, outputs=output)

    return model
```
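One common way to fill in the `????` part is to treat each sub-model as a layer: create one shared `Input`, call both models on it, and sum their output tensors with `Add()([...])`, which takes a list of tensors rather than models. Calling a Keras `Model` on a tensor reuses that model's layers, so any weights already loaded into `model_a` stay in place. A sketch under the same `(100, 1)` input assumption; `build_merged_model` is a hypothetical helper name, and the model stand-ins are simplified versions of the question's functions:

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import Input, Model
from tensorflow.keras.layers import Add, Conv1D, Dense, Lambda, ReLU

INPUT_SHAPE = (100, 1)  # assumption: 100 time steps, 1 channel


def make_model_a(input_shape, name="model_a"):
    inp = Input(shape=input_shape)
    h = Conv1D(1, 3, padding="same", kernel_initializer="he_normal")(inp)
    return Model(inp, Add()([inp, Lambda(tf.abs)(h)]), name=name)


def make_model_b(input_shape, name="model_b"):
    inp = Input(shape=input_shape)
    h = Conv1D(1, 3, padding="same", kernel_initializer="he_normal")(inp)
    return Model(inp, Dense(1)(ReLU()(h)), name=name)


def build_merged_model(model_a, model_b, input_shape):
    """Hypothetical helper: run both sub-models on one shared input and sum them."""
    inp = Input(shape=input_shape)
    out_a = model_a(inp)         # branch 1: reuses model_a's layers and weights
    out_b = model_b(inp)         # branch 2: reuses model_b's layers and weights
    out = Add()([out_a, out_b])  # element-wise sum of the two branch outputs
    return Model(inputs=inp, outputs=out, name="merge_interpretation")


model_a = make_model_a(INPUT_SHAPE)
model_b = make_model_b(INPUT_SHAPE)
merged = build_merged_model(model_a, model_b, INPUT_SHAPE)
merged.summary()

# The merged output equals the sum of the two branch outputs.
x = np.random.rand(2, *INPUT_SHAPE).astype("float32")
is_sum = np.allclose(merged(x), model_a(x) + model_b(x))
print(is_sum)  # True
```

Because the sub-models are nested as single layers, `merged.summary()` lists `model_a` and `model_b` as two branches feeding one `Add` layer.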

```python
initializer = tf.keras.initializers.HeNormal()

model_a = define_model_a(input_shape, initializer, outputs=1)
model_b = define_model_b(input_shape, initializer, outputs=1)

# model_a is the pretrained one; model_b keeps its random initialization
model_a.load_weights(load_path)

merge_interpretation = define_merge_interpretation()

history = merge_interpretation.fit(......
```
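To confirm that loading weights into `model_a` before merging does what is intended, the sketch below checks that the merged model starts from the loaded weights and that `fit` then updates that branch as well. It is a minimal sketch under stated assumptions: the checkpoint is simulated by copying weights from a second instance with `get_weights()`/`set_weights()` instead of `load_weights(load_path)`, the data is random and only demonstrates the call pattern, and `make_model_a`, `make_model_b` and `build_merged_model` are hypothetical helpers:

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import Input, Model
from tensorflow.keras.layers import Add, Conv1D, Dense, Lambda, ReLU

INPUT_SHAPE = (100, 1)  # assumption: 100 time steps, 1 channel
np.random.seed(0)
tf.random.set_seed(0)


def make_model_a(input_shape, name="model_a"):
    inp = Input(shape=input_shape)
    h = Conv1D(1, 3, padding="same", kernel_initializer="he_normal")(inp)
    return Model(inp, Add()([inp, Lambda(tf.abs)(h)]), name=name)


def make_model_b(input_shape, name="model_b"):
    inp = Input(shape=input_shape)
    h = Conv1D(1, 3, padding="same", kernel_initializer="he_normal")(inp)
    return Model(inp, Dense(1)(ReLU()(h)), name=name)


def build_merged_model(model_a, model_b, input_shape):
    inp = Input(shape=input_shape)
    return Model(inp, Add()([model_a(inp), model_b(inp)]), name="merge_interpretation")


def weights_equal(ws1, ws2):
    return all(np.array_equal(a, b) for a, b in zip(ws1, ws2))


# Simulated "pretrained" weights; in the real code: model_a.load_weights(load_path)
pretrained = make_model_a(INPUT_SHAPE, name="pretrained_a")
model_a = make_model_a(INPUT_SHAPE)
model_a.set_weights(pretrained.get_weights())
model_b = make_model_b(INPUT_SHAPE)  # keeps its random initialization

merged = build_merged_model(model_a, model_b, INPUT_SHAPE)

# Before training, the model_a branch inside the merged model holds the loaded weights.
loaded_ok = weights_equal(merged.get_layer("model_a").get_weights(), pretrained.get_weights())

# Random placeholder data; replace with the real training set.
x_train = np.random.rand(32, *INPUT_SHAPE).astype("float32")
y_train = np.random.rand(32, *INPUT_SHAPE).astype("float32")
merged.compile(optimizer="adam", loss="mse")
history = merged.fit(x_train, y_train, epochs=1, batch_size=8, verbose=0)

# All branches are trainable, so the pretrained branch is fine-tuned too.
fine_tuned = not weights_equal(model_a.get_weights(), pretrained.get_weights())
print(loaded_ok, fine_tuned)  # True True
```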

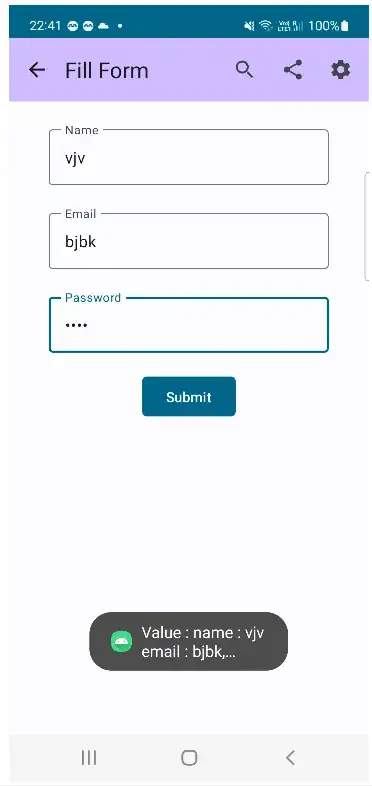

For reference, I am looking for a final structure like the one in the image, but with some of the branches pretrained.
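The same shared-input pattern extends to a structure with more than two branches: pass a list of sub-models (pretrained ones with their weights already loaded, new ones left randomly initialized) and sum all branch outputs with a single `Add`, which accepts a list of two or more same-shaped tensors. A sketch, assuming every branch maps the same input shape to the same output shape; `build_branched_model` and the branch names are hypothetical. Each branch needs a unique name, since Keras requires layer names to be unique within one model:

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import Input, Model
from tensorflow.keras.layers import Add, Conv1D, Dense, Lambda, ReLU

INPUT_SHAPE = (100, 1)  # assumption: 100 time steps, 1 channel


def make_model_a(input_shape, name="model_a"):
    inp = Input(shape=input_shape)
    h = Conv1D(1, 3, padding="same", kernel_initializer="he_normal")(inp)
    return Model(inp, Add()([inp, Lambda(tf.abs)(h)]), name=name)


def make_model_b(input_shape, name="model_b"):
    inp = Input(shape=input_shape)
    h = Conv1D(1, 3, padding="same", kernel_initializer="he_normal")(inp)
    return Model(inp, Dense(1)(ReLU()(h)), name=name)


def build_branched_model(branches, input_shape):
    """Hypothetical helper: sum the outputs of any number of parallel branches."""
    inp = Input(shape=input_shape)
    outputs = [branch(inp) for branch in branches]  # one tensor per branch
    return Model(inputs=inp, outputs=Add()(outputs), name="branched")


branch_a = make_model_a(INPUT_SHAPE, name="branch_a_pretrained")
# For the pretrained branch, load its weights here, e.g. branch_a.load_weights(load_path)
branches = [
    branch_a,
    make_model_b(INPUT_SHAPE, name="branch_b1"),  # randomly initialized
    make_model_b(INPUT_SHAPE, name="branch_b2"),  # randomly initialized
]
model = build_branched_model(branches, INPUT_SHAPE)

# The combined output is the sum of every branch's output.
x = np.random.rand(2, *INPUT_SHAPE).astype("float32")
matches = np.allclose(model(x), sum(branch(x) for branch in branches))
print(matches)  # True
```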