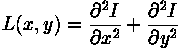

I am trying to find the features that are important for a class and contribute positively to it (i.e. red points, meaning high feature values, on the positive side of the SHAP summary plot).

I can get the SHAP values and plot the SHAP summary for each class (e.g. class 2 here) using the following code:

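```python
import shap

# clf: fitted tree-based multiclass classifier; X: feature DataFrame
explainer = shap.TreeExplainer(clf)
shap_values = explainer.shap_values(X)  # per-class SHAP values

# Summary (beeswarm) plot of the SHAP values for class 2
shap.summary_plot(shap_values[2], X)
```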
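One caveat about `shap_values[2]`: depending on the installed SHAP version, `TreeExplainer.shap_values` on a multiclass model returns either a list with one `(n_samples, n_features)` array per class or a single array shaped `(n_samples, n_features, n_classes)`. With the second layout, `shap_values[2]` selects the third *sample*, not class 2. The helper below is a minimal sketch, assuming only those two layouts; `class_shap_matrix` is a hypothetical name, not part of the SHAP API.

```python
import numpy as np

def class_shap_matrix(shap_values, class_index):
    """Return an (n_samples, n_features) SHAP matrix for one class.

    Hypothetical helper. Assumes shap_values is either a list with one
    array per class or one 3-D array shaped (n_samples, n_features, n_classes).
    """
    if isinstance(shap_values, list):
        # Older layout: one array per class
        return np.asarray(shap_values[class_index])
    arr = np.asarray(shap_values)
    if arr.ndim == 3:
        # Newer layout: classes on the last axis
        return arr[:, :, class_index]
    return arr  # 2-D: single-output model, nothing to select
```

Usage: `shap.summary_plot(class_shap_matrix(shap_values, 2), X)` draws the class-2 plot with either layout.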

From the plot I can tell which features are important for that class. In the plot shown, alcohol and sulphates are the main features (the ones I am most interested in).

However, I want to automate this so that the code ranks the features that matter on the positive side and returns the top N. Any ideas on how to automate this interpretation?
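A minimal sketch of one way to turn "red points on the positive side" into a number. The scoring rule is an assumption, one of several reasonable choices: for each feature, take the mean SHAP value over the samples where that feature is above its median (roughly the red points), keep features with a positive score, and return the top N. `top_positive_features` is a hypothetical helper, not a SHAP function; it expects a single class's `(n_samples, n_features)` SHAP matrix such as `shap_values[2]` from the code above.

```python
import numpy as np

def top_positive_features(shap_matrix, X, feature_names=None, top_n=5):
    """Rank features whose HIGH values push one class's output UP.

    Hypothetical helper. Score = mean SHAP value over samples where the
    feature is above its median (an assumed proxy for the "red points").
    """
    values = np.asarray(X, dtype=float)
    shap_matrix = np.asarray(shap_matrix, dtype=float)
    if feature_names is None:
        # Use DataFrame column names when available, else column indices
        feature_names = list(getattr(X, "columns", range(values.shape[1])))
    high = values > np.median(values, axis=0)  # True where a value counts as "high"
    scores = []
    for j, name in enumerate(feature_names):
        mask = high[:, j]
        # A constant feature has no "high" samples and gets a neutral score
        score = shap_matrix[mask, j].mean() if mask.any() else 0.0
        scores.append((name, float(score)))
    # Keep only positive contributors, strongest first
    positive = [s for s in scores if s[1] > 0]
    positive.sort(key=lambda s: s[1], reverse=True)
    return positive[:top_n]
```

Usage: `top_positive_features(shap_values[2], X, top_n=2)` returns `(feature, score)` pairs for class 2; looping over class indices gives the ranking for every class. Simpler alternatives are the mean of the positive SHAP values only, or the correlation between a feature's values and its SHAP values; which one matches "important on the positive side" is a judgment call.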

I need to identify these features automatically for each class. Suggestions for a method other than SHAP that can handle this are also welcome.
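As one non-SHAP option (a swapped-in technique, not what the SHAP plot computes), a multinomial logistic regression fitted on standardized features gives one coefficient per class and feature; a positive coefficient means higher values of that feature raise that class's score. This ranks features for a separate linear surrogate model, so it describes the data rather than `clf` itself and assumes roughly monotonic effects. `top_positive_coefficients` is a hypothetical helper name; this is a sketch, not a definitive method.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

def top_positive_coefficients(X, y, top_n=5, feature_names=None):
    """Per class, return up to top_n features with the largest positive coefficients."""
    values = np.asarray(X, dtype=float)
    if feature_names is None:
        feature_names = list(getattr(X, "columns", range(values.shape[1])))
    # Standardize so coefficient sizes are comparable across features
    scaled = StandardScaler().fit_transform(values)
    model = LogisticRegression(max_iter=1000).fit(scaled, y)
    classes, coef = model.classes_, model.coef_
    if coef.shape[0] == 1:  # binary case: the single row refers to classes_[1]
        classes = classes[1:]
    result = {}
    for cls, row in zip(classes, coef):
        order = np.argsort(row)[::-1]  # largest coefficient first
        result[cls] = [(feature_names[j], float(row[j]))
                       for j in order[:top_n] if row[j] > 0]
    return result
```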