It looks like roc_auc_score not only doesn't work with a non-numerical y_score, but perhaps refuses it for a good reason: using anything in a rounded, final-class numerical form (i.e. final class predictions such as 1, 2, 3, etc.) is not right either. Let's see why.

Documentation states:

Reading further, for your (binary) case:

So it looks like roc_auc_score expects only numerical values for y_score, accepting either probability estimates or non-thresholded decision values (decision function outputs, for when you can't get probability outputs) to calculate the area under the curve.
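A quick sketch of that, on synthetic data from make_classification (my own illustration, not taken from the docs): probabilities and decision values are both accepted and, for LogisticRegression, should give the same AUC, because predict_proba[:, 1] is a monotonic transform of decision_function and AUC only depends on how the scores rank the samples. A string y_score, on the other hand, gets rejected during input validation.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score

# Synthetic binary data, assumed here purely for illustration
X, y = make_classification(n_samples=300, random_state=0)
clf = LogisticRegression().fit(X, y)

# Both documented kinds of y_score: probability of class 1 and raw decision values
auc_proba = roc_auc_score(y, clf.predict_proba(X)[:, 1])
auc_decision = roc_auc_score(y, clf.decision_function(X))
print(auc_proba, auc_decision)  # expected to match: same ranking of samples

# Non-numerical scores are rejected by roc_auc_score's input checks
string_scores = np.where(clf.predict(X) == 1, "yes", "no")
try:
    roc_auc_score(y, string_scores)
    string_scores_rejected = False
except (ValueError, TypeError) as err:
    string_scores_rejected = True
    print("rejected:", err)
```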

And while it's not stated explicitly, one might even assume it should also handle final class predictions (in numerical form) as inputs, on top of the above.

(BUT!)

Reading about ROC AUC and visualizing it, you can see that the main idea of a ROC curve and the area under it (AUC) is to characterize the trade-off between the false positive rate and the true positive rate at ALL prediction thresholds. So supplying final classes might not be the best idea, since you would round away all of these details. Let's take a look:

```python
### do imports
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn import metrics

### get & fit data
X, y = load_breast_cancer(return_X_y=True)
clf = LogisticRegression(solver="liblinear").fit(X, y)

### get predictions in different ways
class_prediction = clf.predict(X)                     # final classes: 0 or 1
prob_prediction_class_1 = clf.predict_proba(X)[:, 1]  # probability of class 1
non_threshold_preds = clf.decision_function(X)        # raw decision values

### compute the AUC two ways for each kind of prediction
# (print() is used so this also works outside Jupyter/IPython)
for name, scores in [
    ("class predictions aucs", class_prediction),
    ("proba predictions aucs", prob_prediction_class_1),
    ("non thresholded preds aucs", non_threshold_preds),
]:
    print(name)
    print(f"roc_auc_score = {metrics.roc_auc_score(y, scores)}")
    false_positive_rate, true_positive_rate, thresholds = metrics.roc_curve(y, scores)
    print(f"metrics auc score = {metrics.auc(false_positive_rate, true_positive_rate)}")
    print("`" * 53)
```

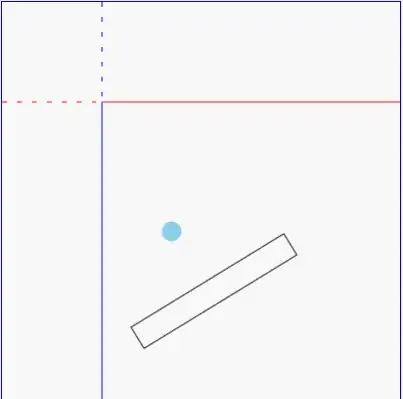

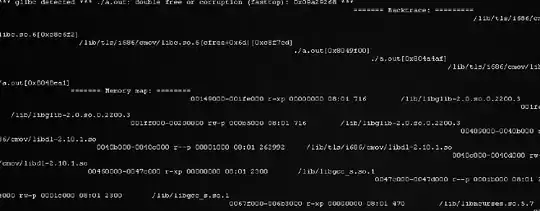

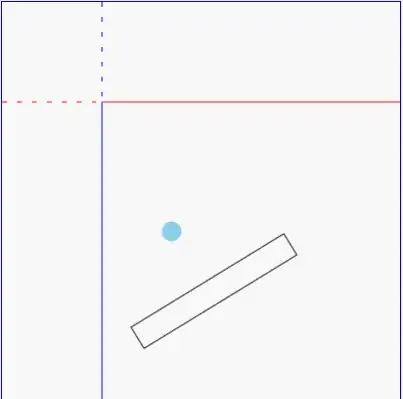

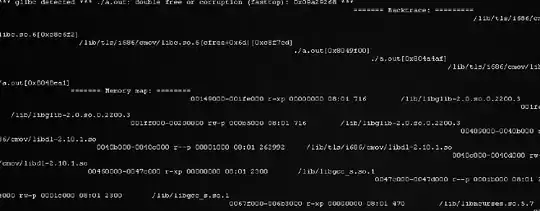

Outputs:

As you can see above, feeding in final class predictions, i.e. 0 or 1 (which the docs don't list as a proper input, perhaps because doing so effectively considers just one threshold of 0.5), rather than, say, the probability of class 1 (0.2, 0.9, etc.) gives you a different metric than when you use what the documentation says to use: probabilities OR non-thresholded decision values.
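Here is a small sketch of the "just one threshold" point, reusing the same breast cancer setup (the balanced accuracy comparison is my own addition, not something the docs mention): with hard labels, roc_curve has only one real operating point, and the area under that single-point curve works out to be the balanced accuracy, (TPR + TNR) / 2.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import balanced_accuracy_score, roc_auc_score, roc_curve

X, y = load_breast_cancer(return_X_y=True)
clf = LogisticRegression(solver="liblinear").fit(X, y)
class_prediction = clf.predict(X)                     # hard 0/1 labels
prob_prediction_class_1 = clf.predict_proba(X)[:, 1]  # probability of class 1

# Hard labels have only two distinct values, so the ROC "curve" is just
# (0, 0) -> (FPR, TPR) -> (1, 1): at most one interior point
_, _, thr_hard = roc_curve(y, class_prediction)
_, _, thr_proba = roc_curve(y, prob_prediction_class_1)
print(len(thr_hard), len(thr_proba))

# Trapezoidal area under that single-point curve:
# (1 + TPR - FPR) / 2 = (TPR + TNR) / 2, which is balanced accuracy
auc_hard = roc_auc_score(y, class_prediction)
bal_acc = balanced_accuracy_score(y, class_prediction)
print(auc_hard, bal_acc)
```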

PS

Interestingly enough, regarding my original comment under your question:

My original understanding (or old knowledge) was that one must encode labels before fitting a model, but quickly looking over the code of some sklearn models (LogisticRegression, for example; see line 1730) I actually see that it calls LabelEncoder for non-numerical values automatically.

So I guess I mistook a best practice for a requirement. However, I am not sure whether all models do this explicitly, nor whether any of them can output the original labels at .predict() (transforming back from the numerical to the text representation) in the same way that labels are encoded at .fit(), so you're better off just transforming everything to numerical values.
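For LogisticRegression at least, this is easy to check with a sketch like the one below (the text labels are just the breast cancer dataset's target names, attached by me for illustration). Fitting on strings works, predict() returns strings, and clf.classes_ holds the sorted labels. For the AUC, though, y_score still has to be numeric: the probability of clf.classes_[1].

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score

X, y = load_breast_cancer(return_X_y=True)
# Text versions of the labels (in this dataset 0 = malignant, 1 = benign)
y_text = np.where(y == 1, "benign", "malignant")

clf = LogisticRegression(solver="liblinear").fit(X, y_text)
print(clf.classes_)        # sorted labels: ['benign' 'malignant']
print(clf.predict(X[:5]))  # predictions come back as text labels

# predict_proba columns follow clf.classes_, so column 1 is P(classes_[1])
positive = clf.classes_[1]
y_true_binary = (y_text == positive).astype(int)
auc = roc_auc_score(y_true_binary, clf.predict_proba(X)[:, 1])
print(positive, auc)
```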

PPS

If you are checking the quality of your models, it is good to look at both ROC curves AND precision-recall curves, since when classes are imbalanced your ROC curve can look good while the precision-recall curve may not look good at all.
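A rough sketch of why, on synthetic data with roughly 1% positives (the dataset and model are assumptions for illustration only): the no-skill baseline for ROC AUC is 0.5 no matter how imbalanced the classes are, while the baseline for average precision (a summary of the precision-recall curve) is roughly the positive rate, so the two numbers can tell quite different stories.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import average_precision_score, precision_recall_curve, roc_auc_score
from sklearn.model_selection import train_test_split

# Heavily imbalanced synthetic data (about 1% positives, plus some label noise)
X, y = make_classification(n_samples=50000, weights=[0.99], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

scores = LogisticRegression().fit(X_train, y_train).predict_proba(X_test)[:, 1]
precision, recall, thresholds = precision_recall_curve(y_test, scores)

roc_auc = roc_auc_score(y_test, scores)
ap = average_precision_score(y_test, scores)
print("positive rate:", y_test.mean())
print("ROC AUC:", roc_auc)
print("average precision:", ap)

# No-skill baselines: random scores give ROC AUC near 0.5,
# but average precision near the (small) positive rate
rng = np.random.default_rng(0)
random_scores = rng.random(len(y_test))
random_auc = roc_auc_score(y_test, random_scores)
random_ap = average_precision_score(y_test, random_scores)
print("random ROC AUC:", random_auc)
print("random average precision:", random_ap)
```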