So I'm using this Kaggle dataset (https://www.kaggle.com/datasets/shuyangli94/food-com-recipes-and-user-interactions), and I need to convert the contents of the `ingredient_ids` field into actual lists rather than strings representing lists, so that I can explode the ingredients and then build a matrix of recipes by ingredients (see the pictures below).
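To make the string-versus-list distinction concrete, here is a small pandas sketch on two made-up rows (the IDs are hypothetical, not taken from the dataset). `explode` treats a string as a scalar and leaves it untouched; it only splits a cell once the cell holds a real list.

```python
import ast

import pandas as pd

# Two made-up rows; the CSV stores each ingredient list as text such as "[10, 20]".
df = pd.DataFrame({"recipe_id": [1, 2], "ingredient_ids": ["[10, 20]", "[20, 30, 40]"]})

# Exploding the raw strings changes nothing: a string is not list-like to explode.
unchanged = df.explode("ingredient_ids", ignore_index=True)
print(len(unchanged))  # 2

# After parsing each string into a Python list, explode yields one row per ingredient.
df["ingredient_ids"] = df["ingredient_ids"].map(ast.literal_eval)
exploded = df.explode("ingredient_ids", ignore_index=True)
print(len(exploded))  # 5
```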

When I run `recipes_exploded["ingredient_ids"] = recipes_exploded['ingredient_ids'].apply(lambda x : ast.literal_eval(x))` in Dask, I get an error saying that this is a custom function that Dask cannot use.
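A plausible cause (my reading of Dask's behavior, worth checking against the exact error text): when `apply` is called without `meta=`, Dask guesses the output type by running the function on a small dummy partition, and for text columns that dummy data is placeholder strings (typically `"foo"`). `ast.literal_eval` cannot parse such a placeholder, so inference fails and Dask reports that it cannot determine the output type of the custom function. The sketch below reproduces only the parsing failure in plain Python; `try_parse` is a hypothetical helper and `"foo"` stands in for Dask's placeholder.

```python
import ast


def try_parse(text):
    """Hypothetical helper: return the parsed value, or the exception's type name."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError) as exc:
        return type(exc).__name__


print(try_parse("[389, 7655, 6270]"))  # [389, 7655, 6270]
print(try_parse("foo"))                # ValueError
```

If that is the cause, the usual remedy is to state the output type yourself so Dask skips the dummy call, for example `recipes_exploded["ingredient_ids"].apply(ast.literal_eval, meta=("ingredient_ids", "object"))`.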

What would be alternative ways (in code) to solve this? I found another post dealing with a similar issue ("use ast.literal_eval with dask Series"), where the comments suggested that the dataset was simply created poorly and should have been serialized properly. The problem is that it isn't my dataset; my primary language is R, and I'm trying to figure out how to work around the issue.
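Assuming every cell is a plain bracketed list of integers such as `"[389, 7655, 6270]"` (an assumption based on the screenshots), two lighter alternatives to `ast.literal_eval` are worth trying: `json.loads`, since that text is also a valid JSON array, or stripping the brackets and splitting on `", "` with the `.str` accessor. The sketch below uses pandas on made-up values. Dask's Series also exposes `.str.strip` and `.str.split`, so the string-method route should carry over with little change; `json.loads` is still a custom Python function, so under Dask it would likely need the same explicit `meta=("ingredient_ids", "object")` as `literal_eval`.

```python
import json

import pandas as pd

# Made-up values in the same text format as the ingredient_ids column.
s = pd.Series(["[389, 7655, 6270]", "[5010, 389]"], name="ingredient_ids")

# Option 1: the text is valid JSON, so json.loads turns it into a list of ints.
as_json = s.map(json.loads)

# Option 2: vectorized string methods, no per-cell parser call.
# The pieces stay strings ("389"), so cast after exploding if you need ints.
as_split = s.str.strip("[]").str.split(", ")

print(as_json.tolist())   # [[389, 7655, 6270], [5010, 389]]
print(as_split.tolist())  # [['389', '7655', '6270'], ['5010', '389']]

# Exploding gives one entry per ingredient ID; astype(int) converts the text pieces.
ids = as_split.explode().astype(int)
print(ids.tolist())  # [389, 7655, 6270, 5010, 389]
```

One caveat with Option 2: an empty list `"[]"` becomes `['']`, which the integer cast would reject, so such rows would need filtering first.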

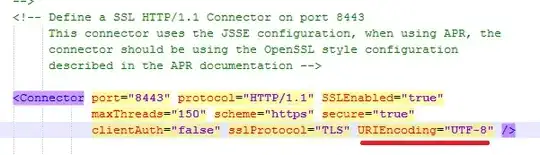

This is what I'm trying to fix:

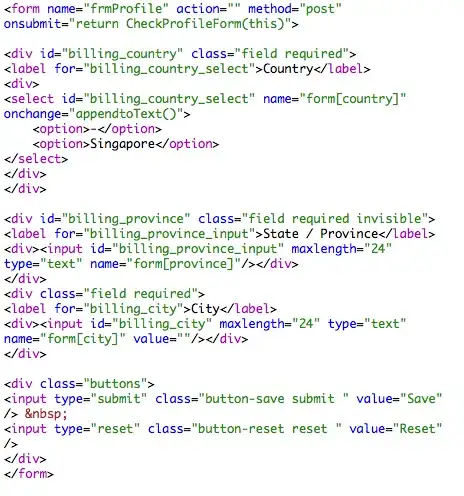

This is my code in Pandas to go from the second picture to the first:

```python
import ast

recipes_exploded = recipes.copy(deep=True)

# Parse each string such as "[389, 7655, 6270]" into a real Python list so it can be exploded.
recipes_exploded["ingredient_ids"] = recipes_exploded["ingredient_ids"].apply(ast.literal_eval)

# One row per (recipe, ingredient) pair.
recipes_exploded = recipes_exploded.explode(column="ingredient_ids", ignore_index=True)

# Keep only the columns needed for the matrix and flag each pair with an integer 1.
recipes_exploded = recipes_exploded[["recipe_id", "ingredient_ids"]].copy()
recipes_exploded["count"] = 1

# Recipes as rows, ingredient IDs as columns; pairs that never occur become 0.
recipes_exploded = recipes_exploded.pivot_table(
    index="recipe_id",
    columns="ingredient_ids",
    values="count",
    fill_value=0,
    aggfunc="sum",
)
```
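Once the IDs are exploded, the flag-then-`pivot_table` steps can likely be collapsed into a single `pd.crosstab` call, which counts how often each (recipe, ingredient) pair occurs and fills missing combinations with 0. A sketch on made-up exploded data (the IDs are hypothetical):

```python
import pandas as pd

# Made-up exploded data: one row per (recipe, ingredient) pair.
exploded = pd.DataFrame({
    "recipe_id": [1, 1, 2, 2, 2],
    "ingredient_ids": [10, 20, 20, 30, 40],
})

# Rows = recipes, columns = ingredient IDs, cells = pair counts (0 when absent).
matrix = pd.crosstab(exploded["recipe_id"], exploded["ingredient_ids"])
print(matrix)
```

If a recipe could list the same ID twice and you want strict 0/1 flags, `matrix.clip(upper=1)` caps the counts.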