Some 2 years ago, I asked a question here and got a satisfying answer. Thing is, the script has recently been returning a lot of errors (over 30%), so I decided to change my approach and ask a more generic question, working with the original images instead of the processed ones I used in my original question.

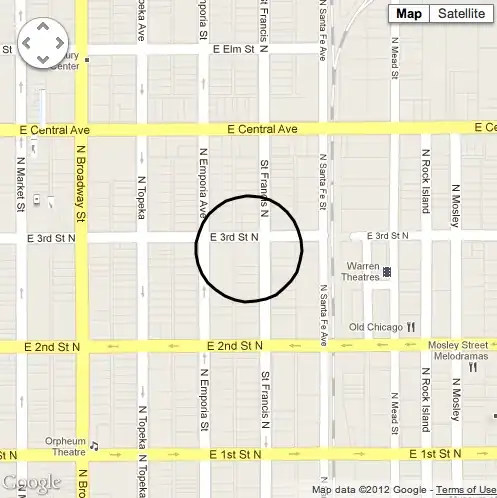

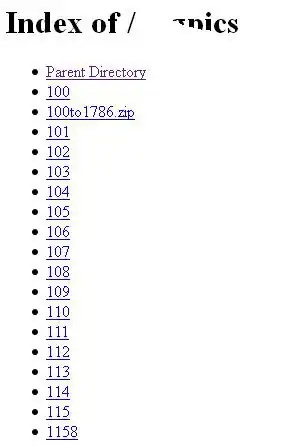

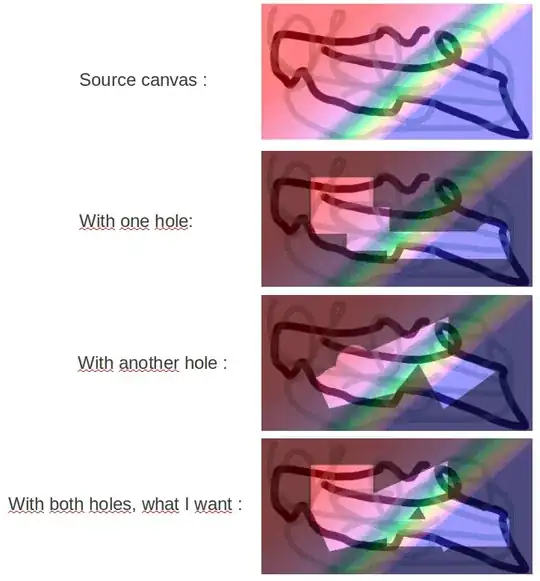

Here are the originals:

As you can see, these examples are slices of the original scanned documents.

The problem lies in their inconsistent quality, both in the original printing and in the subsequent scanning. Sometimes the digits stand out, sometimes they don't. Sometimes the gray is darker, sometimes lighter. Sometimes the print is faulty, with white lines where the printer failed to put down ink.

Furthermore, the font is way too "tight": the digits are too close to each other, sometimes even touching, which prevents me from simply separating each digit so I can clean and OCR it individually.
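If the number of digits per slice is fixed (an assumption here, not something the question states), touching digits can often still be separated with a vertical projection profile. Count the ink in each column, then place each cut at the emptiest column near the position it would have if all digits had equal width. This is a minimal NumPy sketch; `split_touching_digits`, `n_digits`, and `search` are hypothetical names.

```python
import numpy as np


def split_touching_digits(binary_inv: np.ndarray, n_digits: int,
                          search: int = 3) -> list[np.ndarray]:
    """Split a tightly cropped strip of digits into `n_digits` slices.

    Assumes digits are 255 on a 0 background, the strip is cropped to the
    digits, and the digit count is known in advance. Each nominal cut at
    i * width / n_digits is moved to the column with the least ink within
    +/- `search` columns, which tends to hit the narrow "neck" where two
    digits touch.
    """
    ink = (binary_inv > 0).sum(axis=0)  # vertical projection: ink per column
    width = binary_inv.shape[1]
    cuts = [0]
    for i in range(1, n_digits):
        nominal = round(i * width / n_digits)
        lo = max(cuts[-1] + 1, nominal - search)
        hi = min(width - 1, nominal + search)
        # argmin returns the first minimum, so ties go to the leftmost column
        cuts.append(lo + int(np.argmin(ink[lo:hi + 1])))
    cuts.append(width)
    return [binary_inv[:, a:b] for a, b in zip(cuts, cuts[1:])]
```

A search window of a few columns tolerates small variations in glyph width, such as a narrow "1", without letting a cut jump into the middle of a neighboring digit.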

I've tried various approaches with OpenCV, such as blurring followed by several thresholding methods:

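```python
import cv2

img = cv2.imread("scan_slice.png")  # hypothetical file name; any BGR slice
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
blurred = cv2.GaussianBlur(gray, (5, 5), 0)  # Initial cleaning

# Fixed global threshold at 120
s_thresh = cv2.threshold(blurred, 120, 255, cv2.THRESH_BINARY_INV)[1]
# Otsu chooses the global threshold automatically
o_thresh = cv2.threshold(blurred, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]
# Adaptive thresholds: 5x5 neighborhood, constant C subtracted from the local (weighted) mean
ac_thres = cv2.adaptiveThreshold(blurred, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY_INV, 5, 10)
ag_thres = cv2.adaptiveThreshold(blurred, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 5, 4)
```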

I also tried filtering out small specks with connected components:

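```python
import cv2
import numpy as np

img = cv2.imread("scan_slice.png")  # hypothetical file name; any BGR slice
ret, thresh = cv2.threshold(img, 100, 255, cv2.THRESH_BINARY)
opening = cv2.morphologyEx(thresh, cv2.MORPH_OPEN,
                           cv2.getStructuringElement(cv2.MORPH_RECT, (2, 2)))

gray_img = cv2.cvtColor(opening, cv2.COLOR_BGR2GRAY)
_, blackAndWhite = cv2.threshold(gray_img, 127, 255, cv2.THRESH_BINARY_INV)

nlabels, labels, stats, centroids = cv2.connectedComponentsWithStats(
    blackAndWhite, connectivity=8, ltype=cv2.CV_32S)
sizes = stats[1:, cv2.CC_STAT_AREA]  # component areas, skipping label 0 (background)

# Keep only components with an area of at least 4 pixels
img2 = np.zeros(labels.shape, np.uint8)
for i in range(0, nlabels - 1):
    if sizes[i] >= 4:
        img2[labels == i + 1] = 255

res = cv2.bitwise_not(img2)
gaussian = cv2.GaussianBlur(res, (3, 3), 0)
# 0.3*res + 0.7*gaussian blends toward the blurred image and does not sharpen;
# an unsharp mask would weight res above 1 and the blur negatively (e.g. 1.5, -0.5)
unsharp_image = cv2.addWeighted(res, 0.3, gaussian, 0.7, 0)
```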

But I still get results that are inconsistent at best.
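One way to turn "inconsistent" into a number, and to compare each blur/threshold variant against the roughly 30% error rate mentioned at the start, is to score every pipeline on a small hand-labeled set. This is a sketch only: `exact_match_rate`, the `(image_path, expected_digits)` sample format, and the `read_digits` callable are hypothetical stand-ins for whatever preprocessing plus OCR is being tested.

```python
from typing import Callable, Iterable


def exact_match_rate(samples: Iterable[tuple[str, str]],
                     read_digits: Callable[[str], str]) -> float:
    """Fraction of samples whose OCR output matches the expected digits exactly.

    `samples` holds hypothetical (image_path, expected_digits) pairs labeled
    by hand; `read_digits` runs one candidate pipeline on an image path.
    Whitespace in the OCR output is ignored, so "12 34" counts as "1234".
    """
    total = correct = 0
    for path, expected in samples:
        got = "".join(read_digits(path).split())
        correct += int(got == expected)
        total += 1
    return correct / total if total else 0.0
```

Running every variant over the same labeled set makes it clear whether a change actually lowers the error rate or just moves the errors to different images.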

Should I change my approach? What would you guys recommend?