I'm trying to add a series of constraints which make use of an indicator function, but it seems to be breaking the solver.

This is the original formulation of the constraint:

This then has to be broken down into a form suitable for PySCIPOpt:

I'm under the impression this is the big-M method, and it seems like it should work in theory. However, PySCIPOpt does not seem to be able to solve it and returns an error:

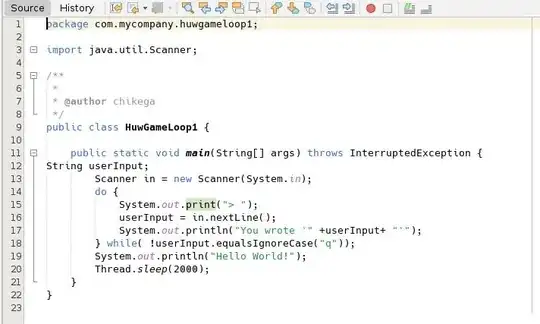
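For reference, the big-M pattern used in the first constraint of the problematic section (`... + BIG_NUM*INDICATOR <= ... + BIG_NUM`) can be checked in plain Python. This is a minimal sketch, not PySCIPOpt code: `big_m_holds` and the sample totals are hypothetical, and `M` is assumed to be an upper bound on how far total buys can exceed total sells.

```python
def big_m_holds(lhs: float, rhs: float, z: int, big_m: float) -> bool:
    """Check the big-M constraint  lhs + M*z <= rhs + M.

    z = 1 reduces it to lhs <= rhs (the constraint is "on").
    z = 0 reduces it to lhs <= rhs + M, which is non-binding as long as
    M is at least the largest possible value of lhs - rhs.
    """
    return lhs + big_m * z <= rhs + big_m


# Hypothetical totals: buys exceed sells by 50.
total_buys, total_sells = 150.0, 100.0
M = 1_000.0  # assumed upper bound on total_buys - total_sells

print(big_m_holds(total_buys, total_sells, z=1, big_m=M))  # False: z=1 forces buys <= sells
print(big_m_holds(total_buys, total_sells, z=0, big_m=M))  # True: z=0 relaxes the constraint
```

The point of the pattern is that `M` only needs to be as large as the biggest possible violation; any larger value adds nothing to the logic.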

Code extract (the problematic section is right at the bottom):

```python
# Create Variables
a, b, x, y = {}, {}, {}, {}

BIG_NUM = 1e15

for i in range(num_bonds):
    x[i] = model.addVar(lb = 0, ub = None, vtype="C", name=f"x{i}")
    a[i] = model.addVar(lb = 0, ub = None, vtype="C", name=f"a{i}")
    b[i] = model.addVar(lb = 0, ub = None, vtype="C", name=f"b{i}")

for t in range(num_periods):
    y[t] = model.addVar(lb = 0, vtype="C", name=f"y{t}")

for i in range(num_bonds):
    # Buy Trades
    model.addCons( a[i] >= p_ask[i] * (x[i] - x_old[i]) )

    # Sell Trades
    model.addCons( b[i] >= p_bid[i] * (x_old[i] - x[i]) )

# Problematic Section
INDICATOR = model.addVar(vtype="B", name=f"INDICATOR")

model.addCons( quicksum(a[i] for i in range(num_bonds)) + BIG_NUM*INDICATOR <= quicksum(b[i] for i in range(num_bonds)) + BIG_NUM )

model.addCons( INDICATOR * quicksum(a[i] for i in range(num_bonds)) <= turnover_max * quicksum(p_bid[i] * x_old[i] for i in range(num_bonds)) )

model.addCons( quicksum(b[i] for i in range(num_bonds)) <= turnover_max * quicksum(p_bid[i] * x_old[i] for i in range(num_bonds)) + \
               INDICATOR * quicksum(b[i] for i in range(num_bonds)) )
```
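Constraints (2) and (3) (numbered as in the EDIT below) multiply the binary `INDICATOR` by a sum of continuous variables, so they are quadratic rather than linear. One common alternative, assuming the trade totals have a finite upper bound, is to write each one as a linear big-M constraint. The sketch below checks in plain Python that the product form and the big-M form agree for both values of the binary; `T`, `M` and the sample grid are hypothetical, and the equivalence also assumes `T >= 0`.

```python
import itertools


def bilinear_2(z, A, T):  # original constraint (2): z * sum(a) <= T
    return z * A <= T


def linear_2(z, A, T, M):  # big-M rewrite of (2): sum(a) <= T + M * (1 - z)
    return A <= T + M * (1 - z)


def bilinear_3(z, B, T):  # original constraint (3): sum(b) <= T + z * sum(b)
    return B <= T + z * B


def linear_3(z, B, T, M):  # big-M rewrite of (3): sum(b) <= T + M * z
    return B <= T + M * z


T = 10.0   # hypothetical turnover limit, i.e. turnover_max * sum(p_bid * x_old)
M = 100.0  # assumed bound: neither trade total ever exceeds T + M
grid = [0.0, 5.0, 10.0, 15.0, 110.0]  # sample trade totals within [0, T + M]

# For every binary value and sample total, both forms accept or reject together.
for z, s in itertools.product((0, 1), grid):
    assert bilinear_2(z, s, T) == linear_2(z, s, T, M)
    assert bilinear_3(z, s, T) == linear_3(z, s, T, M)
print("product and big-M forms agree on the sample grid")
```

In the model itself, the rewrites would read `model.addCons(quicksum(a[i] for i in range(num_bonds)) <= limit + M * (1 - INDICATOR))` and `model.addCons(quicksum(b[i] for i in range(num_bonds)) <= limit + M * INDICATOR)`, where `limit` and `M` are hypothetical names for the turnover limit and a valid bound.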

I've spent days on this and would greatly appreciate any help. Thanks.

EDIT:

Interestingly enough, enabling only two of the three constraints works, i.e.:

```python
# Problematic Section
INDICATOR = model.addVar(vtype="B", name=f"INDICATOR")

model.addCons( quicksum(a[i] for i in range(num_bonds)) + BIG_NUM*INDICATOR <= quicksum(b[i] for i in range(num_bonds)) + BIG_NUM ) # (1) This can be enabled.

model.addCons( INDICATOR * quicksum(a[i] for i in range(num_bonds)) <= turnover_max * quicksum(p_bid[i] * x_old[i] for i in range(num_bonds)) ) # (2) This can be enabled.

model.addCons( quicksum(b[i] for i in range(num_bonds)) <= turnover_max * quicksum(p_bid[i] * x_old[i] for i in range(num_bonds)) + \
               INDICATOR * quicksum(b[i] for i in range(num_bonds)) ) # (3) If this is enabled, the code breaks.
```

HOWEVER, when (1) is enabled (i.e. not commented out), the returned results show that the other constraints (not shown in the code) are being violated, which is strange since the solver reports the solution as optimal.
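One possible explanation for the violated constraints, offered as an assumption rather than a confirmed diagnosis, is numerical: MIP solvers accept a binary value as integral when it lies within a small tolerance of 0 or 1, and a big-M coefficient magnifies that tolerance. The arithmetic below assumes an integrality tolerance of 1e-6; `max_violation` is a hypothetical helper, and the solver's actual tolerance settings should be checked.

```python
def max_violation(big_m: float, int_tol: float) -> float:
    """Slack leaked by the term M*z when z = 1 - int_tol is accepted as z = 1.

    In constraint (1), z = 1 is meant to force sum(a) <= sum(b); with z just
    below 1 the constraint only enforces sum(a) <= sum(b) + M * (1 - z).
    """
    z = 1.0 - int_tol  # a "binary" value the solver may treat as 1
    return big_m * (1.0 - z)


# Compare the question's BIG_NUM with smaller (hypothetical) choices of M.
for big_m in (1e15, 1e6, 1e3):
    print(f"M = {big_m:.0e}: sum(a) can exceed sum(b) by ~{max_violation(big_m, 1e-6):.3g}")
```

Under this assumption, `BIG_NUM = 1e15` would let the buy total exceed the sell total by roughly 1e9 while `INDICATOR` still counts as 1, which is why a big-M is usually chosen as small as the model's actual bounds allow.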