Currently, I am executing my spark-submit commands from Airflow over SSH, using BashOperator with a bash command, but our client does not allow us to SSH into the cluster. Is it possible to execute the spark-submit command from Airflow without SSHing into the cluster?

Kriz

1 Answer

You can use `DataprocSubmitJobOperator` to submit jobs from Airflow. It submits the job through the Dataproc API, so no SSH access to the cluster is needed. Just make sure to pass the correct parameters to the operator. Note that the `job` parameter is a dictionary that follows the structure of a Dataproc Job, so you can use this operator to submit different job types such as PySpark, Pig, Hive, etc.

The code below submits a PySpark job:

    import datetime

    from airflow import models
    from airflow.providers.google.cloud.operators.dataproc import DataprocSubmitJobOperator

    YESTERDAY = datetime.datetime.now() - datetime.timedelta(days=1)
    PROJECT_ID = "my-project"
    CLUSTER_NAME = "airflow-cluster"  # name of created dataproc cluster
    PYSPARK_URI = "gs://dataproc-examples/pyspark/hello-world/hello-world.py"  # public sample script
    REGION = "us-central1"

    PYSPARK_JOB = {
        "reference": {"project_id": PROJECT_ID},
        "placement": {"cluster_name": CLUSTER_NAME},
        "pyspark_job": {"main_python_file_uri": PYSPARK_URI},
    }

    default_args = {
        'owner': 'Composer Example',
        'depends_on_past': False,
        'email': [''],
        'email_on_failure': False,
        'email_on_retry': False,
        'retries': 1,
        'retry_delay': datetime.timedelta(minutes=5),
        'start_date': YESTERDAY,
    }

    with models.DAG(
        'submit_dataproc_spark',
        catchup=False,
        default_args=default_args,
        schedule_interval=datetime.timedelta(days=1)) as dag:

        submit_dataproc_job = DataprocSubmitJobOperator(
            task_id="pyspark_task", job=PYSPARK_JOB, region=REGION, project_id=PROJECT_ID
        )

        submit_dataproc_job
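Since the `job` dictionary follows the Dataproc Job structure, a Scala or Java Spark job should only need a `spark_job` entry in place of `pyspark_job`. Below is a minimal sketch of such a dictionary. The helper name `build_spark_job`, the main class `com.example.Main`, and the bucket path are hypothetical placeholders, not values from the question.

```python
from typing import Dict, List, Optional


def build_spark_job(
    project_id: str,
    cluster_name: str,
    main_class: str,
    jar_file_uris: List[str],
    args: Optional[List[str]] = None,
) -> Dict:
    """Build a Dataproc job dict for a JVM (Scala/Java) Spark job.

    Hypothetical helper: it only assembles the dictionary that would be
    passed as `job=` to DataprocSubmitJobOperator.
    """
    job = {
        "reference": {"project_id": project_id},
        "placement": {"cluster_name": cluster_name},
        "spark_job": {
            "main_class": main_class,              # class containing main()
            "jar_file_uris": list(jar_file_uris),  # JARs added to the classpath
        },
    }
    if args:
        # Optional command-line arguments forwarded to the driver
        job["spark_job"]["args"] = list(args)
    return job


SPARK_JOB = build_spark_job(
    project_id="my-project",
    cluster_name="airflow-cluster",
    main_class="com.example.Main",                     # hypothetical class name
    jar_file_uris=["gs://my-bucket/jars/my-app.jar"],  # hypothetical JAR location
)
```

`SPARK_JOB` would then be passed to the operator the same way as `PYSPARK_JOB`, for example `DataprocSubmitJobOperator(task_id="spark_task", job=SPARK_JOB, region=REGION, project_id=PROJECT_ID)`.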

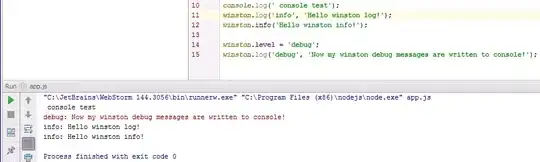

Airflow run:

Airflow logs:

Dataproc job:

Ricco D

- I was able to test spark-submit for PySpark with the above DAG. I believe this should also work for Scala JARs. – Kriz Apr 26 '22 at 03:09

- I have multiple JARs in a Cloud Storage bucket; these are my env_ jars, which my Scala code needs. I tried using `*` to read all the JARs, something like `"jar_file_uris": ["gs://xxxxxxx/xxxx/*"]`, but I am getting this error: details: "File not found: – Kriz Apr 26 '22 at 22:51

- @Kriz you can create a separate question for the "File not found" error when using `"jar_file_uris"`, since it is an entirely different question. – Ricco D Apr 27 '22 at 01:26
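On the `jar_file_uris` comment: the "File not found" error suggests the `*` may have been treated as a literal object name rather than expanded, though the thread does not confirm this. One possible workaround, sketched below under that assumption, is to pass each JAR explicitly. The helper `jar_uris_from_object_names`, the bucket `my-bucket`, and the object names are hypothetical; in practice the names would come from listing the bucket (for example with a Cloud Storage client), which is not shown here.

```python
from typing import Iterable, List


def jar_uris_from_object_names(
    bucket: str, object_names: Iterable[str], prefix: str = ""
) -> List[str]:
    """Turn object names from a bucket listing into explicit gs:// JAR URIs.

    Hypothetical helper: keeps only names under `prefix` that end in ".jar".
    """
    return sorted(
        f"gs://{bucket}/{name}"
        for name in object_names
        if name.startswith(prefix) and name.endswith(".jar")
    )


# Hypothetical listing result; real names would come from the bucket.
names = ["env_jars/a.jar", "env_jars/b.jar", "env_jars/README.txt", "other/c.jar"]
JAR_URIS = jar_uris_from_object_names("my-bucket", names, prefix="env_jars/")
```

The resulting list could then be used as the value of `"jar_file_uris"` in the job dictionary instead of a wildcard path.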