I am trying to find the corners of a square shape that may be rotated, so that I can determine the direction of its primary axes (horizontal and vertical) and apply a perspective transform to straighten it out.

From a prior processing stage I obtain the coordinates of a point (the red dot in the image) that belongs to the shape. Next I flood-fill the shape on a thresholded version of the image to determine its area (the number of pixels filled) and its center (not shown). I compute the center by summing the X and Y coordinates of all filled pixels and dividing each sum by the area.
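
A minimal sketch of that flood-fill step, in Python. It assumes the thresholded image is a list of rows of 0/1 values (`mask`), the seed is given as `(x, y)`, and pixels are 4-connected; the function and parameter names are illustrative only.

```python
from collections import deque

def flood_fill_centroid(mask, seed):
    """Flood-fill from seed and return ((cx, cy), area).

    mask: list of rows, truthy where the pixel belongs to a shape (assumed layout).
    seed: (x, y) of a pixel known to lie on the shape.
    """
    h, w = len(mask), len(mask[0])
    sx, sy = seed
    if not mask[sy][sx]:
        raise ValueError("seed is not on the shape")

    visited = [[False] * w for _ in range(h)]
    visited[sy][sx] = True
    queue = deque([(sx, sy)])
    sum_x = sum_y = area = 0

    while queue:
        x, y = queue.popleft()
        # Accumulate coordinate sums and pixel count for the centroid.
        sum_x += x
        sum_y += y
        area += 1
        # Visit the 4-connected neighbours that are on the shape.
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not visited[ny][nx]:
                visited[ny][nx] = True
                queue.append((nx, ny))

    return (sum_x / area, sum_y / area), area
```

For a 3x3 block of ones inside a 5x5 mask, seeded anywhere in the block, this gives the block's middle pixel as the center and an area of 9.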

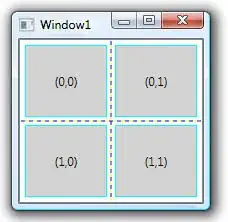

Given this information, what is an easy and reliable way to determine the corners of the shape (blue arrows)?

I was thinking about keeping track of four points P1, P2, P3 and P4 during the fill, where P1 is (minX, minY), P2 is (minX, maxY), P3 is (maxX, minY) and P4 is (maxX, maxY). So P1 is the point with the smallest value of X encountered and, of all points with that X, the one where Y is smallest too. I would then sort them to get a clockwise ordering. But I'm not sure whether this is correct in all cases, or whether it is efficient.
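
To make the idea concrete, here is a small Python sketch of that bookkeeping. It assumes the filled pixels are available as a list of `(x, y)` tuples and reads P3 and P4 as the points with the largest X; `extreme_points` is a made-up name for illustration.

```python
def extreme_points(pixels):
    """Return (P1, P2, P3, P4) from an iterable of (x, y) pixels.

    P1: smallest X, then smallest Y    P2: smallest X, then largest Y
    P3: largest X, then smallest Y     P4: largest X, then largest Y
    """
    pixels = list(pixels)
    p1 = min(pixels, key=lambda p: (p[0], p[1]))
    p2 = min(pixels, key=lambda p: (p[0], -p[1]))
    p3 = max(pixels, key=lambda p: (p[0], -p[1]))
    p4 = max(pixels, key=lambda p: (p[0], p[1]))
    return p1, p2, p3, p4


# Axis-aligned 3x3 square: the four points are the four corners.
square = [(x, y) for x in range(3) for y in range(3)]
print(extreme_points(square))

# Square rotated by 45 degrees (a diamond): only one pixel has the
# smallest X and only one has the largest X, so P1 == P2 and P3 == P4.
diamond = [(x, y) for x in range(5) for y in range(5)
           if abs(x - 2) + abs(y - 2) <= 2]
print(extreme_points(diamond))
```

The diamond case is the kind of input I am worried about: the scheme finds only the left and right corners and misses the top and bottom ones.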

PS: I can't use OpenCV.