I'm trying to plot the ROC curve for my neural network. The network is written in PyTorch and I'm using sklearn to compute the ROC curve. My model does binary classification (right vs. wrong) and also outputs the probability of its prediction.

output = model(batch_X)

_, pred = torch.max(output, dim=1)

I give the model both samples of input data (am I doing this part right, or should it be only one sample of the input data, not both?). I take the probability (the _) and the labels of what both inputs should be, and feed them to sklearn like so:

nn_fpr, nn_tpr, nn_thresholds = roc_curve( "labels go here" , "probability go here" )
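For reference, a minimal sketch of how scores could be passed to `roc_curve`, assuming the model returns raw logits of shape `(N, 2)` and that class 1 is the positive class. Both are assumptions, and the `logits` and `labels` tensors are made-up stand-ins for the real model output. As far as I understand, `roc_curve` wants one score per sample for the positive class, which is not necessarily the maximum value returned by `torch.max`.

```python
import torch
from sklearn.metrics import roc_curve, roc_auc_score

# Hypothetical stand-ins: logits for 6 samples and 2 classes, plus true labels.
logits = torch.tensor([[2.0, -1.0],
                       [1.5, 0.2],
                       [-0.5, 1.0],
                       [0.3, 0.1],
                       [-2.0, 2.5],
                       [-1.0, 0.5]])
labels = torch.tensor([0, 0, 1, 0, 1, 1])

# Softmax turns logits into per-class probabilities that sum to 1 per row.
probs = torch.softmax(logits, dim=1)

# Score for the positive class (assumed to be class 1), one value per sample.
pos_scores = probs[:, 1]

# With real model output, call .detach().cpu() before .numpy().
fpr, tpr, thresholds = roc_curve(labels.numpy(), pos_scores.numpy())
auc = roc_auc_score(labels.numpy(), pos_scores.numpy())
```

If the model already ends in a softmax layer, the `torch.softmax` call would be skipped and the class-1 column used directly.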

Next I plot it with:

plt.plot(nn_fpr,nn_tpr,marker='.')

plt.ylabel('True Positive Rate')

plt.xlabel('False Positive Rate')

plt.show()

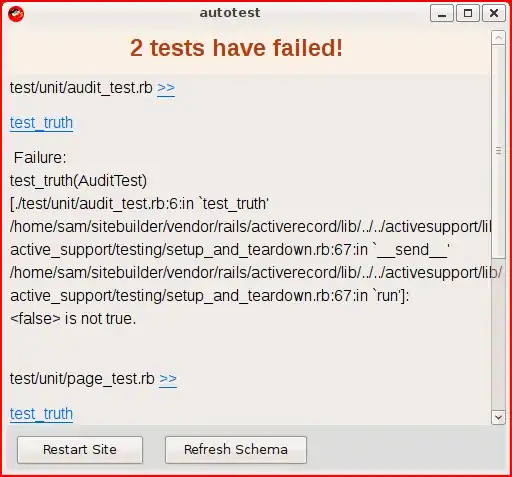

It comes out very accurate, which my model is (0.0167% wrong out of 108,000), but the graph is concave, and I have been told an ROC curve is normally not supposed to be concave (see the attached picture).
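One thing that might be worth checking is which value is used as the score. The sketch below uses made-up probabilities (not my data) to compare the maximum returned by `torch.max` against the class-1 column, assuming class 1 is the positive class. It may or may not explain the shape in the picture.

```python
import torch
from sklearn.metrics import roc_auc_score

# Hypothetical probabilities for 6 samples (columns: class 0, class 1).
probs = torch.tensor([[0.95, 0.05],
                      [0.80, 0.20],
                      [0.10, 0.90],
                      [0.60, 0.40],
                      [0.02, 0.98],
                      [0.30, 0.70]])
labels = torch.tensor([0, 0, 1, 0, 1, 1])

# torch.max returns (values, indices): the winning value and the predicted class.
max_vals, pred = torch.max(probs, dim=1)

# max_vals is the confidence in whichever class won,
# so confidently negative samples get a high score too.
auc_from_max = roc_auc_score(labels.numpy(), max_vals.numpy())

# The class-1 column rises only with evidence for the positive class.
auc_from_pos = roc_auc_score(labels.numpy(), probs[:, 1].numpy())
```

On this toy data the predictions in `pred` are all correct, yet the two scores give different AUC values, because only the class-1 column ranks positives above negatives.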

I have been using neural nets for a while, but I have never been asked to plot an ROC curve. So my question is: am I doing this right? Also, should it be both labels or just one? All the neural network examples I have seen use Keras, which, if I remember right, has a probability function. So I don't know whether PyTorch outputs the probability in the form sklearn wants. The other examples I can find aren't for neural networks, and they use models with a built-in probability function.