I'm learning about indexing in PostgreSQL. I started by creating my own index and analyzing how it affects execution time. I created some tables with the following columns:

I also filled them with data. After that, I created my custom index:

```sql
create index events_organizer_id_index on events(organizer_ID);
```

Then I executed this query (the `events` table contains 148 rows):

```sql
explain analyse select * from events where events.organizer_ID = 4;
```

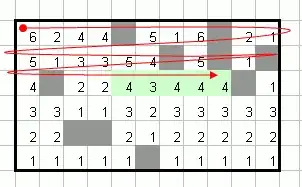

I was surprised that the search was executed without my index and I got this result:

As far as I know, if my index were used, the plan would contain a line like "Index Scan using events_organizer_id_index on events". Can someone explain, or point me to resources on, how to use indexes effectively and where I should use them to see a difference?
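For reference, here is a minimal sketch of a larger experiment I could run. It assumes a hypothetical temporary table `demo_events` (not my real schema) filled with generated data. My guess is that with only 148 rows the whole table fits in very few pages, so a sequential scan may simply be cheaper than using the index, and a bigger table might make the comparison more meaningful. `enable_seqscan` is a real PostgreSQL planner setting, but as far as I understand it is meant for testing only.

```sql
-- Hypothetical demo table: "demo_events" and its columns are assumptions,
-- not the schema from my question.
CREATE TEMP TABLE demo_events (
    id           serial PRIMARY KEY,
    organizer_id integer NOT NULL
);

-- Load 100,000 rows spread over 1,000 organizers, so each organizer
-- matches only about 100 rows (a small fraction of the table).
INSERT INTO demo_events (organizer_id)
SELECT (g % 1000) + 1
FROM generate_series(1, 100000) AS g;

CREATE INDEX demo_events_organizer_id_index ON demo_events (organizer_id);

-- Autovacuum does not analyze temp tables, so refresh planner statistics
-- explicitly before looking at plans.
ANALYZE demo_events;

-- With ~100 matching rows out of 100,000, the planner is likely (not
-- guaranteed) to choose an index scan or a bitmap index scan here.
EXPLAIN ANALYZE SELECT * FROM demo_events WHERE organizer_id = 4;

-- Testing only: discourage sequential scans in this session to compare
-- the index-based plan on my original query, then restore the default.
SET enable_seqscan = off;
EXPLAIN ANALYZE SELECT * FROM events WHERE events.organizer_ID = 4;
RESET enable_seqscan;
```

Comparing the "actual time" values of the two plans on the small `events` table should show whether the sequential scan was really the cheaper choice.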