Here's a distilled version of what we're trying to do. The transformation step is a "Table Input":

    SELECT DISTINCT ${SRCFIELD} FROM ${SRCTABLE}

We want to run that SQL with variables/parameters set from each line in our CSV:

    SRCFIELD,SRCTABLE
    carols_key,carols_table
    mikes_ix,mikes_rec
    their_field,their_table

In this case we'd want it to run the transformation three times, once for each data line in the CSV, to pull the unique values from those fields in those tables. I'm hoping there's a simple way to do this.
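To make the intent concrete, here is roughly the behavior we're after, sketched in plain Python rather than PDI. This is only an illustration of the per-row substitution, not something we're actually running: the `build_queries` helper and the inlined CSV text are made up for the example, and it just prints the SQL instead of executing it against a database.

```python
import csv
import io
from string import Template

# Same SQL as the Table Input step; string.Template understands ${VAR} placeholders.
SQL_TEMPLATE = Template("SELECT DISTINCT ${SRCFIELD} FROM ${SRCTABLE}")

# Inline copy of our CSV so the sketch is self-contained.
CSV_TEXT = """SRCFIELD,SRCTABLE
carols_key,carols_table
mikes_ix,mikes_rec
their_field,their_table
"""


def build_queries(csv_text):
    """Return one SQL string per CSV data row (hypothetical helper)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Each row is a dict such as {"SRCFIELD": "carols_key", "SRCTABLE": "carols_table"}.
    return [SQL_TEMPLATE.substitute(row) for row in reader]


# One "run" per data line; here we only print the query each run would use.
for query in build_queries(CSV_TEXT):
    print(query)
```

So each CSV row should become its own set of variable values, and the sub-transformation should run once per set.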

I think the only difficulty is that we haven't stumbled across the right step/entry and the right settings.

Poking around in a "parent" transformation, the highest hopes we had were:

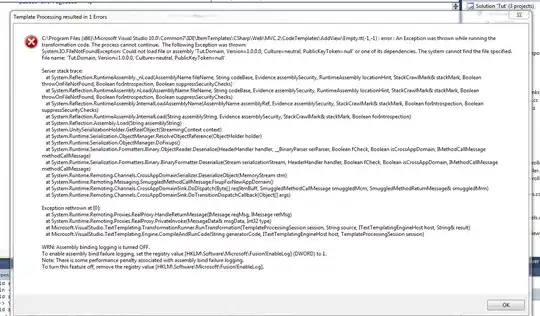

- We tried chaining CSV file input to Set Variables (hoping to feed it to Transformation Executor one line at a time), but that gripes when we have more than one line from the CSV.
- We tried piping CSV file input directly to Transformation Executor, but that only sends TE's "static input value" to the sub-transformation.

We also explored using a job with a Transformation object. We were very hopeful about its "Execute every input row" option, but we haven't figured out what it applies to or how to pipe data to it one row at a time.

Suggestions?