I am using Python with ecs_logging (https://www.elastic.co/guide/en/ecs-logging/python/current/installation.html). It writes its output to a file.

I then have Logstash reading the logs. Here is an example log line:

{"@timestamp":"2022-03-31T11:55:49.303Z","log.level":"warning","message":"Cannot get float field. target_field: fxRate","ecs":{"version":"1.6.0"},"log":{"logger":"parser.internal.convertor","origin":{"file":{"line":317,"name":"convertor.py"},"function":"__get_double"},"original":"Cannot get float field. target_field: fxRate"},"process":{"name":"MainProcess","pid":15124,"thread":{"id":140000415979328,"name":"MainThread"}},"service":{"name":"Parser"},"trace":{"id":"264c816a6cdd1f92a26dfad80bdc3e91"},"transaction":{"id":"a8a1ed2ab0b38ca0"}}
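
As a quick sanity check, here is a minimal Python sketch (standard library only) showing that a line like this parses as JSON and where the correlation IDs sit. The `line` value is a shortened copy of the example above with some fields omitted, not a separate log entry.

```python
import json

# Shortened copy of the example log line above (some fields omitted).
line = (
    '{"@timestamp":"2022-03-31T11:55:49.303Z","log.level":"warning",'
    '"message":"Cannot get float field. target_field: fxRate",'
    '"service":{"name":"Parser"},'
    '"trace":{"id":"264c816a6cdd1f92a26dfad80bdc3e91"},'
    '"transaction":{"id":"a8a1ed2ab0b38ca0"}}'
)

event = json.loads(line)

# "log.level" is a dotted top-level key, while the trace and
# transaction IDs are nested objects.
trace_id = event["trace"]["id"]
transaction_id = event["transaction"]["id"]

print(trace_id)
print(transaction_id)
```

So in the file itself the IDs are present under `trace.id` and `transaction.id` as nested objects.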

Here is my Logstash config:

    input {
      file {
        path => ["/usr/share/logstash/logs/*.log"]
        type => "log"
        start_position => "beginning"
      }
    }

    filter {
      json {
        # Move keys from 'message' json log to root level
        source => "message"
      }
      mutate {
        id => "Transform"
        # Define the environment such as dev, uat, prod...
        add_field => {
          "environment" => "dev"
        }
        # Rename 'msg' key from json log to 'message'
        rename => {
          "msg" => "message"
        }
        # Add service name from `tag`
        copy => {
          "tag" => "service.name"
        }
      }
    }
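
To make clear what I expect this filter block to do to the example event, here is a rough Python imitation of my understanding. It is not Logstash itself: `apply_filter` is a hypothetical helper, the input is a shortened copy of the example log line, and the assumption that `rename` and `copy` do nothing when the source field is absent is mine.

```python
import json

def apply_filter(event):
    """Rough imitation of the filter block above (hypothetical helper)."""
    # json { source => "message" }: parsed keys are merged into the root,
    # so the parsed "message" replaces the raw line.
    event = {**event, **json.loads(event["message"])}
    # rename "msg" => "message": assumed to apply only if "msg" exists.
    if "msg" in event:
        event["message"] = event.pop("msg")
    # copy "tag" => "service.name": assumed to apply only if "tag" exists.
    # In this sketch the target is a literal key named "service.name".
    if "tag" in event:
        event["service.name"] = event["tag"]
    # add_field "environment" => "dev"
    event["environment"] = "dev"
    return event

# Event as the file input would hand it over: the raw line sits in "message".
raw = {
    "type": "log",
    "message": (
        '{"message":"Cannot get float field. target_field: fxRate",'
        '"service":{"name":"Parser"},'
        '"trace":{"id":"264c816a6cdd1f92a26dfad80bdc3e91"},'
        '"transaction":{"id":"a8a1ed2ab0b38ca0"}}'
    ),
}

result = apply_filter(raw)
print(sorted(result))
print(result["transaction"]["id"])
```

Under these assumptions the example event has neither `msg` nor `tag`, so only the `json` step and `add_field` change it.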

It seems that Logstash is not indexing the fields when it inserts the documents into ELK. As a result, the transaction ID is not extracted and APM cannot correlate its data with the logs.

What is missing from the Logstash config, and how do I activate log correlation?

Thanks

Hi @Colton,

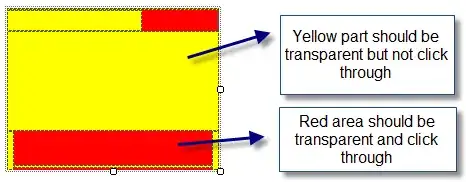

Thanks for your reply. I have included a screenshot above to try to clarify the issue.

I can see that the document is there, and the transaction and trace IDs are there as well.

I can also see that the types exist:

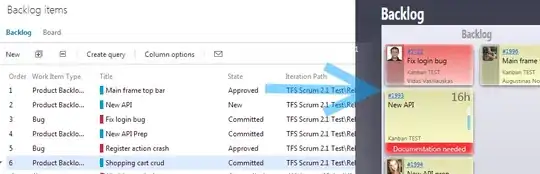

I want to show logs on the APM page:

After searching the APM index, I see, for example:

This ID exists in both the log and APM: when I search for this transaction ID in APM, I can see it there.

Index management